Load spreadsheet data into APEX

Face it: your users are in love with Microsoft Excel, and you can’t do anything about it.

You can show them the excellent Interactive Report and Interactive Grid features of APEX, and train some of your users to use some of their capabilities, but at the end of the day, your users will still download the data into their spreadsheet software to do their own stuff with it.

Once they’ve finished with the data, odds are they’ll come back to you and ask “how do I upload this data back into APEX?” and expect that the answer is merely a flip of a switch to enable a built-in APEX feature. Of course, you know and I know that this is not necessarily a simple thing; it is certainly not just an option to be enabled. Depending on what exactly they mean by “upload this data” it may be reasonably easy to build or it could get quite complex.

File Formats

Typically the data will be provided in some kind of text format (CSV, tab delimited, fixed width) or binary file (XLS or XLSX). If they have copied the data from a table in Excel, it will be in tab-delimited format in the clipboard. Perhaps in some odd instances the user will have received the data from some system in fixed width, XML or JSON format – but this is rare as this is typically part of the build of a system integration solution and users expect these to be “harder”.

Actual Requirements

When your user wants you to provide a facility for uploading data, there are some basic questions you’ll need to ask. Knowing these will help you choose the right approach and solution.

- Where are the files – i.e. are they stored on the database server, or is the user going to upload them via an online APEX application

- How much automation is required, how often – i.e. is this a ad-hoc, rare situation; something they need to do a few times per month; or something that is frequent and needs to be painless and automatic?

- What are the files named – i.e. if they are stored on the database server, do we know what the files will be called?

- How consistent is the data structure?

Data Structure

That last one is important. Will the columns in the file remain the same, or might they change all the time? If the structure is not amenable to automated data matching, can the file structure be changed to accommodate our program? Is the structure even in tabular form (e.g. is it a simple “header line, data line, data line” structure or are there bits and pieces dotted around the spreadsheet)? If it’s an Excel file, is all the data in one sheet, or is it spread across multiple sheets? Should all the sheets be processed, or should some of them be ignored? Can the columns vary depending on requirement – might there be some columns in one file that don’t exist in other files, and vice versa?

Finally, is all the data to be loaded actually encoded in text form? This is an issue where a spreadsheet is provided where the user has, trying to be helpful, highlighted rows with different colours to indicate different statuses or other categorising information. I’ve received spreadsheets where some data rows were not “real” data rows, but merely explanatory text or notes entered by the users – since they coloured the background on those rows in grey, they expected my program to automatically filter those rows out.

Solution Components

Any solution for processing uploaded files must incorporate each of the following components:

- Load – read the raw file data

- Parse – extract the text data from the file

- Map – identify how the text data relates to your schema

- Validate – check that the data satisfies all schema and business rule constraints

- Process – make the relevant changes in the database based on the data

Each of these components have multiple solution options, some are listed here:

- Load – External tables / SQL*Loader; APEX file select

- Parse – External tables / SQL*Loader; APEX; 3rd-party code

- Map – Fixed (we already know which columns appear where) (e.g. SQL*Loader Express Mode); Manual (allow the user to choose which column maps to which target); or A.I. (use some sort of heuristic algorithm to guess which column maps to what, e.g. based on the header labels)

- Validate – Database constraints; and/or PL/SQL API

- Process – INSERT; MERGE; or call a PL/SQL API

The rest of this post is focussed primarily on Parsing and Mapping Text and Excel files.

Solutions for Text files

These are some solutions for parsing text files (CSV, tab-delimited, fixed-width, etc.) that I’ve used or heard of. I’m not including the more standardised data interchange text formats in this list (e.g. XML, JSON) as these are rarely used by end users to supply spreadsheet data. “Your Mileage May Vary” – so test and evaluate them to determine if they will suit your needs.

- External Table / SQL*Loader / SQL*Loader Express Mode (CSV, delimited, and fixed width files)

- APEX Data Workshop (CSV & delimited files)

- APEX Data Loader Wizard (CSV and delimited files only)

- Alexandria PL/SQL Library CSV_UTIL_PKG – Morten Braten, 2010

- LOB2Table PL/SQL (CSV, delimited and fixed width files) – Michael Schmid, 2015

- Excel2Collection APEX process plugin (CSV, delimited, XLS and XLSX files) – Anton Scheffer, 2013

- csv2db – load CSV files from the command line (Linux). Gerald Venzl, 2019

- KiBeHa’s CSV parser – a simple CSV parser in PL/SQL written by Kim Berg Hansen, 2014 (this is a simplistic parser that will not handle all CSV files, e.g. ones that have embedded delimiters with double-quoted text)

It could be noted here that the Interactive Grid in APEX 5.1 and later does support Paste; if the user selects some data from Excel, then selects the corresponding columns and rows in the grid, they can Paste the tab-delimited data right in. Of course, this requires that the columns be in exactly the right order.

External Table

This uses the SQL*Loader access driver and you can look up the syntax in the Oracle docs by searching for “ORACLE_LOADER”. Since Oracle 12.1 you can use the simple “FIELDS CSV” syntax to parse CSV files, e.g.:

create table emp_staging

( emp_no number(4)

, name varchar2(10)

, ...

)

organization external

( default directory ext_dir

access parameters

( records delimited by newline

FIELDS CSV

reject rows with all null fields

)

location ( 'emp.dat' )

)

reject limit unlimited

Note that the filename (location) is hard-coded in the external table definition. Since Oracle 12.2 you can modify some of the attributes of an external table such as file name directly in the SELECT statement, without requiring any ALTER TABLE – Override External Table Parameters From a Query in Oracle Database 12c Release 2 (12.2).

SQL*Loader Express Mode

Since Oracle 12.1 the sqlldr command-line utility supports “Express Mode” which by default reads a CSV file, and loads the data into a given table. Read this quick intro here. This can come in handy for scripting the load of a number of CSV files on an ad-hoc basis into tables that already have exactly the same structure as those CSV files.

> sqlldr userid=scott/tiger table=emp

This expects to find a file named “emp.dat” which contains CSV data to be loaded into the nominated table. Internally, it creates a temporary external table to load the data. Additional parameters can be added to change the filename, delimiters, field names, and other options.

APEX Data Workshop

The APEX Data Workshop is found under SQL Workshop > Utilities > Data Workshop and allows the developer to quickly load data from a CSV, tab-delimited, XML, or copy-and-paste from Excel into an existing or new table. This can be very handy when your client sends you some spreadsheets and you need to quickly load the data as one or more tables.

A related utility is the Create Application from Spreadsheet which does the same thing, plus creates a basic application to report and maintain the data in the new table.

APEX Data Loader Wizard

If your users need to load data on an ad-hoc, on-demand basis, and you don’t know necessarily what the structure of the files will be (e.g. the headings might change, or the order of the columns might change), you can use the APEX Data Loader Wizard to build an APEX application that guides your users in loading, mapping, and validating the data for themselves. If required you can customise the generated pages to add your own processing.

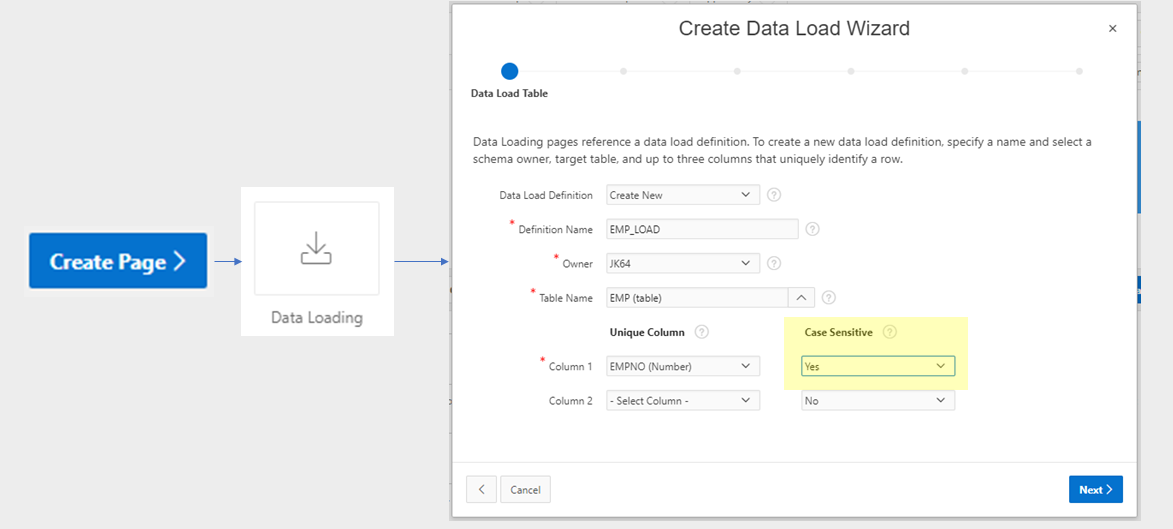

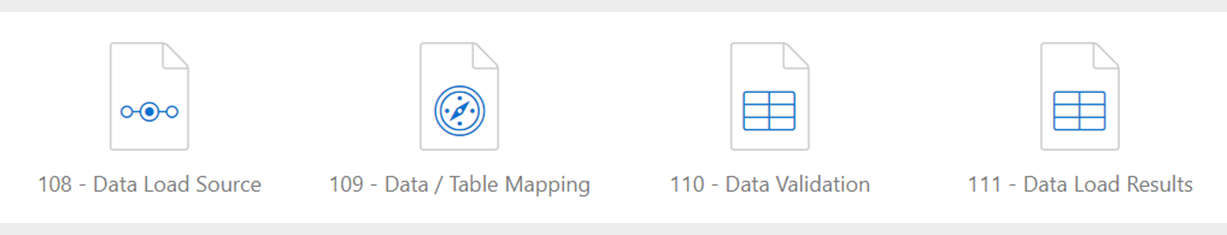

To create the wizard, click Create Page, choose Data Loading, and follow the steps to define the Data Load Definition and its associated pages. The definition determines the target table, the unique column(s) for data matching, transformation rules, and lookups (e.g. to get foreign keys for reference tables). Once this is done, the following four pages will be created for you:

You can customise these pages to modify the look and feel (e.g. moving some of the more complicated options in a collapsible region), or to add your own custom processing for the data.

For example, I will often add an additional process on either the 3rd page (to be run after the “Prepare Uploaded Data” process) that will do further processing of the loaded data. The process would be set up to only run When Button Pressed = “NEXT” and would have a Condition “Item is NULL or Zero” = “P111_ERROR_COUNT”. The result is that on the Data Validation (3rd) page, after reviewing the validation summary, the user clicks “Next” and the data is loaded and processed.

Alternatively, I sometimes want the user to view additional validation or summary information on the 4th page before doing further processing. In this case, I would add the process to the Data Load Results (4th) page, When Button Pressed = “FINISH”. For this to work, you need to modify the FINISH button to Submit the page (instead of redirecting). I also would add a CANCEL button to the page so the user can choose to not run the final processing if they wish.

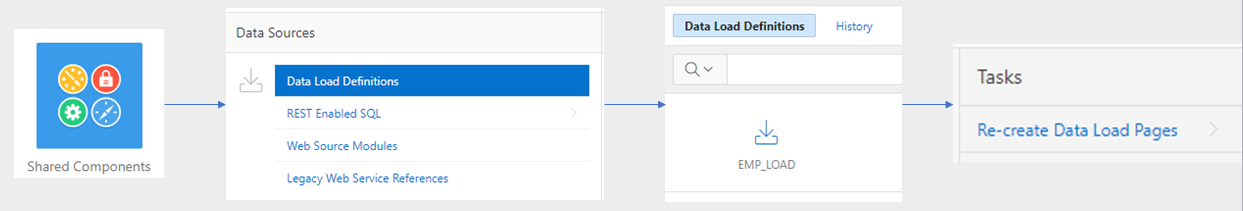

Updating a Data Load Definition

The Data Load Definitions (one for each target table) may be found under Shared Components > Data Load Definitions. Here, you can modify the transformations and lookup tables for the definition. However, if the table structure has changed (e.g. a new column has been added), it will not automatically pick up the change. To reflect the change in the definition, you need to follow the following steps:

- Edit the Data Load Definition

- Click Re-create Data Load Pages

- Delete the new generated pages

This refreshes the Data Load Definition and creates 4 new pages for the wizard. Since you already had the pages you need (possibly with some customisations you’ve made) you don’t need the new pages so you can just delete them.

CSV_UTIL_PKG

The Alexandria PL/SQL Library includes CSV_UTIL_PKG which I’ve used in a number of projects. It’s simple to use and effective – it requires no APEX session, nothing but PL/SQL, and can be called from SQL for any CLOB data. It’s handy when you know ahead of time what the columns will be. You could read and interpret the headings in the first line from the file if you want to write some code to automatically determine which column is which – but personally in this case I’d lean towards using the APEX Data Loader Wizard instead and make the user do the mapping.

If you don’t already have the full Alexandria library installed, to use this package you must first create the schema types t_str_array and t_csv_tab. You will find the definition for these types in setup/types.sql. After that, simply install ora/csv_util_pkg.pks and ora/csv_util_pkg.pkb and you’re good to go.

In the example below I get a CSV file that a user has uploaded via my APEX application, convert it to a CLOB, then parse it using CSV_UTIL_PKG.clob_to_csv:

procedure parse_csv (filename in varchar2) is

bl blob; cl clob;

begin

select x.blob_content into bl

from apex_application_temp_files x

where x.name = parse_csv.filename;

cl := blob_to_clob(bl);

insert into csv_staging_lines

(session_id, line_no, school_code, school_name

,line_type, amount, line_description)

select sys_context('APEX$SESSION','APP_SESSION')

,line_number - 1

,c001 as school_code

,c002 as school_name

,c003 as line_type

,replace(replace(c004,'$',''),',','') as amount

,c005 as line_description

from table(csv_util_pkg.clob_to_csv(cl, p_skip_rows => 1))

where trim(line_raw) is not null;

end parse_csv;

Read more details on CSV_UTIL_PKG here.

LOB2Table

This PL/SQL package written and maintained by Michael Schmid parses CSV, delimited or Fixed-width data embedded in any LOB or VARCHAR2, including in a table with many records. The data can be read from any CLOB, BLOB, BFILE, or VARCHAR2. This makes it quite versatile, it reportedly provides excellent performance, and it includes a pipelined option. It will read up to 200 columns, with a maximum of 32K per record.

It requires execute privileges on SYS.DBMS_LOB and SYS.UTL_I18N and creates some object types and a database package. You can download the source from sourceforge. It appears to be well supported and was most recently updated in June 2018. I recommend checking it out.

select d.deptno, d.dname,

t.row_no,

t.column1, t.column2,

t.column3, t.column4

from dept d

cross join table(

lob2table.separatedcolumns(

d.myclob, /* the data LOB */

chr(10), /* row separator */

',', /* column separator */

'"' /* delimiter (optional) */

) ) t;

Excel2Collection

This is a process type APEX plugin written by Anton Scheffer (AMIS) in 2013, and has been actively maintained since then. You can download it from here or find it on apex.world.

The plugin detects and parses CSV, XLS, XML 2003 and XLSX files which makes it very versatile. It will load 50 columns from the spreadsheet into an APEX collection (max 10K rows). If you need to load larger spreadsheets you can send Anton a donation and your email address and he’ll send you a database package that can handle larger files.

Solutions for Excel files

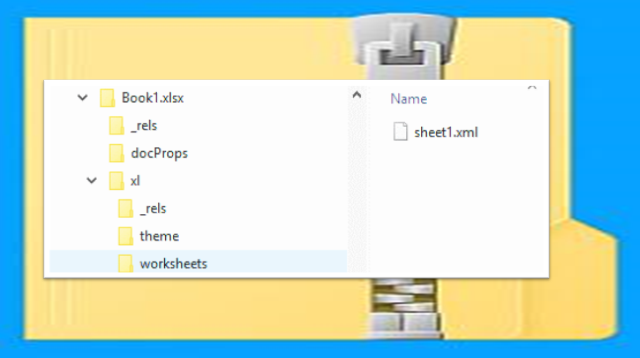

The first thing to know about XLSX files is that they are basically a ZIP file containing a folder structure filled with a number of XML documents. Parsing an XLSX file, therefore, involves first unzipping the file, reading the relevant XML documents and parsing their contents. As usual with any Microsoft file format, the internal structure of these XML documents can be quite complex so I’d much rather leave the work to someone who has already worked out the details. Thankfully, this has largely been done.

These are some solutions for loading data from Microsoft Excel files (XLS, XLSX) that I’ve come across. Again, “YMMV” – so test and evaluate them to determine if they will suit your needs.

- Alexandria PL/SQL Library OOXML_UTIL_PKG (XLSX files only) – Morten Braten, 2011

- Excel2Collection APEX process plugin (CSV, delimited, XLS and XLSX files) – Anton Scheffer, 2013

- Apache POI (XLS and XLSX files) – Christian Neumueller, 2013

- XLSX_PARSER (XLSX files only) – Carsten Czarski, 2018

- ExcelTable (XLS, XLSX, XLSM, XLSB, ODF files) – Marc Bleron, 2016

OOXML_UTIL_PKG

The Alexandria PL/SQL Library includes OOXML_UTIL_PKG which provides a number of utilities for parsing (and creating) XLSX, DOCX and PPTX files. It provides functions to get file attributes (including title, creator, last modified) and a list of worksheets in an XLSX. It provides functions to get the text data from any cell in any sheet, although for reasonable performance if you need more than one cell you should use the functions that returns a range of cells in one go.

Installation requires zip_util_pkg, sql_util_pkg, string_util_pkg, xml_util_pkg, xml_stylesheet_pkg, and ooxml_util_pkg, all of which are in the Alexandria library. Given this list of dependencies (and probably others I didn’t notice) I recommend installing the whole library – after all, there’s a lot of useful stuff in there so it’s worth it.

declare

l_blob blob;

l_names t_str_array := t_str_array('B3','C3','B4','C4','B5','C5');

l_values t_str_array;

begin

l_blob := file_util_pkg.get_blob_from_file('EXT_DIR','sample.xlsx');

l_values := ooxml_util_pkg.get_xlsx_cell_values(l_blob, 'Sheet1', l_names);

for i in 1..l_values.count loop

dbms_output.put_line(l_names(i) || ' = ' || l_values(i));

end loop;

end;

More details on OOXML_UTIL_PKG here.

Excel2Collection

As noted above, the Excel2Collection APEX plugin can detect and parse XLS, XML 2003 and XLSX files (as well as CSV files). The fact that it detects the file type automatically is a big plus for usability.

Apache POI

This solution involves installing Apache POI (“Poor Obfuscation Implementation”), a Java API for Microsoft products, into the database. The solution described by Christian Neumueller here parses XLS and XLSX files although it is admittedly not very efficient.

XLSX_PARSER

In 2018 Carsten Czarski posted a blog article “Easy XLSX Parser just with SQL and PL/SQL” listing a simple database package that parses XLSX files. This uses APEX_ZIP which comes with APEX, although using it does not require an APEX session or collections. It can load the file from a BLOB, or from APEX_APPLICATION_TEMP_FILES. It uses XMLTable to parse the XML content and return the text content of up to 50 columns for any worksheet in the file. It may be modified to support up to 1,000 columns.

To get a list of worksheets from a file:

select * from table(

xlsx_parser.get_worksheets(

p_xlsx_name => :P1_XLSX_FILE

));

To get the cells from a worksheet:

select * from table(

xlsx_parser.parse(

p_xlsx_name => :P1_XLSX_FILE,

p_worksheet_name => :P1_WORKSHEET_NAME

));

I used this solution in a recent APEX application but the client was still on APEX 4.2 which did not include APEX_ZIP; so I adapted it to use ZIP_UTIL_PKG from the Alexandria PL/SQL Library. If you’re interested in this implementation you can download the source code from here.

ExcelTable

ExcelTable is a powerful API for reading XLSX, XLSM, XLSB, XLS and ODF (.ods) spreadsheets. It is based on PL/SQL + Java and reportedly performs very well. It requires a grant on DBMS_CRYPTO which allows it to read encrypted files. It includes an API for extracting cell comments as well. It can return the results as a pipelined table or as a refcursor. It knows how to interpret special cell values and error codes such as booleans, #N/A, #DIV/0!, #VALUE!, #REF! etc.

It includes API calls that allow you to map the input from a spreadsheet to insert or merge into a table, defining how to map columns in the spreadsheet to your table columns, which may improve throughput and may mean it uses less memory to process large files.

The API was written by Marc Bleron in 2016 and has been in active maintenance since then (latest update 22/10/2018 as of the writing of this article). You can read more details and download it from here: https://github.com/mbleron/ExcelTable

EDIT 17/12/2018: thanks to Nicholas Ochoa who alerted me to this one.

APEX 19.1 Statement of Direction

DISCLAIMER: all comments and code samples regarding APEX 19.1 in this article are based primarily on the Statement of Direction and are subject to Oracle’s “Safe Harbour” provision and must therefore not be relied on when making business decisions.

The SOD for the next release of APEX includes the following note, which is exciting:

“New Data Loading: The data upload functionality in SQL Workshop will be modernized with a new drag & drop user interface and support for native Excel, CSV, XML and JSON documents. The same capabilities will be added to the Create App from Spreadsheet wizard and a new, public data loading PL/SQL API will be made available.”

APEX Statement of Direction

Whether this release will include corresponding enhancements to the APEX Data Loader Wizard remains to be seen; I hope the wizard is enhanced to accept XLSX files because this is something a lot of my users would be happy about.

EDIT 6/2/2019: Early Adopter of APEX 19.1 reveals that the Data Workshop supports loading CSV, XLSX, XML and JSON data. If you load an XLSX file, it will allow you to choose which worksheet to load the data from (only one worksheet at a time, though). Also, the Data Loader Wizard has not been updated to use the new API, it still only supports loading from CSV files. This is on the radar for a future version of APEX, however.

APEX_DATA_PARSER

The most promising part of the APEX 19.1 SOD is the PL/SQL API bit, which will mean no plugins or 3rd-party code will be needed to parse XLSX files. It appears the package will be called APEX_DATA_PARSER, providing routines to automatically detect and parse file formats including XLSX, XML, JSON and CSV/tab-delimited files, e.g.:

select * from table(

apex_data_parser.parse(

p_content => blob,

p_file_name => 'test.xlsx',

p_xlsx_sheet_name => 'sheet1.xml'));

select * from table(

apex_data_parser.parse(

p_content => blob,

p_file_name => 'test.xlsx',

p_xlsx_sheet_name => 'sheet1.xml'));

select * from table(

apex_data_parser.parse(

p_content => blob,

p_file_name => 'test.xml'));

select * from table(

apex_data_parser.parse(

p_content => blob,

p_file_name => 'test.js'));

select * from table(

apex_data_parser.parse(

p_content => blob,

p_file_name => 'test.csv'));

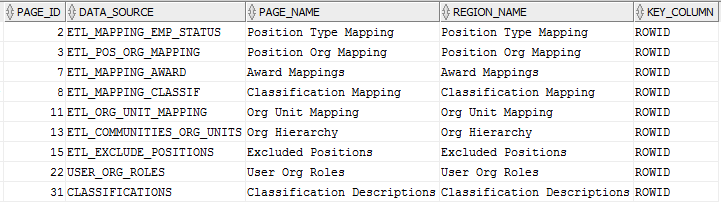

Capability Matrix

| Method | CSV | Fixed width | XLS | XLSX |

|---|---|---|---|---|

| External Table | Yes | Yes | ||

| APEX Data Workshop | Yes | 19.1 | ||

| APEX Data Loader Wizard | Yes | |||

| CSV_UTIL_PKG | Yes | |||

| LOB2Table | Yes | Yes | ||

| Excel2Collection | Yes | Yes | Yes | |

| OOXML_UTIL_PKG | Yes | |||

| Apache POI | Yes | Yes | ||

| XLSX_PARSER | Yes | |||

| APEX_DATA_PARSER | 19.1 | 19.1 | ||

| ExcelTable | Yes | Yes | ||

| csv2db | Yes |

Have I missed a tool or 3rd-party code that you have found useful for parsing files to load into your APEX application? If so, please comment below.

This blog post is partially based on the following presentation delivered at the AUSOUG Connect 2018 Perth conference, November 2018.

Related

- SQL*Loader Express: https://connor-mcdonald.com/2015/08/17/loading-file-data-easier-than-you-think/

- Alexandria PL/SQL Library: https://github.com/mortenbra/alexandria-plsql-utils

- LOB2Table: https://sourceforge.net/p/lob2table/wiki/Home/

- Excel2Collection (Anton Scheffer): https://github.com/antonscheffer/excel2collections

- ORDS excel2collection (Kallman): https://joelkallman.blogspot.com/2017/06/excel2collection-functionality-of-ords.html

- Apache POI (Christian Neumueller): https://chrisonoracle.wordpress.com/2013/11/13/read-excel-file-in-plsql-using-java-in-the-db/

- XLSX_PARSER (Carsten Czarski): https://blogs.oracle.com/apex/easy-xlsx-parser%3a-just-with-sql-and-plsql

- APEX 19.1 Statement of Direction https://apex.oracle.com/en/learn/resources/sod/

- ExcelTable https://github.com/mbleron/ExcelTable

So you’ve built an APEX application to solve a problem for one client, or one department, or just yourself – and you think it might be useful for others as well. How do you make that application available for other users, departments, or companies to reuse, while ensuring each sees only their own data and cannot mess around with others’ data?

So you’ve built an APEX application to solve a problem for one client, or one department, or just yourself – and you think it might be useful for others as well. How do you make that application available for other users, departments, or companies to reuse, while ensuring each sees only their own data and cannot mess around with others’ data? a. Tenant isolation – no tenant sees data for another tenant; no tenant can affect the app’s behaviour for another tenant; protect against “noisy neighbours” (i.e. those which impact system capacity and performance).

a. Tenant isolation – no tenant sees data for another tenant; no tenant can affect the app’s behaviour for another tenant; protect against “noisy neighbours” (i.e. those which impact system capacity and performance).

I wanted to use the “Cards” report template for a small report which lists file attachments. When the user clicks on one of the cards, the file should download and open in a new tab/window. Unfortunately, the Cards report template does not include a placeholder for extra attributes for the anchor tag, so it won’t let me add “target=_blank” like I would normally.

I wanted to use the “Cards” report template for a small report which lists file attachments. When the user clicks on one of the cards, the file should download and open in a new tab/window. Unfortunately, the Cards report template does not include a placeholder for extra attributes for the anchor tag, so it won’t let me add “target=_blank” like I would normally.