My current client has a large number of APEX applications, one of which is a doozy. It is a mission-critical and complex application in APEX 4.0.2 used throughout the business, with an impressively long list of features and an equally impressively long list of enhancement requests in the queue.

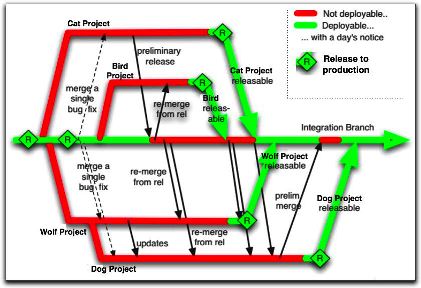

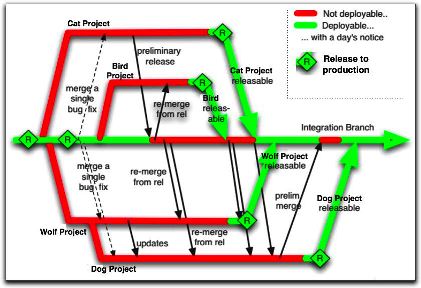

They always have a number of projects on the go with it, and they wanted us to develop two major revisions to it in parallel. In other words, we’d have v1.0 (so to speak) in Production, which still needed support and urgent defect fixing, v1.1 in Dev1 for project A, and v1.2 in Dev2 for project B. Oh, and we don’t know if Project A will go live before Project B, or vice versa. We have source control, so we should be able to branch the application and have separate teams working on each branch, right?

We said, “no way”. Trying to merge changes from a branch of an APEX app into an existing APEX app is not going to work, practically speaking. The merged script would most likely fail to run at all, or if it somehow magically runs, it’d probably break something.

So we pushed back a bit, and the terms of the project were changed so that development of project A would be done first, and the development of project B would follow straight after. So at least now we know that v1.2 can be built on top of v1.1 with no merge required. However, we still had the problem that production defect fixes would need to be done on a separate version of the application in dev, and that they needed to continue being deployed to SIT/UAT/PROD without carrying any changes from our projects.

The solution we have used is to have two copies of dev, each with its own schema, APEX application and version control folder: I’ll call them APP and APP2. We took an export of APP and created APP2, and instructed the developer who was tasked with production defect fixes to manually duplicate his changes in both APP and APP2. That way the defect fixes were “merged” in a manual fashion as we went along – also, it meant that the project development would gain the benefit of the defect fixes straight away. The downside was that everything worked and acted as if they were two completely different and separate applications, which made things tricky for integration.

Next, for developing project A and project B, we needed to be able to make changes for both projects in parallel, but we needed to be able to deploy just Project A to SIT/UAT/PROD without carrying the changes from project B with it. The solution was to use APEX’s Build Option feature (which has been around for donkey’s years but I never had a use for it until now), in combination with Conditional Compilation on the database schema.

I created a build option called (e.g.) “Project B”. I set Status = “Include”, and Default on Export = “Exclude”. What this means is that in dev, my Project B changes will be enabled, but when the app is exported for deployment to SIT etc the build option’s status will be set to “Exclude”. In fact, my changes will be included in the export script, but they just won’t be rendered in the target environments.

When we created a new page, region, item, process, condition, or dynamic action for project B, we would mark it with our build option “Project B”. If an existing element was to be removed or replaced by Project B, we would mark it as “{NOT} Project B”.

Any code on the database side that was only for project B would be switched on with conditional compilation, e.g.:

$IF $$projectB $THEN

PROCEDURE my_proc (new_param IN ...) IS...

$ELSE

PROCEDURE my_proc IS...

$END

When the code is compiled, if the projectB flag has been set (e.g. with ALTER SESSION SET PLSQL_CCFLAGS='projectB:TRUE';), the new code will be compiled.

Build Options can be applied to:

- Pages & Regions

- Items & Buttons

- Branches, Computations & Processes

- Lists & List Entries

- LOV Entries

- Navigation Bar & Breadcrumb Entries

- Shortcuts

- Tabs & Parent Tabs

This works quite well for 90% of the changes required. Unfortunately it doesn’t handle the following scenarios:

1. Changed attributes for existing APEX components – e.g. some layout changes that would re-order the items in a form cannot be isolated to a build option.

2. Templates and Authorization Schemes cannot be marked with a build option.

On the database side, it is possible to detect at runtime if a build option has been enabled or not. In our case, a lot of our code was dependent on schema structural changes (e.g. new table columns) which would not compile in the target environments anyway – so conditional compilation was a better solution.

Apart from these caveats, the use of Build Options and Conditional Compilation has made the parallel development of these two projects feasible. Not perfect, mind you – but feasible. The best part? There’s a feature in APEX that allows you to view a list of all the components that have been marked with a Build Option – this is accessible from Shared Components -> Build Options -> Utilization (tab).

Enhancement Requests:

1. If Build Options could be improved to allow the scenarios listed above, I’d be glad. In a perfect world, I should be able to go into APEX, select “Project B”, and all my changes (adding/modifying/removing items, regions, pages, LOVs, auth schemes, etc) would be marked for Project B. I could switch to “Project A”, and my changes for Project B would be hidden. I think this would require the APEX engine to be able to have multiple definitions of each item, region or page, one for each build option. Merging changes between build options would need to be made possible, somehow – I don’t hold any illusions that this would be a simple feature for the APEX team to deliver.

2. Make the items/regions/pages listed in the Utilization tab clickable, so I can easily click through and change properties on them.

3. Another thing I’d like to see from the APEX team is built-in GUI support for exporting applications as a collection of individual scripts, each independently runnable – one for each page and shared component. I’m aware there is a Java tool for this purpose, but the individual scripts it generates cannot be run on their own. For example, if I export a page, I should be able to import that page into another copy of the same application (but with a different application ID) to replace the existing version of that page. I should be able to check in a change to an authorization scheme or an LOV or a template, and deploy just the script for that component to other applications, even in other workspaces. The export feature for all this should be available and supported using a PL/SQL API so that we can automate the whole thing and integrate it with our version control and deployment software.

4. What would be really cool, would be if the export scripts from APEX were structured in such a way that existing source code merge tools could merge different versions of the same APEX script and result in a usable APEX script. This already works quite well for our schema scripts (table scripts, views, packages, etc), so why not?

Recently I had a bit of a saga with a public-facing website running on Oracle APEX (www.foothillschurch.org.au, if you’re curious) getting hammered by Googlebot. We went live with a new version of the site, but I’d forgotten to make sure that all the links set the Session ID to 0 (zero).

What is this session ID?

Every visit to an APEX application needs to have a session. Each unique session is recorded in the database, and keeps track of the state of all the variables for the various pages you visit. Normally, the session is identified by a Session ID which is embedded in the “p” parameter of the URL.

For example, if you visit the page:

http://www.foothillschurch.org.au/apex/f?p=102:1

You’ll notice that the “p” parameter only specifies the app ID (102) and the page ID (1). APEX responds with a 302 temporary redirect, which tells the client to go to a new URL containing a newly generated session ID, e.g.:

http://www.foothillschurch.org.au/apex/f?p=102:1:45164531548964:::::

Behind the scenes, it’s not just changing the URL – it’s also sending a cookie to the client to be used for subsequent calls. I’ll get back to this later.

Whenever you navigate around a normal apex site, the session ID gets copied into each link so that the user’s session is preserved. If you manually change or remove the session ID from the URL, apex will redirect you to a newly created session ID.
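To make the URL structure concrete, here is a minimal Python sketch that splits the “p” parameter into its colon-separated positions. The function name `parse_apex_p` and the dictionary keys are my own, chosen purely for illustration, and only the first three positions (application, page, session) are handled because those are the ones discussed here.

```python
from urllib.parse import urlparse, parse_qs

def parse_apex_p(url):
    """Split the APEX "p" parameter into application, page and session parts.

    Illustrative helper only: the name and the returned keys are assumptions,
    not part of any APEX API.
    """
    query = urlparse(url).query
    p_value = parse_qs(query)["p"][0]
    parts = p_value.split(":")
    # Pad so that missing trailing positions come back as empty strings.
    parts += [""] * (3 - len(parts))
    return {"app": parts[0], "page": parts[1], "session": parts[2]}

first = parse_apex_p("http://www.foothillschurch.org.au/apex/f?p=102:1")
second = parse_apex_p(
    "http://www.foothillschurch.org.au/apex/f?p=102:1:45164531548964:::::"
)
print(first)   # no session yet, so "session" is an empty string
print(second)  # the session position now holds the generated ID
```

The first URL has an empty session position, which is exactly the case where APEX has to create a session and redirect.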

In case you’re wondering, there’s no significant security risk behind the exposed session ID – no-one can “hijack” your session, even if they copy your session ID directly. That’s because there’s a cookie behind the scenes with a secret value that must match up with the session ID, and only someone with sufficient access to the database server could get enough data to do that.

If you store the URL containing a session ID (e.g. in your bookmarks) and re-use it much later, your session will have expired – in which case APEX will create a new session, and 302 temporary redirect you to a new URL with the new session ID. Therefore, users can safely bookmark any page in apex.

But now we come, finally, to Googlebot, that little rascal. Now, we would like our public-facing site to be indexed by Google, so we need all the pages of the site that have relevant info to be crawlable.

Normally, Googlebot starts from a link to your page (e.g. on another website, or in a sitemap you submit to Google), such as:

http://www.foothillschurch.org.au/apex/f?p=102:1

It checks that the URL is not forbidden by your robots.txt, and sends a request to your server for it. If the response is 200 OK and has a body, Googlebot indexes the page contents, extracts any links from it, and crawls them. Actually, it doesn’t crawl them straight away – it just adds them onto the end of a queue somewhere to be crawled later.

If the response is a 4xx (permanent error) or 5xx (temporary error), Googlebot seems to put the URL back on the queue for a few more goes before it gives up.

If the response is a 3xx redirect, and this is the kicker, Googlebot does not always perform the redirect straight away. It may take the new URL and just add it onto the end of the queue to be crawled later. It seems to me (based on what I’ve seen in my apache logs) that if the URL is different from the first, Googlebot will queue it up for later; but if the URL is identical, it will usually try it straight away.

You may see the problem here: Googlebot visits:

http://www.foothillschurch.org.au/apex/f?p=102:1

Our site creates a session, and responds with a 302 temporary redirect to:

http://www.foothillschurch.org.au/apex/f?p=102:1:48327482923832:::::

Googlebot dutifully notes this new URL and queues it up to crawl later. Meanwhile, our server is waiting patiently for it to get back, but it never does – so the session automatically expires. Much later, Googlebot visits:

http://www.foothillschurch.org.au/apex/f?p=102:1:48327482923832:::::

Our site sees the expired session, creates a new one, and responds with another 302 temporary redirect to:

http://www.foothillschurch.org.au/apex/f?p=102:1:9783829383342:::::

Googlebot dutifully notes this new URL and queues it up to crawl later, etc. etc. etc. Also, it’s not even as benign as that: each URL is not tried just once, but many many times (depending on what speed setting you’ve got the crawler on) – and every single time, our server responds with a brand-new, unique session ID. I hope you can now see why our little site crashed under the load – it quickly filled up the apache logs, it quickly filled up the debug logs in apex, and it quickly overflowed the poorly-configured archive log.
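The loop is easier to see as a toy simulation. This is only a sketch of the behaviour I inferred from my logs, not how Googlebot or APEX are actually implemented: the function `simulate_crawl`, the starting session number and the one-URL queue are all assumptions made for illustration.

```python
import itertools
from collections import deque

def simulate_crawl(requests_made):
    """Toy model of the redirect loop; not real Googlebot or APEX behaviour.

    Returns (sessions_created, urls_still_queued).
    """
    session_ids = itertools.count(1000)   # stand-in for APEX session IDs
    queue = deque(["f?p=102:1"])          # the crawler's to-do list
    sessions_created = 0

    for _ in range(requests_made):
        queue.popleft()
        # Whatever session the URL carried has expired by now (or it had
        # none), so the server creates a fresh one and answers with a 302.
        sessions_created += 1
        # The crawler does not follow the redirect; it queues the new URL.
        queue.append(f"f?p=102:1:{next(session_ids)}:::::")

    return sessions_created, len(queue)

print(simulate_crawl(50))  # prints (50, 1)
```

Every request costs the server a new session, and the queue never empties: there is always one more URL waiting, each with a session ID that will have expired by the time it is tried.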

The way to solve this problem is to stop exposing these session IDs in the URL – and in Apex you can do that by setting the session ID to zero, e.g.:

http://www.foothillschurch.org.au/apex/f?p=CHURCH:1:0

Behind the scenes, Apex still creates a session, but whenever it generates a URL with #SESSION# it substitutes zero instead of the internal session ID. This method is great for people who wish to bookmark a page in an application that doesn’t require authentication. It also seems to work for the Googlebot crawler.

The above URL will still cause a 302 temporary redirect, however; apex will redirect it to:

http://www.foothillschurch.org.au/apex/f?p=CHURCH:1:0:::::

You might think that this final URL would stop the redirects, wouldn’t you? Well, it doesn’t. You can see what happens if you open this URL in Google Chrome in incognito mode. First, open an incognito window, then choose the Tools menu, Developer Tools. Select the Network tab. Then, paste the URL into the address bar and press Enter.

You will find that the first call to apex/f receives a 302 (temporary redirect). If you click this entry and choose Headers, you’d see something like this:

Request URL:http://www.foothillschurch.org.au/apex/f?p=CHURCH:1:0:::::

Request Method:GET

Status Code:302 Found

Request Headers:

Accept:text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

Accept-Charset:ISO-8859-1,utf-8;q=0.7,*;q=0.3

Accept-Encoding:gzip,deflate,sdch

Accept-Language:en-US,en;q=0.8

Connection:keep-alive

Host:www.foothillschurch.org.au

User-Agent:Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.835.187 Safari/535.1

Response Headers:

Cache-Control:max-age=0

Connection:Keep-Alive

Content-Length:0

Content-Type:text/html; charset=UTF-8

Date:Thu, 06 Oct 2011 22:54:10 GMT

Expires:Thu, 06 Oct 2011 22:54:10 GMT

Keep-Alive:timeout=10, max=100

Location:f?p=CHURCH:1:0:::::

Server:Oracle XML DB/Oracle Database

Set-Cookie:WWV_CUSTOM-F_5238514445419534_102=D6E147387BD4C9DA

WWV_PUBLIC_SESSION_102=2140144576372238

X-DB-Content-length:0

Notice that the request sent no cookies, and the response was a 302 including some cookies (WWV_CUSTOM-F_blablabla and WWV_PUBLIC_SESSION_102).

If you click on the next line (the second call to apex/f) and look at the Headers view, you’ll see this interaction instead:

Request URL:http://www.foothillschurch.org.au/apex/f?p=CHURCH:1:0:::::

Request Method:GET

Status Code:200 OK

Request Headers:

Accept:text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

Accept-Charset:ISO-8859-1,utf-8;q=0.7,*;q=0.3

Accept-Encoding:gzip,deflate,sdch

Accept-Language:en-US,en;q=0.8

Connection:keep-alive

Cookie:WWV_CUSTOM-F_5238514445419534_102=D6E147387BD4C9DA; WWV_PUBLIC_SESSION_102=2140144576372238

Host:www.foothillschurch.org.au

User-Agent:Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.835.187 Safari/535.1

Response Headers:

Cache-Control:max-age=0

Connection:Keep-Alive

Content-Type:text/html; charset=UTF-8

Date:Thu, 06 Oct 2011 22:54:10 GMT

Expires:Thu, 06 Oct 2011 22:54:10 GMT

Keep-Alive:timeout=10, max=99

Server:Oracle XML DB/Oracle Database

Transfer-Encoding:chunked

X-DB-Content-length:11291

This time, the request included the cookies, and the apex engine matched them against a valid and current session; so it responds with the desired 200 (OK) response and the body of the web page.

Gradually, as I was working all this out, I fixed individual problems one by one – turning off debug mode in the application, setting the crawler to a slower speed, and fixing the archive logging. I also added a rel=canonical link in the header of every page. However, the root cause was these URLs being tried by Googlebot, which were quickly escalating as time went by. I didn’t want to use robots.txt to stop it completely, although that might be a valid solution for some cases, because that would remove our Google listing.

I raised a question on the Google webmaster forum to see if there was some way to remove all these URLs from the Googlebot queue that had session IDs. The only way to remove URLs is to (a) add them to your robots.txt, and (b) submit individual URLs to be removed via Google webmaster tools.

In the end, with some help from some contributors to the forum, I worked out how to set up Apache to stop these session IDs in their tracks, by doing a 301 (permanent redirect) to a URL with a 0 (zero) session ID. The magic words, added to my apache conf file, were:

RewriteCond %{QUERY_STRING} ^p=102:([a-z0-9]*):([0-9]*):(.*)$ [NC,OR]

RewriteCond %{QUERY_STRING} ^p=CHURCH:([a-z0-9]*):([1-9][0-9]*):(.*)$ [NC]

RewriteRule /apex/f /apex/f?p=CHURCH:%1:0:%3 [R=permanent,L]

These directives look for any URL with a parameter p where the app ID is 102 or “CHURCH”, and redirect to a new URL that is identical except that it uses the app alias (CHURCH) and sets the session ID to 0.

Breaking it down:

RewriteCond introduces a condition for the following RewriteRule. It’s like an “IF” test.

%{QUERY_STRING} means we want to test the query string (i.e. the part of the URL following the ?).

^p=102:([a-z0-9]*):([0-9]*):(.*)$ this is the regular expression that is used to test for a match. In this case, it is looking for a query string that starts with “p=102:“, followed by a string of letters or digits (the page ID or alias), followed by “:“, followed by a string of digits (the session ID), followed by “:“, and ending with any string of characters. The parts in parentheses – i.e. ([a-z0-9]*) and ([0-9]*) and (.*) will be available for later reuse as substitution variables %1, %2 and %3.

^p=CHURCH:([a-z0-9]*):([1-9][0-9]*):(.*)$ is a similar regular expression, with a slightly different rule for the middle part (the session ID) – it only matches where the session ID is not zero – ([1-9][0-9]*) only matches a string of digits that starts with 1-9. This is because we don’t want our rewrite rule triggering if the app alias is already “CHURCH” and the session ID is already zero.

[NC] “not case sensitive” flag – so that “church” and “Church” will match as well.

[OR] “OR” flag – the condition should be “OR”-ed with the following condition. If either of the RewriteCond directives match, then we want to use the same RewriteRule.

RewriteRule directs the engine to modify the request URI.

/apex/f identifies the part of the request to be rewritten.

/apex/f?p=CHURCH:%1:0:%3 is the rewritten URL. %1 and %3 extract the page ID/alias, and the part of the query string following the session ID, respectively.

[R=permanent] “Redirect” flag – by default this would do a 302 (temporary) redirect, but we have specified “permanent” so that it will do a 301 permanent redirect.

[L] “Last” flag – no further rewrite rules (if any) should be applied.
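If you want to check the regular expressions before touching your Apache config, the same matching logic can be mimicked in Python. This is only a sketch: the function name `rewrite_query` is made up, [NC] is approximated with `re.IGNORECASE`, and trying the patterns in order stands in for the [OR] flag. It does not reproduce everything mod_rewrite does.

```python
import re

# The two RewriteCond patterns from the config above.
CONDITIONS = [
    re.compile(r"^p=102:([a-z0-9]*):([0-9]*):(.*)$", re.IGNORECASE),
    re.compile(r"^p=CHURCH:([a-z0-9]*):([1-9][0-9]*):(.*)$", re.IGNORECASE),
]

def rewrite_query(query_string):
    """Return the redirect target for a query string, or None if no rule applies."""
    for pattern in CONDITIONS:
        match = pattern.match(query_string)
        if match:
            # Groups 1 and 3 play the role of %1 and %3; group 2 (the old
            # session ID) is deliberately thrown away.
            page, _session, rest = match.groups()
            return f"/apex/f?p=CHURCH:{page}:0:{rest}"
    return None

print(rewrite_query("p=102:1:48327482923832:::::"))    # numeric app ID
print(rewrite_query("p=church:1:9783829383342:::::"))  # alias in lower case
print(rewrite_query("p=CHURCH:1:0:::::"))              # already canonical
```

The first two calls both give `/apex/f?p=CHURCH:1:0:::::`, and the third gives `None`, which is what stops the rule from redirecting forever.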

It works! Everything is now back to sane levels, and I can now enjoy life.

UPDATE Oct 2020

Introduced in APEX 20.1 is the new Friendly URLs option which changes things a lot for this topic – Friendly URLs on a public page no longer require a session ID.

If the page is public and the URL has no session parameter, APEX immediately returns a 200 OK response with the page – which is perfect for Googlebot.

There is officially now no good excuse for not running your own instance of Oracle 11g and getting into APEX 4 development, or just playing with SQL and PL/SQL.

Note: another easy-to-follow guide to this process was published back in April by Alex Gorbachev here: http://www.pythian.com/news/22045/how-to-get-started-with-amazon-ec2-oracle-11g-xe-example/, which may suit your needs – although it is based on an AMI with a beta release of 11gXE pre-installed. Some of the instructions below are virtually identical to his.

1. Gather your software.

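You’ll need:

- The Oracle Database 11g Express Edition installer for Linux x64 (oracle-xe-11.2.0-1.0.x86_64.rpm.zip)

- PuTTY (which comes with PUTTYGEN.EXE)

- An SCP/SFTP client such as WinSCP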

What, no OS, you ask? Nah – the easiest way to get started is to start with an AMI (Amazon Machine Image) that has linux installed.

Install PuTTY and your SCP/SFTP client on your computer, then:

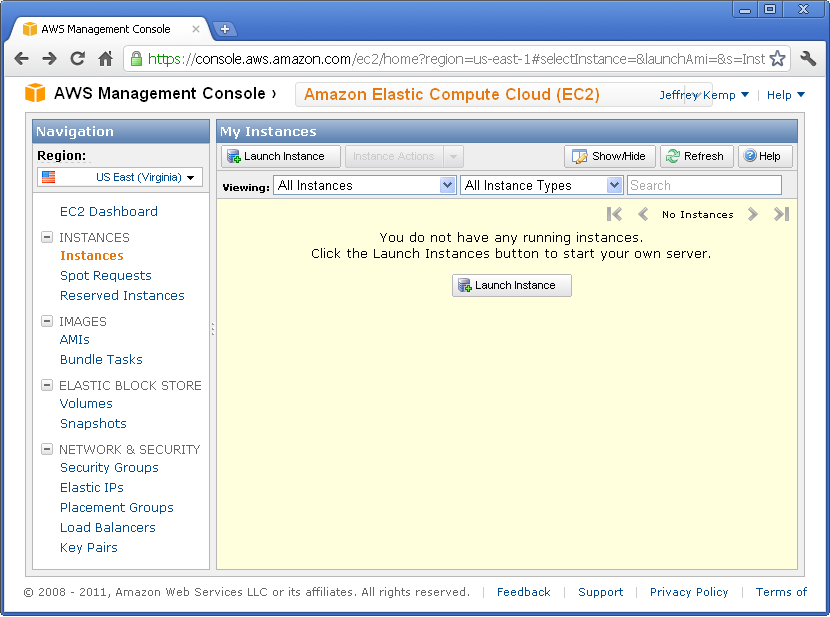

2. Get your Amazon EC2 account.

Go to http://aws.amazon.com/ and click “Sign Up Now”. You’ll need an EC2 account and you’ll need to give them some credit card details.

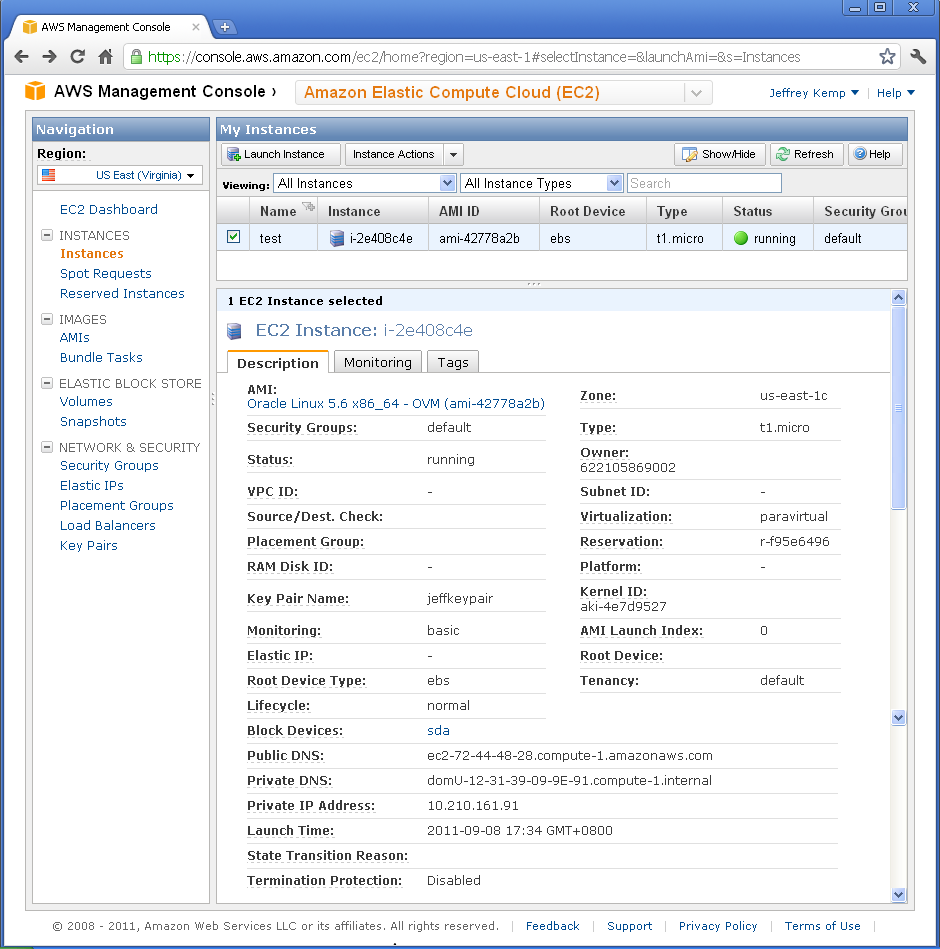

Navigate to the Amazon Elastic Compute Cloud (EC2) in the AWS Management Console.

3. Launch Instance.

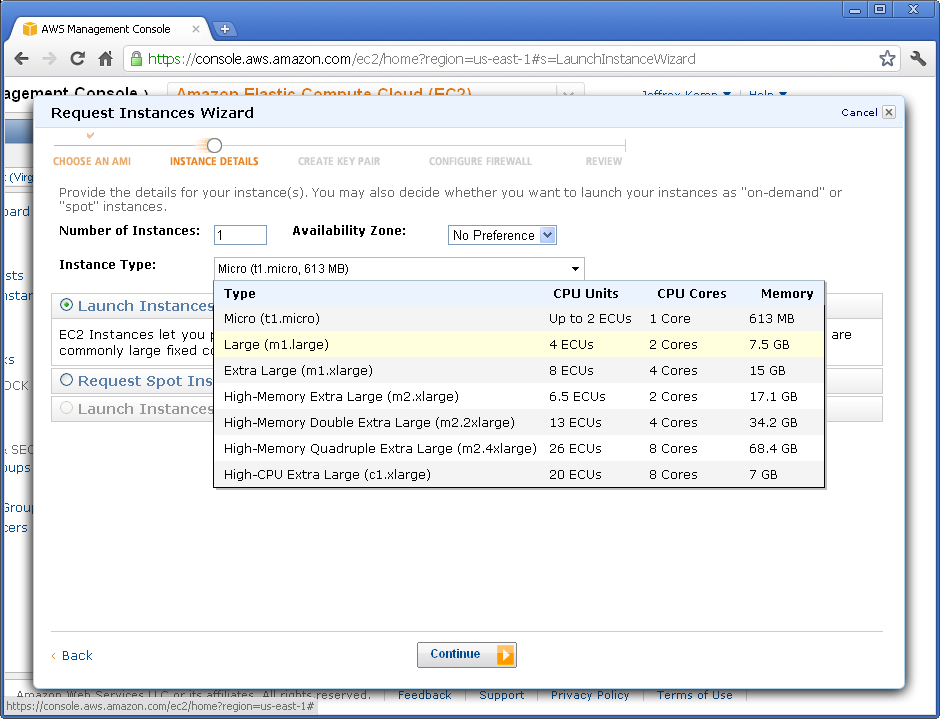

Click the “Launch Instance” button. Go to “Community AMIs“. Change the “Viewing” filter to “64-bit“. In the search field type “oracle“. You should see a long list of AMIs to choose from. What you’re looking for is an EBS-backed AMI running Oracle Enterprise Linux 5. The actual name and AMI ID will vary from region to region, but in US-East you could use “493731438004/Oracle Linux 5.6 x86_64 – OVM”, AMI ID ami-42778a2b (you could enter this ID into the search field to go directly to it), or if you’re using the Singapore region you could use AMI ID ami-bc2f56ee.

Next, you get to choose the size of the instance. The bigger the machine, the more grunt you get, but it is also slightly more expensive to run. You could choose Micro, but you might find it a bit slow at times. Of course, there’s nothing stopping you from trying one, and if you don’t like what it offers, just trade up!

On the “Advanced Instance Options” page, accept all the defaults and Continue.

On the next page, you can put a name on your instance if you like. This is just a label, and comes in handy when you run many instances.

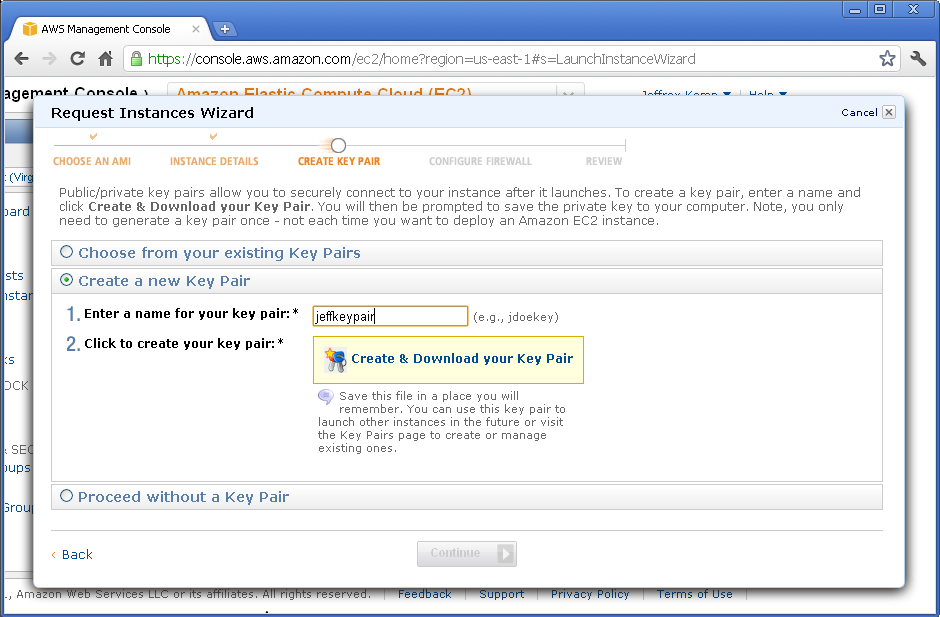

On the “Create Key Pair” page, you need to select a Key Pair. You can create one if you don’t already have one.

Enter a name for your key pair, then click “Create & Download your Key Pair“. Make sure you keep the file (it’ll be a .PEM file) in a safe place – if you lose it, you’ll lose access to your instance (in which case you’d just have to terminate it and start again).

The next page is the “Configure Firewall” step, where you select a Security Group. You could create a new one if you like – open up TCP ports 22, 8080 and 1521 so you can upload files, access the Apex web interface, and connect to the database (e.g. from SQL Developer).

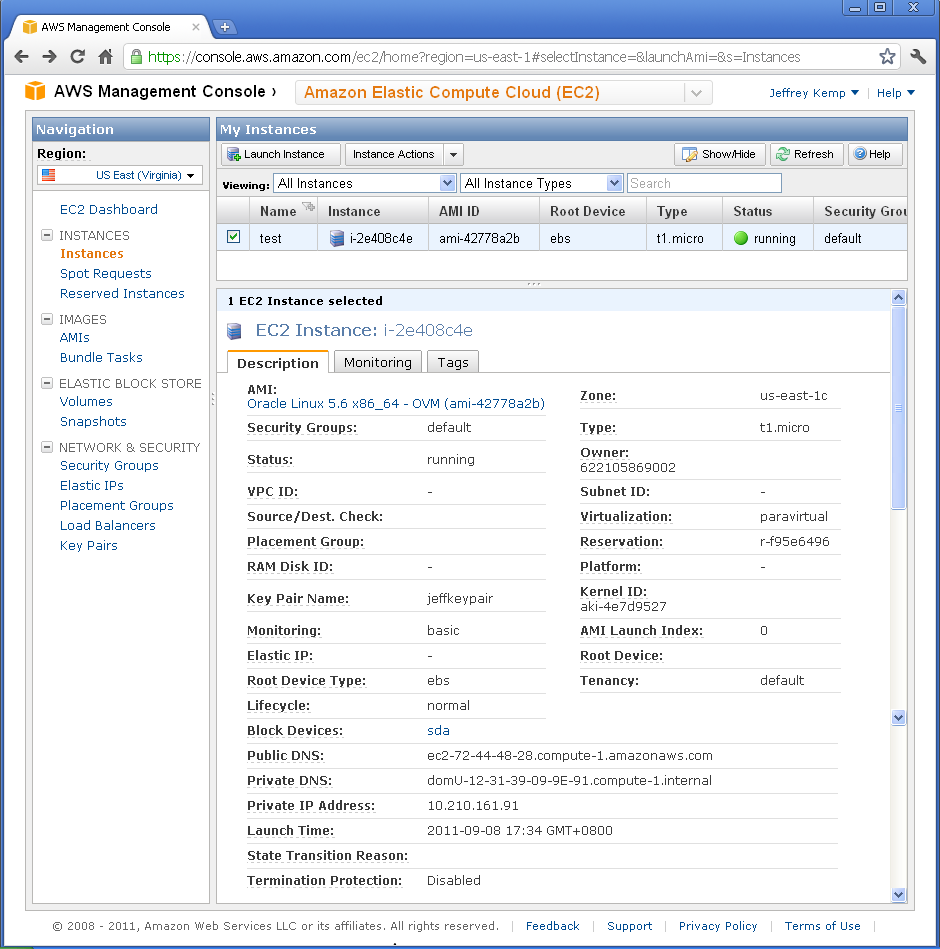

On the final page, click “Launch”. After maybe a minute or two, your instance will be up and running, ready for you to connect to it and do stuff on it.

In the instance details, you can find the following useful information:

Status: this will say “Pending”, “Running”, or some other status.

Public DNS: this is the host name you can use to connect to your instance: in my case, it is ec2-72-44-48-28.compute-1.amazonaws.com.

4. Set up your key pair.

Before you can connect to your instance with PuTTY or your SCP client, you’ll need a PPK file. You generate this from the PEM file you got before. In the folder where PuTTY is installed is a little tool called PUTTYGEN.EXE. Run this, select Conversions -> Import Key, select your PEM file, then click Save Private Key. It might warn you about a passphrase – if you want to protect the key in case someone manages to steal it then you could put one in, but personally I don’t. Save it somewhere safe as a PPK file.

5. Upload the Oracle installer to the instance.

Open WinSCP. Click New. Copy the Public DNS into Host (e.g. ec2-72-44-48-28.compute-1.amazonaws.com). Set User name to root. Leave the Password blank. Select your Private Key file (PPK). Save the settings then click Login. If you get a warning about the “server’s host key not in the cache”, just click “Yes” to continue.

If you get timeout errors, check that port 22 has been opened (and your changes saved) in your AWS Security Group.

Upload the Oracle installer (oracle-xe-11.2.0-1.0.x86_64.rpm.zip).

6. Login to your instance.

Open PuTTY. Copy the Public DNS into Host Name, and select your Private Key file (PPK) (this option is tucked away under Connection/SSH/Auth). You can save the session if you want. When you Open the connection, it will prompt for the login. Login as root.

7. Install Oracle.

If you just want to know what to type, here is basically all you need (type the commands after the “#” – don’t type the “#” itself though):

# unzip /root/oracle-xe-11.2.0-1.0.x86_64.rpm.zip

# rpm -ivh /root/Disk1/oracle-xe-11.2.0-1.0.x86_64.rpm

# /etc/init.d/oracle-xe configure

This script will prompt you for the APEX port and the database port – just press Enter to accept the defaults (8080 and 1521, respectively). It will then ask for the password for SYS and SYSTEM. Finally, it will ask you if you want it to run on startup. Accept the default (yes).

8. Disable the firewall.

# chkconfig iptables off

# service iptables stop

This disables the internal firewall running in your linux instance.

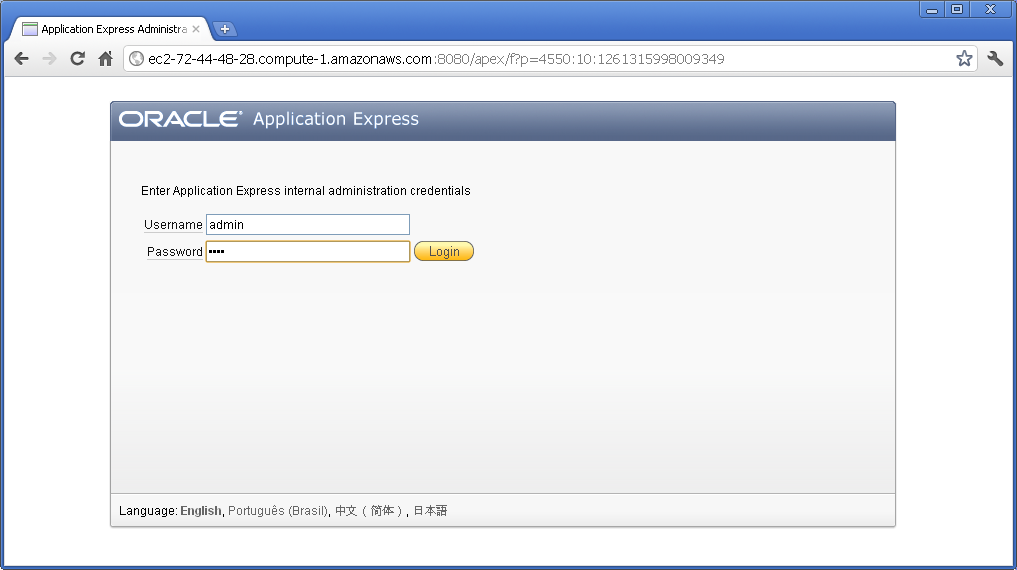

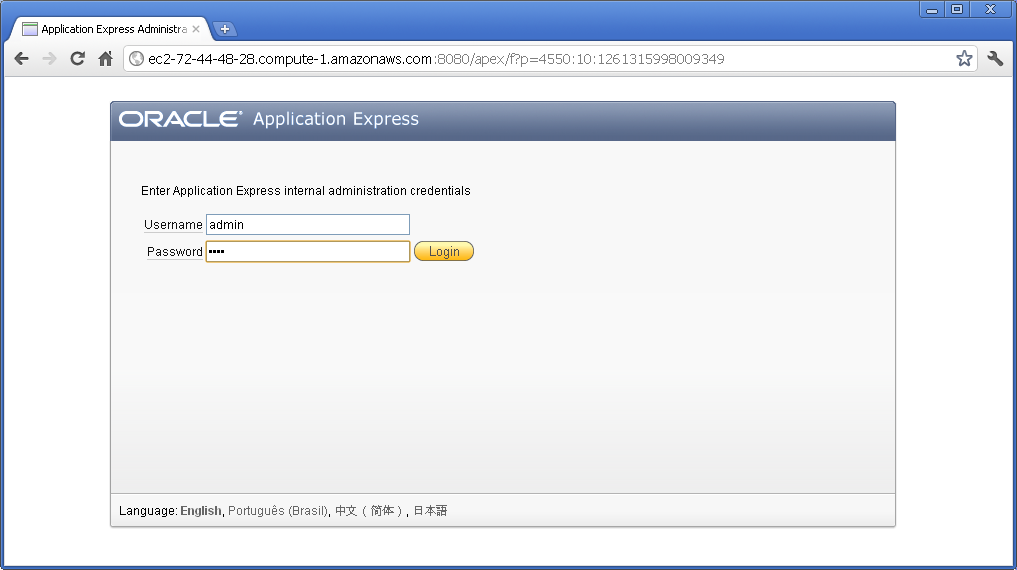

9. Log into APEX.

Open your browser and point it at your instance. Take the Public DNS and add :8080/apex/apex_admin, e.g.:

http://ec2-72-44-48-28.compute-1.amazonaws.com:8080/apex/apex_admin

The login name is admin, and the password is (for now) the same password you entered for the SYS and SYSTEM accounts earlier.

It will prompt you to change the password. It has to be fairly secure – you’ll need at least a letter, number and symbol, and you can’t include the login name. After changing your password, you’ll need to log in again but using the new password.

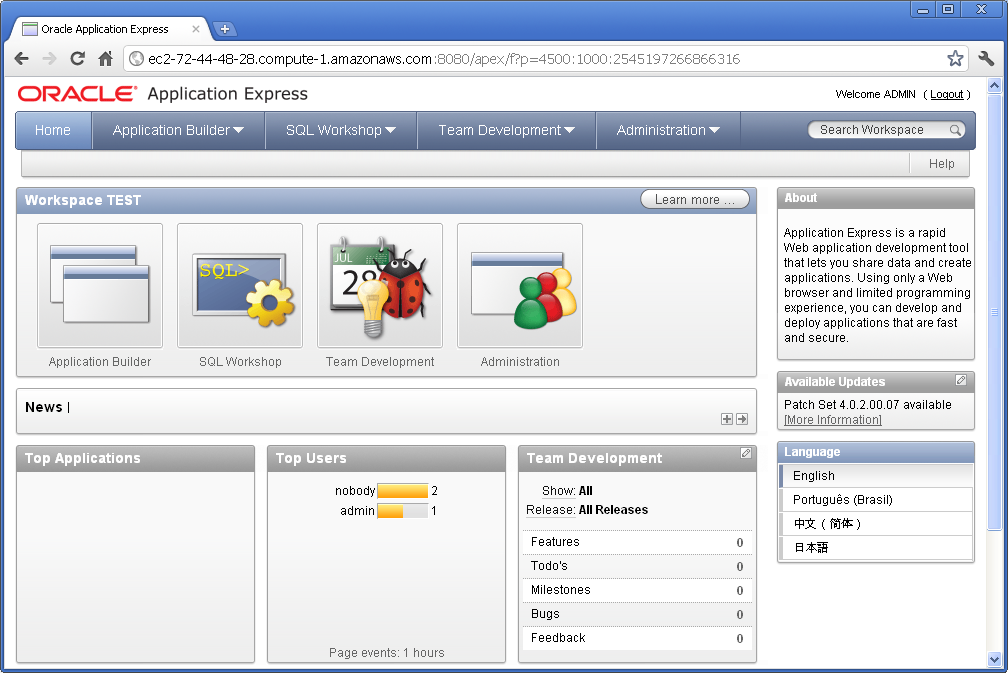

You now have a fully-fledged Oracle Application Express environment, version 4.0.

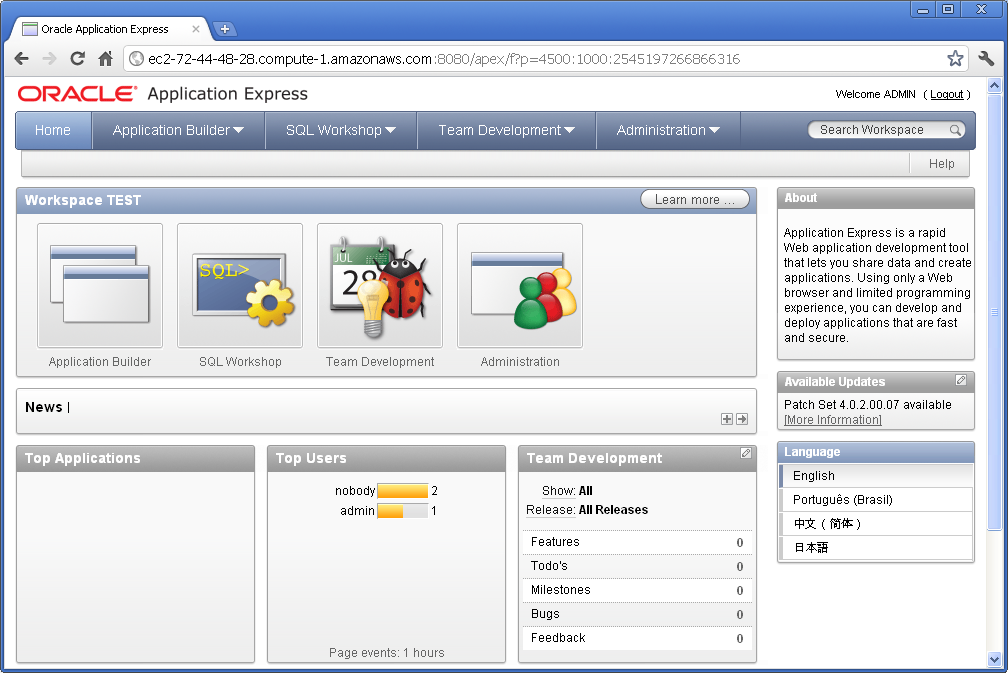

10. Create a Workspace.

The last thing you need to do before you can start creating applications and stuff is to create a workspace. Click “Create Workspace” and answer the prompts to create the workspace along with its own schema and admin user account.

Log out of APEX Administration, and log into the newly created workspace – and you will now be able to start creating applications, tables, and what-have-you. You can also use APEX to write code (such as procedures and packages), but I suggest you use Oracle SQL Developer for this kind of thing because it’s a bit more powerful.

From now on, to get back into your APEX development environment, you can use this url:

http://ec2-72-44-48-28.compute-1.amazonaws.com:8080/apex

Have fun!

Oh, by the way: when you’ve had your fun, and you want to stop your instance (which at about 34c/h may cost around $60 per week if you leave it running 24×7), don’t forget to terminate it from AWS Management Console. Before you do, however, you can take a snapshot of it in its current state so you can restore from it later. (You might want to shut down the Oracle database within the instance first – although I’ve found it quite easy to recover an instance which was snapshotted while the database was up and running.) To take the snapshot, right-click on the instance in AWS Management Console, and select “Create Image (EBS AMI)”. It may take some time for the image to be created. When the image creation is complete, you can then Terminate the instance. The AMI will take up a bit of storage that will cost a dollar or two per month, and you can now spin up as many copies of your machine as you like, whenever you want.
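For the record, here is the arithmetic behind that weekly figure as a tiny Python sketch. The 34c/h rate is just the rough number quoted above, not a current AWS price, and the function name `running_cost` is made up.

```python
def running_cost(hourly_rate_usd, hours):
    """Cost of leaving an instance running for the given number of hours."""
    return hourly_rate_usd * hours

HOURS_PER_WEEK = 24 * 7                      # 168 hours
weekly_cost = running_cost(0.34, HOURS_PER_WEEK)
print(f"${weekly_cost:.2f} per week")        # prints $57.12 per week
```

So “around $60 per week” is a slight round-up; plug in the rate for your own instance size to get your own number.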

Update:

Lew posted an excellent introduction to PuTTY and SSH for AWS Newbies that I heartily recommend. It was posted in 2009 but still applies.

For a long time my disaster recovery procedure for my Amazon EC2-based web site was:

- Find an Oracle AMI that has 10g XE with APEX pre-installed, and start up an instance with it.

- Create a volume from a backup snapshot and attach it to the new instance.

- Log into the instance, shut down apache and oracle, then delete all the oracle data files, apache config files, and a few other bits and pieces.

- Create symbolic links for the deleted bits and pieces (including the oracle data files) that point to the attached volume.

- Start up oracle and apache and test.

- Switch the elastic IP over to the new instance.

This procedure has been tested and retested multiple times, and came in useful once when I almost lost the site (actually, it just went unresponsive due to some general problems at Amazon, but at the time I thought it had gone down).

Last week I logged in to the AWS Management Console to do a routine backup-and-restore test, but discovered a problem: it couldn’t find the Oracle 10g XE AMI. Searches on the image ID and various keywords, across all the Amazon regions, returned no results. Searches on “oracle” brought back a number of options but none of them close to what I required. I enquired with Amazon and they responded that the AMIs are supplied by Oracle and had been removed. I discovered this meant that not only could I not start up an instance using one of these images, I also couldn’t point to my running instance and “start up another like this” – because this requires access to the original AMI that was used to start it.

The machine image which I was trying to find is (at least, as of today 5 Jul 2011) still referred to here: http://aws.amazon.com/amis/Oracle/1720 “Oracle Database 10g Release 2 Express Edition – 32 Bit” so I raised a question on the oracle forum (http://forums.oracle.com/forums/thread.jspa?messageID=9707298) and sent an email to Bill Hodak at Oracle who was named in the description of the AMI. He replied he would see if he could find out what had happened to it.

At this point I was hoping that my running instance wouldn’t go down, because I didn’t know if I’d be able to restore from backup. My backup consisted solely of snapshots of just the data – the rest of the OS was supposed to be provided by the AMI.

Meanwhile, asam replied to my oracle forum thread, suggesting I create my own AMI. A bit of googling yielded this result, which proved very helpful: http://webkist.wordpress.com/2010/03/16/creating-an-amazon-ec2-ebs-ami-from-a-running-instance/ “Creating an Amazon EC2 EBS AMI from a running instance”. I followed the instructions, slightly modified as follows:

- Use AWS Management Console to create a new volume

- Attach the volume to my running instance and mount it:

# mkdir /u03

# mount -t ext3 /dev/sdf /u03

- Move everything from the old volume so that it all sits under / again instead of via symbolic links

- Synchronize the filesystem to the new volume:

# rsync -a --delete --progress -x / /u03

- When rsync has completed, fix up the devices:

# MAKEDEV -d /u03/dev -x console

# MAKEDEV -d /u03/dev -x zero

# MAKEDEV -d /u03/dev -x null

- Unmount the volume:

# umount /u03

- Get the EC2 X.509 cert and private key from the “Security Credentials” area under “Account” in AWS Management Console.

- Download the Amazon EC2 API tools:

http://aws.amazon.com/developertools/351

- I needed java to run the API tools, so download the rpm:

jre-6u26-linux-i586.rpm – e.g. from http://www.oracle.com/technetwork/java/javase/downloads/jre-6u26-download-400751.html

- Upload the EC2 X.509 cert and private key, the Amazon EC2 API tools, and the java rpm to the instance. Unzip and install the API tools and the java rpm.

- Set up all the required environment variables (replace xxx with the appropriate bits from the relevant file names):

# export EC2_CERT=/root/cert-xxx.pem

# export EC2_PRIVATE_KEY=/root/pk-xxx.pem

# export EC2_HOME=(path-to-ec2-stuff)

# export JAVA_HOME=(path-to-java-stuff)

# export PATH=$PATH:$EC2_HOME/bin

- Set up a symbolic link so that the EC2 tools can find java:

# ln -s (path-to-java-stuff) /usr/bin/java

- Back in the AWS Management Console, create a snapshot of the volume.

- In the instance, run this command (this is the only command you can’t do in the management console, which is what all that rigmarole about installing the API tools was for):

# ec2-register --snapshot snap-xxx --description "my ami description" --name "my ami name" \
    --ramdisk ari-yyy --kernel aki-zzz --region ap-southeast-1

You can get the snapshot, ramdisk and kernel identifiers from the AWS Management Console. (my instance was running in Singapore, so my region is ap-southeast-1)

- Back in AWS Management Console, I see my new AMI has been created. All I have to do now is select it, click Launch Instance, and a copy of my site is up and running.

I start up the database to see if it’s working. Unfortunately it isn’t – a bit more investigation reveals that the Oracle listener is not responding to requests. lsnrctl status shows that it is still using the old internal IP address from the original instance – but this is a new instance with a different internal IP address.

To fix this, I edit listener.ora to correct the IP:

# cd /usr/lib/oracle/xe/app/oracle/product/10.2.0/server/network/admin

# chmod +w listener.ora

# vi listener.ora

The IP address is listed as the “Private IP Address” on the instance in AWS Management Console.

# lsnrctl start
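If you expect to do this more than once, the edit could be scripted instead of done by hand in vi. The sketch below assumes listener.ora contains an address entry of the usual `(HOST = ...)` form. The sample file content and both IP addresses are invented for illustration, and `set_listener_host` is a made-up helper name.

```python
import re

def set_listener_host(listener_text, new_host):
    """Replace the value of every (HOST = ...) entry in listener.ora text."""
    return re.sub(
        r"(\(\s*HOST\s*=\s*)[^)\s]+",
        # A function is used so digits in new_host are never read as
        # regex backreferences.
        lambda m: m.group(1) + new_host,
        listener_text,
        flags=re.IGNORECASE,
    )

# Hypothetical file content; the real file on your instance may differ.
sample = """LISTENER =
  (DESCRIPTION_LIST =
    (DESCRIPTION =
      (ADDRESS = (PROTOCOL = TCP)(HOST = 10.0.0.1)(PORT = 1521))
    )
  )
"""

updated = set_listener_host(sample, "10.0.0.2")
print(updated)
```

In practice you would read the real listener.ora, pass in the Private IP Address shown in AWS Management Console, and write the result back before starting the listener.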

After that, it’s all working – and very soon I will have a much simpler (and hopefully somewhat less reliant on the kindness of big corporations) disaster recovery process. I just need to work out the simplest way to restore the data from backup to the new instance. I’ll probably just create a new volume from a backup snapshot, attach it to the instance, and copy all the data across.

UPDATE: With an EBS-backed volume, I can now create a new AMI from the running instance whenever I want – it takes a complete snapshot of the instance, from which I can then create new instances. So my disaster recovery procedure is much simpler than it was before 🙂

Whereas APEX 3.2.1 (and earlier, I think) required one of:

- Microsoft Internet Explorer 6.0 or later version

- Firefox 1.0 or later

APEX 4.0 requires:

- Microsoft Internet Explorer 7.0 or later version

- Mozilla Firefox 3.5 or later version

- Google Chrome 4.0 or later version

- Apple Safari 4.0 or later version

While they’ve dropped support for IE 6, it does mention that you can still develop applications that will work perfectly well on the older version. Also, it’s good to see Oracle officially supporting more browsers, including Chrome. I’ve been using Chrome to develop APEX applications for quite a while now, and the only problem I’ve encountered is that the procedure editor wouldn’t work. I’ve tried it in APEX 4.0 using EPG and it seems to work fine – so that’s good. I doubt APEX will ever really replace SQL Developer but it’s already useful for many development and maintenance tasks.
