My current client has a large number of APEX applications, one of which is a doozy. It is a mission-critical and complex application in APEX 4.0.2 used throughout the business, with an impressively long list of features and an equally long list of enhancement requests in the queue.

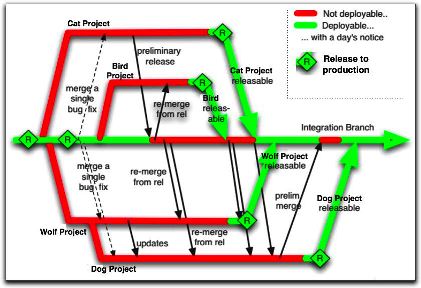

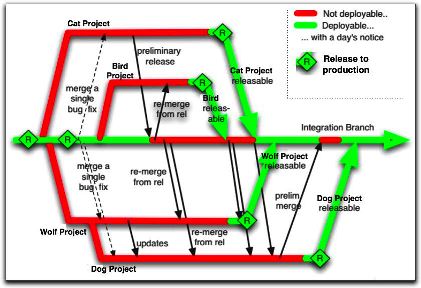

They always have a number of projects on the go with it, and they wanted us to develop two major revisions to it in parallel. In other words, we’d have v1.0 (so to speak) in Production, which still needed support and urgent defect fixing, v1.1 in Dev1 for project A, and v1.2 in Dev2 for project B. Oh, and we don’t know if Project A will go live before Project B, or vice versa. We have source control, so we should be able to branch the application and have separate teams working on each branch, right?

We said, “no way”. Trying to merge changes from a branch of an APEX app into an existing APEX app is not going to work, practically speaking. The merged script would most likely fail to run at all, or if it somehow magically runs, it’d probably break something.

So we pushed back a bit, and the terms of the project were changed so that development of project A would be done first, and the development of project B would follow straight after. So at least now we know that v1.2 can be built on top of v1.1 with no merge required. However, we still had the problem that production defect fixes would need to be done on a separate version of the application in dev, and that they needed to continue being deployed to SIT/UAT/PROD without carrying any changes from our projects.

The solution we have used is to have two copies of dev, each with its own schema, APEX application and version control folder: I’ll call them APP and APP2. We took an export of APP and created APP2, and instructed the developer who was tasked with production defect fixes to manually duplicate his changes in both APP and APP2. That way the defect fixes were “merged” in a manual fashion as we went along – also, it meant that the project development would gain the benefit of the defect fixes straight away. The downside was that everything worked and acted as if they were two completely different and separate applications, which made things tricky for integration.

Next, for developing project A and project B, we needed to be able to make changes for both projects in parallel, but we needed to be able to deploy just Project A to SIT/UAT/PROD without carrying the changes from project B with it. The solution was to use APEX’s Build Option feature (which has been around for donkey’s years but I never had a use for it until now), in combination with Conditional Compilation on the database schema.

I created a build option called (e.g.) “Project B”. I set Status = “Include”, and Default on Export = “Exclude”. What this means is that in dev, my Project B changes will be enabled, but when the app is exported for deployment to SIT etc the build option’s status will be set to “Exclude”. In fact, my changes will be included in the export script, but they just won’t be rendered in the target environments.

When we created a new page, region, item, process, condition, or dynamic action for project B, we would mark it with our build option “Project B”. If an existing element was to be removed or replaced by Project B, we would mark it as “{NOT} Project B”.

Any code on the database side that was only for project B would be switched on with conditional compilation, e.g.:

$IF $$projectB $THEN

PROCEDURE my_proc (new_param IN ...) IS...

$ELSE

PROCEDURE my_proc IS...

$END

When the code is compiled, if the projectB flag has been set (e.g. with ALTER SESSION SET PLSQL_CCFLAGS='projectB:TRUE';), the new code will be compiled.

Build Options can be applied to:

- Pages & Regions

- Items & Buttons

- Branches, Computations & Processes

- Lists & List Entries

- LOV Entries

- Navigation Bar & Breadcrumb Entries

- Shortcuts

- Tabs & Parent Tabs

This works quite well for 90% of the changes required. Unfortunately it doesn’t handle the following scenarios:

1. Changed attributes for existing APEX components – e.g. some layout changes that would re-order the items in a form cannot be isolated to a build option.

2. Templates and Authorization Schemes cannot be marked with a build option.

On the database side, it is possible to detect at runtime if a build option has been enabled or not. In our case, a lot of our code was dependent on schema structural changes (e.g. new table columns) which would not compile in the target environments anyway – so conditional compilation was a better solution.

Apart from these caveats, the use of Build Options and Conditional Compilation has made the parallel development of these two projects feasible. Not perfect, mind you – but feasible. The best part? There’s a feature in APEX that allows you to view a list of all the components that have been marked with a Build Option – this is accessible from Shared Components -> Build Options -> Utilization (tab).

Enhancement Requests:

1. If Build Options could be improved to allow the scenarios listed above, I’d be glad. In a perfect world, I should be able to go into APEX, select “Project B”, and all my changes (adding/modifying/removing items, regions, pages, LOVs, auth schemes, etc) would be marked for Project B. I could switch to “Project A”, and my changes for Project B would be hidden. I think this would require the APEX engine to be able to have multiple definitions of each item, region or page, one for each build option. Merging changes between build options would need to be made possible, somehow – I don’t hold any illusions that this would be a simple feature for the APEX team to deliver.

2. Make the items/regions/pages listed in the Utilization tab clickable, so I can easily click through and change properties on them.

3. Another thing I’d like to see from the APEX team is builtin GUI support for exporting applications as a collection of individual scripts, each independently runnable – one for each page and shared component. I’m aware there is a Java tool for this purpose, but the individual scripts it generates cannot be run on their own. For example, if I export a page, I should be able to import that page into another copy of the same application (but with a different application ID) to replace the existing version of that page. I should be able to check in a change to an authorization scheme or an LOV or a template, and deploy just the script for that component to other applications, even in other workspaces. The export feature for all this should be available and supported using a PL/SQL API so that we can automate the whole thing and integrate it with our version control and deployment software.

4. What would be really cool, would be if the export scripts from APEX were structured in such a way that existing source code merge tools could merge different versions of the same APEX script and result in a usable APEX script. This already works quite well for our schema scripts (table scripts, views, packages, etc), so why not?

An enhancement request I was assigned was worded thus:

“User will optionally enter the Phone number (IF the phone was blank the system will default the store’s area code).”

I interpret this to mean that the Customer Phone number (land line) field should remain optional, but if entered, it should check if the local area code had been entered, and if not, default it according to the local store’s area code. We can assume that the area code has already been entered if the phone number starts with a zero (0).

This is for a retail chain with stores throughout Australia and New Zealand, and the Apex session knows the operator’s store ID. I can look up the country code and phone number for their store with a simple query, which will return values such as (these are just made up examples):

Country AU, Phone: +61 8 9123 4567 – area code should be 08

Country AU, Phone: 08 91234567 – area code should be 08

Country AU, Phone: +61 2 12345678 – area code should be 02

Country AU, Phone: 0408 123 456 – no landline area code

Country NZ, Phone: +64 3 123456 – area code should be 03

Country NZ, Phone: 0423 456 121 – area code should be 04

They only want to default the area code for landlines, so if the store’s phone number happens to be a mobile phone number it should not do any defaulting.

Step 1: create a database function (in a database package, natch) to return the landline area code for any given store ID.

FUNCTION get_store_landline_area_code (p_store_id IN VARCHAR2) RETURN VARCHAR2 IS

v_area_code VARCHAR2(2);

v_country_code stores_vw.country_code%TYPE;

v_telephone_number stores_vw.telephone_number%TYPE;

BEGIN

IF p_store_id IS NOT NULL THEN

BEGIN

SELECT country_code

,telephone_number

INTO v_country_code

,v_telephone_number

FROM stores_vw

WHERE store_id = p_store_id;

v_area_code

:= CASE

-- Australian International land line

WHEN v_country_code = 'AU'

AND REGEXP_LIKE(v_telephone_number, '^\+61( ?)[2378]')

--e.g. +61 8 9752 6100

THEN '0' || SUBSTR(REPLACE(v_telephone_number,' '), 4, 1)

-- Australian Local land line

WHEN v_country_code = 'AU'

AND REGEXP_LIKE(v_telephone_number, '^0[2378]')

THEN SUBSTR(v_telephone_number, 1, 2)

-- New Zealand International land line

WHEN v_country_code = 'NZ'

AND REGEXP_LIKE(v_telephone_number, '^\+64( ?)[34679]')

-- e.g. +64 3 1234 567

THEN '0' || SUBSTR(REPLACE(v_telephone_number,' '), 4, 1)

-- New Zealand Local land line

WHEN v_country_code = 'NZ'

AND REGEXP_LIKE(v_telephone_number, '^0[34679]')

THEN SUBSTR(v_telephone_number, 1, 2)

ELSE

NULL

END;

EXCEPTION

WHEN NO_DATA_FOUND OR TOO_MANY_ROWS THEN

NULL;

END;

END IF;

RETURN v_area_code;

END get_store_landline_area_code;

Phone number references:

http://en.wikipedia.org/wiki/Telephone_numbers_in_Australia

http://en.wikipedia.org/wiki/Telephone_numbers_in_New_Zealand
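To check the rules against the made-up examples listed earlier without a database, here is a minimal JavaScript sketch of the same pattern matching. The name landlineAreaCode is hypothetical and is not part of the package; the sketch takes the country code and phone number directly instead of looking them up by store ID.

```javascript
// Sketch only: mirrors the regular-expression rules of the PL/SQL function.
// landlineAreaCode is a hypothetical name used for illustration.
function landlineAreaCode(countryCode, telephoneNumber) {
  if (!countryCode || !telephoneNumber) {
    return null;
  }
  // Leading digits that identify a landline area code in each country
  const rules = {
    AU: { international: /^\+61 ?[2378]/, local: /^0[2378]/ },
    NZ: { international: /^\+64 ?[34679]/, local: /^0[34679]/ },
  };
  const rule = rules[countryCode];
  if (!rule) {
    return null;
  }
  if (rule.international.test(telephoneNumber)) {
    // With spaces removed, the 4th character (index 3) is the area digit,
    // e.g. "+61891234567" gives "8", which becomes "08"
    const compact = telephoneNumber.replace(/ /g, "");
    return "0" + compact.charAt(3);
  }
  if (rule.local.test(telephoneNumber)) {
    return telephoneNumber.substring(0, 2);
  }
  // Mobile numbers and anything unrecognised get no default
  return null;
}

console.log(landlineAreaCode("AU", "+61 8 9123 4567"));
console.log(landlineAreaCode("AU", "0408 123 456"));
```

Returning null for anything that is not recognised as a landline matches the requirement that a store with a mobile number gets no defaulting.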

Step 2: add a Dynamic Action to prepend the area code to the phone number, if it wasn’t entered already:

Event: Change

Selection Type: Item(s)

Item(s): P1_CUSTOMER_PHONE_NUMBER

Condition: Javascript expression

Value: $v("P1_CUSTOMER_PHONE_NUMBER").length > 0 && $v("P1_CUSTOMER_PHONE_NUMBER").charAt(0) != "0"

True Action: Set Value

Set Type: PL/SQL Expression

PL/SQL Expression: my_util_pkg.get_store_landline_area_code(:F_USER_STORE_ID) || :P1_CUSTOMER_PHONE_NUMBER

Now, when the user types in a local land line but forgets the prefix, the system will automatically add it in as soon as they tab out of the field. If the phone number field is unchanged, or is left blank, this will do nothing.

It assumes that the customer’s phone number uses the same prefix as the store, which in most cases will be true. Ultimately the user will still need to check that the phone number is correct for the customer.
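The combined effect of the condition and the Set Value action can be sketched as one plain JavaScript function. This is only an illustration: withDefaultAreaCode is a hypothetical name, and in the real page the area code comes from the PL/SQL expression, not from a JavaScript argument.

```javascript
// Sketch only: withDefaultAreaCode is a hypothetical helper that combines the
// Dynamic Action's JavaScript condition with its Set Value step.
function withDefaultAreaCode(phone, storeAreaCode) {
  // Same test as the condition: something was entered and it has no leading zero
  const needsPrefix = phone.length > 0 && phone.charAt(0) !== "0";
  if (!needsPrefix) {
    return phone;
  }
  // A store without a landline area code (null) leaves the number as typed,
  // just as NULL || value does in PL/SQL
  return (storeAreaCode || "") + phone;
}

console.log(withDefaultAreaCode("91234567", "08"));
console.log(withDefaultAreaCode("0891234567", "08"));
```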

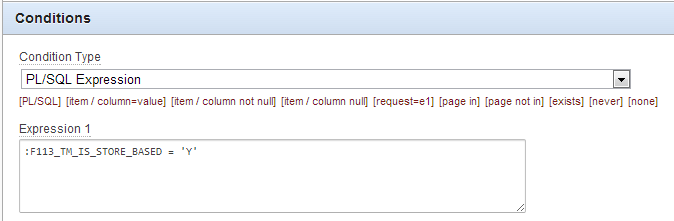

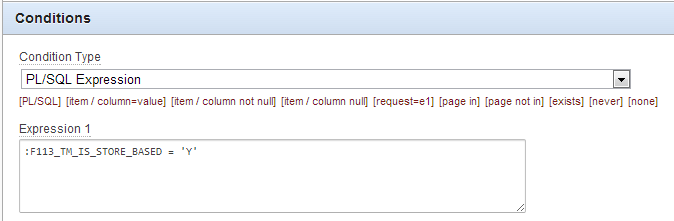

Just a little tip I picked up at the InSync13 conference from listening to Scott Wesley. If you have a lot of conditions that look like this:

(conditions based on a PL/SQL Expression, where the PL/SQL itself doesn’t actually call anything outside of APEX – it’s only dependent on variables that Apex already knows)

Because it’s a PL/SQL expression, the APEX engine must execute this as dynamic PL/SQL – requiring a parse/execute/fetch. This might take maybe 0.03 seconds or so. If there’s only one condition like this on a page, it won’t make any difference. But if there are 50 conditions on a page, it can make a difference to the overall page performance – adding up to 1 whole second or more to the page request, which can be noticeable.

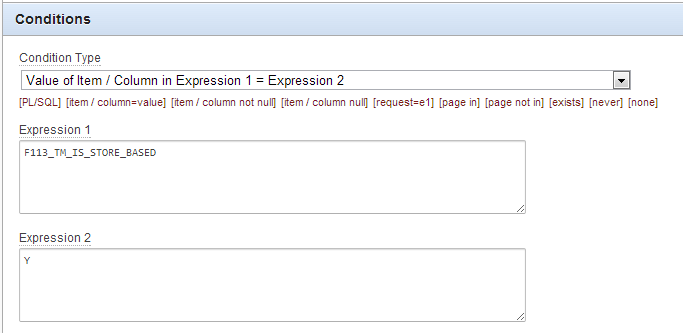

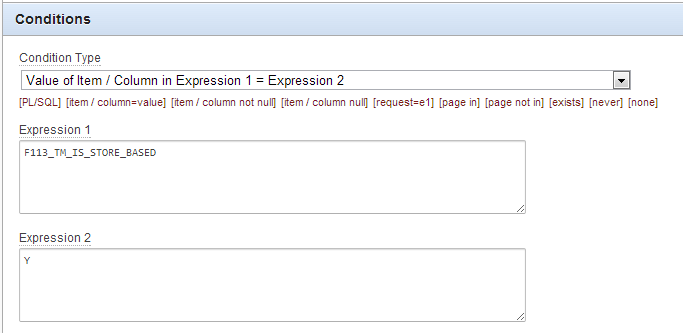

The better alternative is to use the condition type Value of Item / Column in Expression 1 = Expression 2, e.g.:

This condition type requires no dynamic PL/SQL – no parsing – which can reduce the time required to an almost negligible amount.

I love the APEX UI, it makes development so much easier and more convenient – and makes it easy to impress clients when I can quickly fix issues right there and then, using nothing but their computer and their browser, no additional software tools needed.

However, at my main client they have a fairly strict “scripted releases only” policy which is a really excellent policy – deployments must always be provided as a script to run on the command line. This makes for fewer errors and a little less work for the person who runs the deployment.

In APEX it’s easy to create deployment scripts that will run right in SQL*Plus. You can export a workspace, an application, images, etc. as scripts that will run in SQL*Plus with almost no problem. There are just a few little things to be aware of, and that’s the subject of this post.

1. Setting the session workspace

Normally if you log into APEX and import an application export script, it will be imported without problem. Also, if you log into SQL*Plus and try to run it, it will work fine as well.

The only difference comes if you want to deploy it into a different workspace ID to the one the application was exported from – e.g. if you want to have two workspaces on one database, one for dev and one for test. When you log into your test schema and try to run the script, you’ll see something like this:

SQL> @f118.sql

APPLICATION 118 - My Wonderful App

Set Credentials...

Check Compatibility...

Set Application ID...

begin

*

ERROR at line 1:

ORA-20001: Package variable g_security_group_id must be set.

ORA-06512: at "APEX_040100.WWV_FLOW_API", line 73

ORA-06512: at "APEX_040100.WWV_FLOW_API", line 342

ORA-06512: at line 4

Side note: if you’re using Windows, the SQL*Plus window will disappear too quickly for you to see the error (as the generated apex script sets it to exit on error) – so you should SPOOL to a log file to see the output.

To fix this issue, you need to run a little bit of PL/SQL before you run the export, to override the workspace ID that the script should use:

declare

v_workspace_id NUMBER;

begin

select workspace_id into v_workspace_id

from apex_workspaces where workspace = 'TESTWORKSPACE';

apex_application_install.set_workspace_id (v_workspace_id);

apex_util.set_security_group_id

(p_security_group_id => apex_application_install.get_workspace_id);

apex_application_install.set_schema('TESTSCHEMA');

apex_application_install.set_application_id(119);

apex_application_install.generate_offset;

apex_application_install.set_application_alias('TESTAPP');

end;

/

This will tell the APEX installer to use a different workspace – and a different schema, application ID and alias as well, since 118 already exists on this server. If your app doesn’t have an alias you can omit that last step. Since we’re changing the application ID, we need to get all the other IDs (e.g. item and button internal IDs) throughout the application changed as well, so we call generate_offset which makes sure they won’t conflict.

2. Installing Images

This is easy. Same remarks apply as above if you’re installing the image script into a different workspace.

3. Installing CSS Files

If you export your CSS files using the APEX export facility, these will work just as well as the above, and the same considerations apply if you’re installing into a different workspace.

If you created your CSS export file manually using Shared Components -> Cascading Style Sheets and clicking on your stylesheet and clicking “Display Create File Script“, you will find it doesn’t quite work as well as you might expect. It does work, except that the file doesn’t include a COMMIT at the end. Which normally wouldn’t be much of a problem, until you discover late that the person deploying your scripts didn’t know they should issue a commit (which, of course, would have merely meant the file wasn’t imported) – and they didn’t actually close their session straight away either, but just left it open on their desktop while they went to lunch or a meeting or something.

This meant that when I sent the test team onto the system, the application looked a little “strange”, and all the text was black instead of the pretty colours they’d asked for – because the CSS file wasn’t found. And when I tried to fix this by attempting to re-import the CSS, my session hung (should that be “hanged”? or “became hung”?) – because the deployment person’s session was still holding the relevant locks. Eventually they committed their session and closed it, and the autocommit nature of SQL*Plus ended up fixing the issue magically for us anyway. Which made things interesting the next day as I was trying to work out what had gone wrong, when the system was now working fine, as if innocently saying to me, “what problem?”.

4. A little bug with Data Load tables

We’re on APEX 4.1.1. If your application has a CSV import function that uses APEX’s Data Loading feature, and you export the application from one schema and import it into another, you’ll find that the Data Load will simply not work, because the export incorrectly hardcodes the owner of the data load table in the call to create_load_table. This bug is described here: http://community.oracle.com/message/10309103?#10307103 and apparently there’s a patch for it.

wwv_flow_api.create_load_table(

p_id =>4846012021772170+ wwv_flow_api.g_id_offset,

p_flow_id => wwv_flow.g_flow_id,

p_name =>'IMPORT_TABLE',

p_owner =>'MYSCHEMA',

p_table_name =>'IMPORT_TABLE',

p_unique_column_1 =>'ID',

p_is_uk1_case_sensitive =>'Y',

p_unique_column_2 =>'',

p_is_uk2_case_sensitive =>'N',

p_unique_column_3 =>'',

p_is_uk3_case_sensitive =>'N',

p_wizard_page_ids =>'',

p_comments =>'');

The workaround I’ve been using is, before importing into a different schema, I just edit the application script to fix the p_owner in the calls to wwv_flow_api.create_load_table.

5. Automating the Export

I don’t know if this is improved in later versions, but at the moment you can only export Applications using the provided API – no other objects (such as images or CSS files). Just a sample bit of code (you’ll need to put other bits around this to do what you want with the CLOB – e.g. my script spits it out via serveroutput so that SQL*Plus will write it to a sql file):

l_clob := WWV_FLOW_UTILITIES.export_application_to_clob

(p_application_id => &APP_ID.

,p_export_ir_public_reports => 'Y'

,p_export_ir_private_reports => 'Y'

,p_export_ir_notifications => 'Y'

);

That’s all my tips for scripting APEX deployments for now. If I encounter any more I’ll add them here.

EDIT:

Related: “What’s the Difference” – comparing exports to find diffs on an APEX application – http://blog.sydoracle.com/2011/11/whats-difference.html

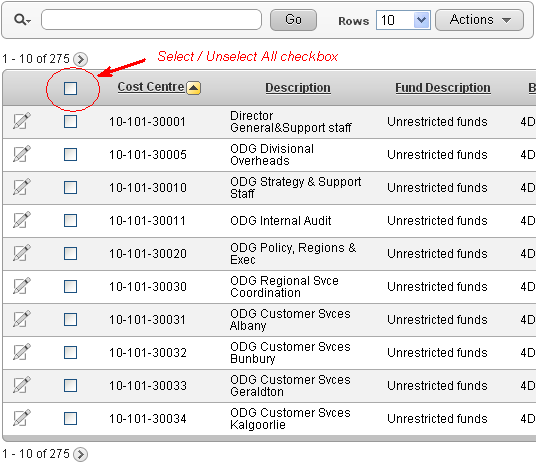

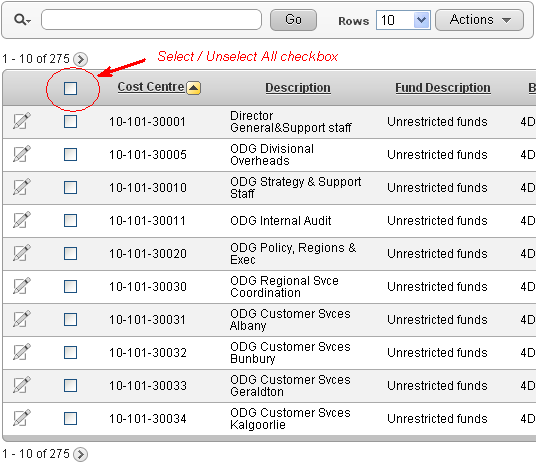

I want a checkbox in an Interactive Report (IR), and I want the users to be able to quickly Select All or Unselect All of them (but only for rows that were rendered on the page). I don’t want two big clunky buttons to do this, I just want a single checkbox in the header like I see on cool people’s web sites.

To do this:

1. In the Region Definition for the IR, add the checkbox to the query, e.g.:

SELECT ...,

apex_item.checkbox(1, record_id) selected

FROM ...

Also, set the region Static ID to some value, e.g. myreport. This will be referred to by the dynamic action.

2. In the Report Attributes for the IR, modify the attributes of column “SELECTED”:

Heading =

<input type="checkbox" id="selectunselectall">

Escape Special Characters = No

Enable Users To = (uncheck all options, including Hide, Sort, etc.)

3. In the page definition, add a Dynamic Action:

Event = Change

Selection Type = jQuery Selector

jQuery Selector = #selectunselectall

Event Scope = Dynamic

Static Container (jQuery Selector) = #myreport

True Action = Execute JavaScript Code

Fire On Page Load = (uncheck)

Code =

if ($('#myreport #selectunselectall' ).is(':checked') ) {

$('#myreport input[type=checkbox][name=f01]').prop('checked',true);

} else {

$('#myreport input[type=checkbox][name=f01]').prop('checked',false);

}

The only issue with this is if the user clicks “Action” and “Select Columns”, the checkbox item shows the HTML code of its heading (<input type="checkbox" id="selectunselectall">) instead of a readable name.

UPDATE 18/5/2017: updated for multiple IRs on same page (APEX 5+)

UPDATE 23/5/2017: updated to recommended prop instead of attr

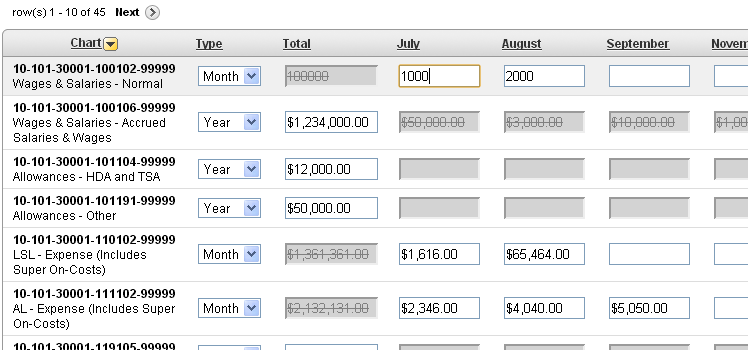

I want to visually enable/disable certain items in each row of a tabular form depending on the value of another item in that row. I’m using APEX 4.1.

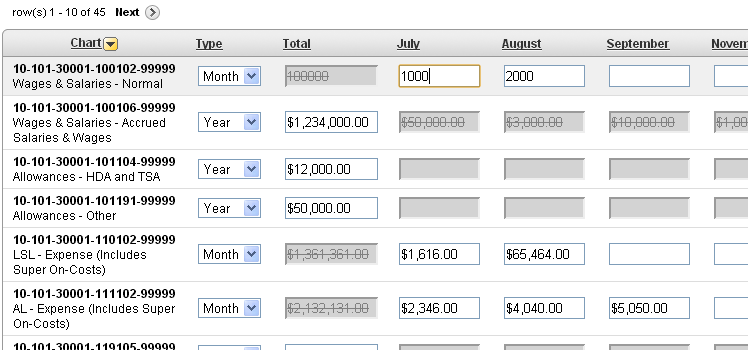

My tabular form has a number of editable fields representing budgets. Each line might be an Annual budget (AMOUNT_TYPE = ‘YEAR’) with a single amount for the year, or a Monthly budget (AMOUNT_TYPE = ‘MONTH’) with separate amounts for each of the twelve months.

The first editable item (internal id f02) is AMOUNT_TYPE which is a Select List with an LOV. The second editable item (internal id f03) is the Annual Amount and should only be enabled if AMOUNT_TYPE = ‘YEAR’. The 3rd, 4th … 14th items (internal ids f04..f15) are the Monthly Amounts and should only be enabled if AMOUNT_TYPE = ‘MONTH’.

To do this:

1. Define a visual style to be applied to items that are disabled.

Add this to the Page’s “HTML Header” attribute:

<style>

.textinputdisabled {

color:grey;

background-color:lightgrey;

text-decoration:line-through;

}

</style>

In this instance, I’ve set the background color to a light grey, the text color to darker grey, and I’ve added a strikethrough effect.

2. Set the class on the AMOUNT_TYPE item

Edit the Column Attributes on the AMOUNT_TYPE column, set Element Attributes to:

class="typeselect"

3. Define the Dynamic Action

Event = Change

Selection Type = jQuery Selector

jQuery Selector = .typeselect

Condition = – No Condition –

True Action = Execute JavaScript Code

Fire On Page Load = yes

Code =

// Row suffix of the triggering select list, e.g. "0003" from "f02_0003"

var row_id = $(this.triggeringElement ).attr('id').substr(4);

if( $(this.triggeringElement ).val() == 'MONTH')

{

// Monthly budget: lock the Annual amount, unlock the twelve Monthly amounts

$( "#f03_" + row_id ).prop( 'readOnly', 'readonly');

$( "#f03_" + row_id ).prop( 'class', 'textinputdisabled');

for (var i=4;i<16;i++)

{

var column_id = ("0" + i).slice(-2);

$( "#f" + column_id + "_" + row_id ).prop( 'readOnly', false);

$( "#f" + column_id + "_" + row_id ).prop( 'class', false);

}

}

else

{

// Annual budget: unlock the Annual amount, lock the twelve Monthly amounts

$( "#f03_" + row_id ).prop( 'readOnly', false);

$( "#f03_" + row_id ).prop( 'class', false);

for (var i=4;i<16;i++)

{

var column_id = ("0" + i).slice(-2);

$( "#f" + column_id + "_" + row_id ).prop( 'readOnly', 'readonly');

$( "#f" + column_id + "_" + row_id ).prop( 'class', 'textinputdisabled');

}

}

The above code first determines the id for the row; $(this.triggeringElement).attr('id') returns 'f02_nnnn' where nnnn is the row number left-padded with zeroes. For Oracle peeps, substr(4) is equivalent to SUBSTR(x,5).

If the value of the triggering item is MONTH, we want to disable the Annual amount item and re-enable (in case they were previously disabled) the Month amount items. And vice-versa.

To disable an item, we set the readOnly property (note the capital O: this is case sensitive!) to the value “readonly” (all lowercase); this makes it so that the user cannot modify the value in the field. Note that we don’t set the “disabled” property because that would stop the item being posted to the database, which will break the tabular form processing.

Side Note: at first, I was using the .attr and .removeAttr jquery functions to set/unset readOnly as per some notes I’d found on the web; this worked for Chrome, but it made all the items permanently read-only in IE7; after some googling I found this is a feature, not a bug; and that .prop is the correct function to use in this instance.

We also set the class to the CSS style we defined earlier.

Because I have 12 items in a row to modify, I use a Javascript loop. The expression to generate the column id ("0" + i).slice(-2) does the same job as the Oracle expression TO_CHAR(i, 'fm00').
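The two string expressions are easy to try outside the page. Below is a small sketch with no jQuery required; rowIdOf, columnIdOf and monthlyItemIds are hypothetical helper names used only for illustration, and slice(4) stands in for substr(4) since both drop the first four characters.

```javascript
// Sketch only: the helper names are hypothetical, not part of the page code.

// "f02_0003" -> "0003" (drop the 4-character "f02_" prefix)
function rowIdOf(elementId) {
  return elementId.slice(4);
}

// 4 -> "04", 15 -> "15" (same job as TO_CHAR(i, 'fm00') for 1..99)
function columnIdOf(i) {
  return ("0" + i).slice(-2);
}

// Ids of the twelve Monthly Amount items (f04..f15) in one row
function monthlyItemIds(rowId) {
  const ids = [];
  for (let i = 4; i < 16; i++) {
    ids.push("f" + columnIdOf(i) + "_" + rowId);
  }
  return ids;
}

console.log(monthlyItemIds(rowIdOf("f02_0003")));
```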

Next, I want to enhance this page further, so that when the user is entering monthly amounts, the Total field automatically calculates the sum of all the months (while still saving the original annual amount, if any, on the database). I had to get outside help [stackoverflow] to get this working.
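The core of that calculation is just adding up the twelve field values. Here is a minimal sketch of it, which is not the Stack Overflow solution itself: sumMonthlyAmounts is a hypothetical name, and treating blank or non-numeric fields as zero and stripping thousands separators are my assumptions about how the amounts are entered.

```javascript
// Sketch only: sumMonthlyAmounts is a hypothetical helper. It assumes the
// values arrive as strings read from the monthly input fields.
function sumMonthlyAmounts(values) {
  return values.reduce(function (total, raw) {
    // Remove thousands separators, then parse; blanks and junk count as zero
    const amount = parseFloat(String(raw).replace(/,/g, ""));
    return total + (isNaN(amount) ? 0 : amount);
  }, 0);
}

console.log(sumMonthlyAmounts(["100", "200", "", "50.5"]));
```

In the page, the values array would be built from the f04..f15 items of the row that triggered the change.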

UPDATE (31/7/2015): to make this work if the tabular form has an “Add Row” button, you need to use a jQuery “on” event handler instead of using the Dynamic Action, and refer to the item using just “this” instead of “this.triggeringElement”, e.g. put this into the page’s Execute when Page Loads:

$(document).on("change", ".typeselect", function(){

var row_id = $(this).attr('id').substr(4);

... etc. ...

});

When you deploy a procedure, function or package that has a compilation error, the object is still created, and you can still apply grants on it. This is convenient when deploying a large number of objects, meaning you don’t have to get them all in the right order. After deploying your schema, you can just recompile the invalid objects.

Unfortunately, this doesn’t work for views. Normally, if you create a view with compilation errors, the view will not be created at all. For a deployment script, however, you can use CREATE FORCE VIEW, which means the view will be created anyway (but marked invalid).

Let’s say you have a view that depends on a table that doesn’t exist yet – and won’t exist until much later in your deployment scripts. So you create the view with the FORCE option – success. Then, you apply the GRANTs for the view, and get this:

ORA-04063: view "x" has errors

Why? If you try to grant on a procedure, function or package that has errors, it works fine. For views, apparently, this is not allowed.

Obviously, to solve this you might do the hard work and reorder your deployment scripts so that they create every object in the perfectly correct order, avoiding compilation errors entirely. If you have a large number of objects to deploy, this might be more trouble than you want. Well, there is a workaround:

1. Create the view, minus the bit that causes a compilation error.

2. Apply the grant.

3. Recreate the view, compilation error and all. The grant will remain.

Why might this be useful? In my case, we have two databases connected by database links (on both sides); and we need to deploy a large number of objects to both instances. They are managed by different teams, so we want to be able to deploy the changes to each independently. For the most part, the objects on “our side” compile fine, except for some views that refer to objects on the other side of the database link; but they won’t exist until the other team deploys their changes. We could even have a chicken-and-egg problem, when their views refer to objects on our instance; either way, some of the objects cannot be created error-free until both deployments have been completed.

As it stands, we have two options: deploy everything as best we can, then afterwards (when both deployments have completed), recompile the invalid objects and apply the view grants. An alternative is to use this workaround.

Demonstration

TEST CASE #1: cannot grant on a view with errors

SQL> create or replace force view testview as

select 1 as col from bla;

Warning: View created with compilation errors.

SQL> grant select on testview to someone;

ORA-04063: view "USER.TESTVIEW" has errors

SQL> select grantee, privilege from user_tab_privs

where table_name = 'TESTVIEW';

no rows selected

TEST CASE #2: grant on a view with errors

SQL> create or replace view testview as

select 1 as col from dual;

View created.

SQL> grant select on testview to someone;

Grant succeeded.

SQL> create or replace force view testview as

select 1 as col from bla;

Warning: View created with compilation errors.

SQL> select grantee, privilege from user_tab_privs

where table_name = 'TESTVIEW';

GRANTEE PRIVILEGE

======= =========

SOMEONE SELECT

The above has been tested on Oracle 10gR2 and 11gR2. Does this mean that Oracle should not really raise ORA-04063 in this case? I think so.

If you have a table that represents time-varying info, e.g. with From and To date/time columns, you have a few options with regards to the problem of overlapping dates:

1. Check for overlapping dates in the application layer.

2. Use an off-the-shelf product to generate the appropriate triggers, e.g. Oracle CDM*RuleFrame or Toon Koppelaars’ RuleGen.

3. Roll your own, in the database.

4. Use a different data model that can use a unique constraint.

5. Forget about it.

One reason it’s difficult is that this is an example of a cross-row constraint, i.e. one that cannot merely be checked for the current row by itself. Oracle supports a few cross-row constraints, i.e. Primary Key, Unique and Foreign Key constraints; but it doesn’t natively support arbitrary assertions, which would allow us to easily declare this sort of constraint.

The real challenge comes from the fact that Oracle is a multi-user system and concurrent sessions cannot see the uncommitted data from other sessions; so some form of serialization will be required to ensure that when one session wishes to insert/update the data for a particular entity, no other session is allowed to start working on the same entity until the first session commits (or issues a rollback).

The problem is not new; it’s been around for a long time, and has tripped up many a new (and old) programmer.

There are two problems with option #1 (code in the application layer): firstly, you have to repeat the code for each different type of client (e.g. you might have a Java UI on one side, as well as some batch script somewhere else); secondly, usually the programmer involved will not understand the concurrency problem mentioned above and will not take it into account.

Option #2 is probably the best, most of the time. The solution is implemented at the database level, and is more likely to work correctly and efficiently.

Option #4 (change the data model) involves not storing the From and To dates, but instead dividing up all time ranges into discrete chunks, and each row represents a single chunk of time. This solution is valid if the desired booking ranges are at predictable discrete ranges, e.g. daily. You can then use an ordinary unique constraint to ensure that each chunk of time is only booked by one entity at any one time. This is the solution described here.
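To illustrate the idea behind Option #4 outside the database, here is a minimal JavaScript sketch in which a Set stands in for the unique constraint. Everything in it is an assumption for illustration: the bookDays name, the daily chunk size, and the room-plus-day key format.

```javascript
// Sketch only: a Set of "room|day" keys plays the role of a unique
// constraint on (room_no, booking_day). bookDays is a hypothetical helper.
function bookDays(bookedDays, roomNo, days) {
  const keys = days.map(function (day) {
    return roomNo + "|" + day;
  });
  // Reject the whole booking if any chunk is already taken
  const clash = keys.find(function (key) {
    return bookedDays.has(key);
  });
  if (clash !== undefined) {
    throw new Error(
      "Room " + roomNo + " is already booked on " + clash.split("|")[1]
    );
  }
  keys.forEach(function (key) {
    bookedDays.add(key);
  });
}

const booked = new Set();
bookDays(booked, 101, ["2024-01-01", "2024-01-02"]);
try {
  bookDays(booked, 101, ["2024-01-02", "2024-01-03"]);
} catch (e) {
  console.log(e.message);
}
```

Because each chunk is a separate key, no range comparison is needed at all; the cost is one entry per chunk, which is why this only suits predictable discrete ranges.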

Option #5 (forget about it) is also a viable option, in my opinion. Basically it entails designing the rest of the application around the fact that overlapping date ranges might exist in the table – e.g. a report might simply merge the date ranges together prior to output.

Option #3, where you implement the triggers yourself on the database, has the same advantage as Option #2, where it doesn’t matter which application the data is coming from, the constraint will hold true. However, you have to be really careful because it’s much easier to get it wrong than it is to get right, due to concurrency.

I hear you scoffing, “Triggers?!?”. I won’t comment except to refer you to this opinion, which I couldn’t have put better myself: The fourth use-case for Triggers.

There is another Option #3 using a materialized view instead of triggers; I’ll describe this alternative at the end of this post.

So, here is a small example showing how an overlapping date constraint may be implemented. It is intentionally simple to illustrate the approach: it assumes that the From and To dates cannot be NULL, and its rule for detecting overlapping dates requires that the dates not overlap at all, to the nearest second.

- Create the tables

    CREATE TABLE room
    (room_no NUMBER NOT NULL
    ,CONSTRAINT room_pk PRIMARY KEY (room_no)
    );

    CREATE TABLE room_booking
    (room_no     NUMBER NOT NULL
    ,booked_from DATE   NOT NULL
    ,booked_to   DATE   NOT NULL
    ,CONSTRAINT room_booking_pk
       PRIMARY KEY (room_no, booked_from)
    ,CONSTRAINT room_booking_fk
       FOREIGN KEY (room_no) REFERENCES room (room_no)
    );

- Create the validation trigger (note – I’ve used an Oracle 11g compound trigger here, but it could easily be rewritten for earlier versions by using two triggers + a database package):

    CREATE OR REPLACE TRIGGER room_booking_trg
      FOR INSERT OR UPDATE OF room_no, booked_from, booked_to
      ON room_booking
      COMPOUND TRIGGER

      TYPE room_no_array IS TABLE OF CHAR(1)
        INDEX BY BINARY_INTEGER;

      room_nos room_no_array;

      PROCEDURE lock_room (room_no IN room.room_no%TYPE) IS
        dummy room.room_no%TYPE;
      BEGIN
        SELECT r.room_no
        INTO   dummy
        FROM   room r
        WHERE  r.room_no = lock_room.room_no
        FOR UPDATE;
      END lock_room;

      PROCEDURE validate_room (room_no IN room.room_no%TYPE) IS
        overlapping_booking EXCEPTION;
        dummy CHAR(1);
      BEGIN
        -- check for overlapping date/time ranges
        BEGIN
          SELECT 'X' INTO dummy
          FROM   room_booking rb1
                ,room_booking rb2
          WHERE  rb1.room_no = validate_room.room_no
          AND    rb2.room_no = validate_room.room_no
          AND    rb1.booked_from != rb2.booked_from
          AND    (
                  rb1.booked_from BETWEEN rb2.booked_from
                                      AND rb2.booked_to
                  OR
                  rb1.booked_to BETWEEN rb2.booked_from
                                    AND rb2.booked_to
                 )
          AND    ROWNUM = 1;
          RAISE overlapping_booking;
        EXCEPTION
          WHEN NO_DATA_FOUND THEN
            -- good, no constraint violations
            NULL;
        END;
      EXCEPTION
        WHEN overlapping_booking THEN
          RAISE_APPLICATION_ERROR(-20000,
            'Overlapping booking for room #' || room_no);
      END validate_room;

      PROCEDURE validate_rooms IS
        room_no room.room_no%TYPE;
      BEGIN
        room_no := room_nos.FIRST;
        LOOP
          EXIT WHEN room_no IS NULL;
          validate_room (room_no);
          room_no := room_nos.NEXT(room_no);
        END LOOP;
        room_nos.DELETE;
      EXCEPTION
        WHEN OTHERS THEN
          room_nos.DELETE;
          RAISE;
      END validate_rooms;

      BEFORE EACH ROW IS
      BEGIN
        -- lock the header record (so other sessions
        -- can't modify the bookings for this room
        -- at the same time)
        lock_room(:NEW.room_no);
        -- remember the room_no to validate later
        room_nos(:NEW.room_no) := 'Y';
      END BEFORE EACH ROW;

      AFTER STATEMENT IS
      BEGIN
        validate_rooms;
      END AFTER STATEMENT;

    END room_booking_trg;
    /

That’s all you need. The trigger locks the header record for the room, so only one session can modify the bookings for a particular room at any one time. If you don’t have a table like “room” in your database that you can use for this purpose, you could use DBMS_LOCK instead (similar to the approach proposed in the OTN forum discussion here).
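For what it's worth, here is a sketch of how the DBMS_LOCK alternative might look. It is not the code from the forum discussion: the procedure name lock_room_by_name and the lock name prefix ROOM_BOOKING_ are my own inventions, and it assumes your schema has been granted EXECUTE on DBMS_LOCK. The idea is that the trigger would call this procedure in place of lock_room.

```sql
CREATE OR REPLACE PROCEDURE lock_room_by_name (p_room_no IN NUMBER) IS
  l_handle VARCHAR2(128);
  l_result INTEGER;

  -- ALLOCATE_UNIQUE performs a COMMIT, which is not allowed inside a
  -- trigger, so it is isolated in an autonomous transaction
  FUNCTION get_handle (p_lock_name IN VARCHAR2) RETURN VARCHAR2 IS
    PRAGMA AUTONOMOUS_TRANSACTION;
    l_h VARCHAR2(128);
  BEGIN
    DBMS_LOCK.ALLOCATE_UNIQUE
      (lockname   => p_lock_name
      ,lockhandle => l_h);
    COMMIT;
    RETURN l_h;
  END get_handle;

BEGIN
  -- hypothetical naming scheme: one named lock per room
  l_handle := get_handle('ROOM_BOOKING_' || p_room_no);

  -- wait for an exclusive lock; it is released at COMMIT or ROLLBACK
  l_result := DBMS_LOCK.REQUEST
    (lockhandle        => l_handle
    ,lockmode          => DBMS_LOCK.X_MODE
    ,timeout           => DBMS_LOCK.MAXWAIT
    ,release_on_commit => TRUE);

  -- 0 = success, 4 = this session already owns the lock
  IF l_result NOT IN (0, 4) THEN
    RAISE_APPLICATION_ERROR(-20001,
      'Unable to lock room #' || p_room_no
      || ' (DBMS_LOCK.REQUEST returned ' || l_result || ')');
  END IF;
END lock_room_by_name;
/
```

Setting release_on_commit to TRUE makes the named lock behave much like the row lock taken by SELECT ... FOR UPDATE: other sessions wait until the transaction ends.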

It would not be difficult to adapt this example for alternative requirements, e.g. where the From and To dates may be NULL, or where the overlapping criteria should allow date/time ranges that coincide at their endpoints (e.g. so that the date ranges (1-Feb-2000 to 2-Feb-2000) and (2-Feb-2000 to 3-Feb-2000) would not be considered to overlap). You’d just need to modify the comparison in the query in validate_room to take these requirements into account.
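As an illustration of the second variation, the query below uses a "half-open" comparison, so two ranges that only coincide at an endpoint are not treated as overlapping. It is written as a standalone query against room_booking so you can try it by itself; the same predicates could replace the BETWEEN conditions in validate_room. The column aliases are my own.

```sql
-- Lists the pairs of bookings for the same room that genuinely overlap.
-- (1-Feb-2000 to 2-Feb-2000) and (2-Feb-2000 to 3-Feb-2000) are NOT reported.
SELECT rb1.room_no
      ,rb1.booked_from
      ,rb1.booked_to
      ,rb2.booked_from AS other_booked_from
      ,rb2.booked_to   AS other_booked_to
FROM   room_booking rb1
      ,room_booking rb2
WHERE  rb1.room_no = rb2.room_no
AND    rb1.booked_from != rb2.booked_from  -- don't compare a row with itself
AND    rb1.booked_from < rb2.booked_to     -- rb1 starts before rb2 ends
AND    rb2.booked_from < rb1.booked_to;    -- rb2 starts before rb1 ends
```

Each overlapping pair appears twice, once in each order. If booked_to were nullable to mean "open-ended", one option would be to wrap it in NVL with a far-future date, e.g. NVL(rb1.booked_to, DATE '9999-12-31').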

Test case #1

    INSERT INTO room (room_no) VALUES (101);
    INSERT INTO room (room_no) VALUES (201);
    INSERT INTO room (room_no) VALUES (301);

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (101, DATE '2000-01-01', DATE '2000-01-02' - 0.00001);

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (101, DATE '2000-01-02', DATE '2000-01-03' - 0.00001);

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (201, DATE '2000-02-01', DATE '2000-02-05' - 0.00001);

Expected: no errors

Test case #2

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (201, DATE '2000-02-01', DATE '2000-02-02' - 0.00001);

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (201, DATE '2000-02-02', DATE '2000-02-04' - 0.00001);

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (201, DATE '2000-02-03', DATE '2000-02-05' - 0.00001);

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (201, DATE '2000-02-03', DATE '2000-02-06' - 0.00001);

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (201, DATE '2000-01-31', DATE '2000-02-01');

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (201, DATE '2000-01-31', DATE '2000-02-02' - 0.00001);

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (201, DATE '2000-01-31', DATE '2000-02-06' - 0.00001);

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (201, DATE '2000-02-05' - 0.00001, DATE '2000-02-06' - 0.00001);

    UPDATE room_booking SET booked_to = DATE '2000-01-02' - 0.00001
    WHERE room_no = 101 AND booked_from = DATE '2000-01-02';

Expected: constraint violation on each statement

Test case #3

in session #1:

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (301, DATE '2000-01-01', DATE '2000-02-01' - 0.00001);

in session #2:

    INSERT INTO room_booking (room_no, booked_from, booked_to)
    VALUES (301, DATE '2000-01-15', DATE '2000-01-16' - 0.00001);

Expected: session #2 will wait until session #1 issues a COMMIT or ROLLBACK. If session #1 COMMITs, session #2 will then report a constraint violation. If session #1 rolls back, session #2 will complete without error.

The No-Trigger option #3: Materialized View

This is similar to a solution proposed by Rob Van Wijk. It uses a constraint on a materialized view to stop overlapping date ranges.

So, instead of the trigger, you would do something like this:

    CREATE MATERIALIZED VIEW LOG ON room_booking WITH ROWID;

    CREATE MATERIALIZED VIEW room_booking_date_ranges
      REFRESH FORCE ON COMMIT
      AS SELECT 'X' AS dummy
         FROM   room_booking rb1
               ,room_booking rb2
         WHERE  rb1.room_no = rb2.room_no
         AND    rb1.booked_from != rb2.booked_from
         AND    (
                 rb1.booked_from BETWEEN rb2.booked_from
                                     AND rb2.booked_to
                 OR
                 rb1.booked_to BETWEEN rb2.booked_from
                                   AND rb2.booked_to
                );

    ALTER TABLE room_booking_date_ranges
      ADD CONSTRAINT no_overlapping_dates_ck
      CHECK ( dummy = 'Z' );

The nice thing about this solution is that it is simpler to code, and seems more “declarative” in nature. Also, you don’t have to worry about concurrency at all.

The constraint is checked at COMMIT-time when the materialized view is refreshed; so it behaves like a deferred constraint, which may be an advantage for some scenarios.

I believe it may perform better than the trigger-based option when large volumes of data are inserted or updated; however it may perform worse than the trigger-based option when you have lots of small transactions. This is because, unfortunately, the query here cannot be a “REFRESH FAST ON COMMIT” (if you know how this could be changed into a REFRESH FAST MV, please let me know!).

What do you think? If you see any potential issues with the above solutions please feel free to comment.

EDIT 30/8: added some more test cases

I recently saw this question on StackOverflow (“Is there any way to determine if a package has state in Oracle?”) which caught my attention.

You’re probably already aware that when a package is recompiled, any sessions that were using that package won’t even notice the change; unless that package has “state” – i.e. if the package has one or more package-level variables or constants. The current value of these variables and constants is kept in the PGA for each session; but if you recompile the package (or modify something on which the package depends), Oracle cannot know for certain whether the new version of the package needs to reset the values of the variables or not, so it errs on the side of caution and discards them. The next time the session tries to access the package in any way, Oracle will raise ORA-04068, and reset the package state. After that, the session can try again and it will work fine.

Side Note: There are a number of approaches to solving the ORA-04068 problem, some of which are given as answers to this question here. Not all of them are appropriate for every situation. Another approach not mentioned there is to avoid or minimize it – move all the package variables to a separate package, which hopefully will be invalidated less often.
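A minimal sketch of that last idea follows. The package names booking_state and booking_api are hypothetical; the point is only that the variables live in a package with no body and no logic, which rarely needs to change.

```sql
-- Hypothetical "state only" package: just variables, no code to maintain
CREATE OR REPLACE PACKAGE booking_state AS
  g_last_room_no NUMBER;
END booking_state;
/

-- Hypothetical logic package: no package-level variables of its own
CREATE OR REPLACE PACKAGE booking_api AS
  PROCEDURE remember_room (p_room_no IN NUMBER);
  FUNCTION last_room RETURN NUMBER;
END booking_api;
/

CREATE OR REPLACE PACKAGE BODY booking_api AS

  PROCEDURE remember_room (p_room_no IN NUMBER) IS
  BEGIN
    -- the value is stored in the other package
    booking_state.g_last_room_no := p_room_no;
  END remember_room;

  FUNCTION last_room RETURN NUMBER IS
  BEGIN
    RETURN booking_state.g_last_room_no;
  END last_room;

END booking_api;
/
```

With this split, recompiling the booking_api body does not invalidate booking_state, so the values held there survive; they are only discarded if booking_state itself (or something it depends on) is recompiled.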

It’s quite straightforward to tell whether a given package has “state” and thus has the potential for causing ORA-04068: look for any variables or constants declared in the package specification or body. If you have a lot of packages, however, you might want to get a listing of all of them. To do this, you can use the new PL/Scope feature introduced in Oracle 11g.

    select object_name AS package,
           type,
           name AS variable_name
    from   user_identifiers
    where  object_type IN ('PACKAGE','PACKAGE BODY')
    and    usage = 'DECLARATION'
    and    type in ('VARIABLE','CONSTANT')
    and    usage_context_id in (
             select usage_id
             from   user_identifiers
             where  type = 'PACKAGE'
           );

If you have compiled the packages in the schema with PL/Scope on (i.e. alter session set plscope_settings='IDENTIFIERS:ALL';), this query will list all the packages and the variables that mean they will potentially have state.
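If your packages were compiled before PL/Scope was switched on, they won't appear in user_identifiers until they are recompiled. Something along these lines should do it; my_pkg is a placeholder for one of your own packages.

```sql
-- Collect identifier data for anything compiled in this session from now on
ALTER SESSION SET plscope_settings = 'IDENTIFIERS:ALL';

-- Recompile the package specification and body (my_pkg is a placeholder name)
ALTER PACKAGE my_pkg COMPILE;

-- Check which packages were compiled with PL/Scope enabled
SELECT name, type, plscope_settings
FROM   user_plsql_object_settings
WHERE  type IN ('PACKAGE','PACKAGE BODY')
ORDER BY name, type;
```

Bear in mind that recompiling a package with state will itself trigger ORA-04068 in any sessions that are using it, so this is best done in a development environment.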

Before this question was raised, I hadn’t used PL/Scope for real; it was quite pleasing to see how easy it was to use to answer this particular question. This also illustrates a good reason why I like to hang out on StackOverflow: it’s a great way to learn something new every day.

Ever since I downloaded the Alexandria PL/SQL library, I haven’t been able to put it down. Just recently I decided I wanted to serve up a whole lot of media files directly from Amazon’s S3 simple storage service, instead of serving them from within my EC2 (elastic compute) instance. They were just wasting my Linux server’s time responding to HTTP requests.

So, I quickly wrote the following code to transfer them:

    DECLARE
      l_blob BLOB;
    BEGIN
      /* initialise my AWS session */
      ALEX.amazon_aws_auth_pkg.init
        ( 'yyy-my-aws-id-yyy'
        , 'xxx-not-telling-xxx'
        , p_gmt_offset => -8);

      FOR rec IN (
        SELECT id, filename, mime_type, location
        FROM   myfiles
        WHERE  location = 'http://myserver/media/'
      ) LOOP

        /* read the file from its current location */
        l_blob := ALEX.http_util_pkg.get_blob_from_url
          (rec.location || rec.filename);

        IF DBMS_LOB.getLength(l_blob) > 0 THEN

          /* upload the file to Amazon S3 */
          ALEX.amazon_aws_s3_pkg.new_object
            ( 'mybucket'
            , rec.filename
            , l_blob
            , rec.mime_type
            , ALEX.amazon_aws_s3_pkg.g_acl_public_read);

          UPDATE myfiles
          SET    location = 'http://mybucket.s3-ap-southeast-1.amazonaws.com/'
          WHERE  id = rec.id;

          COMMIT;

        END IF;

      END LOOP;
    END;

After a short while, all the files had been copied across to my bucket on S3, and my table updated so that my web site now points people’s browsers to the new location for those files.

Of course, I could have used UTL_FILE to read the files from disk, but then I’d have to first create a directory, and write a loop to read the file in chunks into the BLOB. Why bother with all that when I can just call http_util_pkg.get_blob_from_url and get it all in one go?

That’s the trouble with powerful utilities like Alexandria: they’re too easy to use, make tasks like this trivial, and you start finding all sorts of uses for them. All of a sudden, Alexandria is your hammer, and the world is full of nails.

See also: this quick intro to using Alexandria’s API for Amazon S3.

My current client has a large number of APEX applications, one of which is a doozy. It is a mission-critical and complex application in APEX 4.0.2 used throughout the business, with an impressively long list of features, with an equally impressively long list of enhancement requests in the queue.
