This is just a post to document a standard library that I like to create in Oracle Forms, if equivalents are not already available. Suggestions or critiques are welcome.

“No warranty is express or implied about the suitability of this code for any purpose.”

Specification

PACKAGE PKG_FORM IS

PROCEDURE centre_window (windown IN VARCHAR2);

PROCEDURE check_record_is_saved;

PROCEDURE commit;

FUNCTION current_record (blockn IN VARCHAR2) RETURN INTEGER;

FUNCTION current_record_status (blockn IN VARCHAR2) RETURN VARCHAR2;

PROCEDURE delay_action (timern IN VARCHAR2);

PROCEDURE set_current_record_status

(blockn IN VARCHAR2

,istatus IN NUMBER);

PROCEDURE set_mdi_window_title (clock IN BOOLEAN := TRUE);

-- gets the date/time from the app server

FUNCTION system_datetime RETURN DATE;

END PKG_FORM;

Package Body

PACKAGE BODY PKG_FORM IS

cAPP_TITLE CONSTANT VARCHAR2(100) := '[insert app title here]';

PROCEDURE centre_window (windown IN VARCHAR2) IS

cMAIN_WINDOW CONSTANT VARCHAR2(100) := '[insert main window name here]';

x NUMBER;

y NUMBER;

main_win WINDOW := FIND_WINDOW(cMAIN_WINDOW);

window_id WINDOW := FIND_WINDOW(windown);

BEGIN

x := GET_WINDOW_PROPERTY(main_win,X_POS)

+ (GET_WINDOW_PROPERTY(main_win,WIDTH )

- GET_WINDOW_PROPERTY(window_id,WIDTH )) / 2;

y := GET_WINDOW_PROPERTY(main_win,Y_POS)

+ (GET_WINDOW_PROPERTY(main_win,HEIGHT)

- GET_WINDOW_PROPERTY(window_id,HEIGHT)) / 2;

SHOW_WINDOW(window_id, x, y);

END centre_window;

PROCEDURE check_record_is_saved IS

BEGIN

IF :SYSTEM.FORM_STATUS = 'CHANGED' THEN

PKG_MESSAGE.error('Please save or cancel your changes first.');

END IF;

END check_record_is_saved;

PROCEDURE commit IS

msglevel VARCHAR2(2) := :SYSTEM.MESSAGE_LEVEL;

BEGIN

msg('PKG_FORM.commit');

-- set message level to avoid FRM-40401 "No changes to save."

:SYSTEM.MESSAGE_LEVEL := '5';

COMMIT_FORM;

:SYSTEM.MESSAGE_LEVEL := msglevel;

IF :SYSTEM.FORM_STATUS != 'QUERY' THEN

PKG_MESSAGE.error('Unable to save changes.');

END IF;

END commit;

FUNCTION current_record (blockn IN VARCHAR2) RETURN INTEGER IS

BEGIN

RETURN GET_BLOCK_PROPERTY(blockn, CURRENT_RECORD);

END current_record;

FUNCTION current_record_status (blockn IN VARCHAR2) RETURN VARCHAR2 IS

BEGIN

RETURN GET_RECORD_PROPERTY(

GET_BLOCK_PROPERTY(blockn, CURRENT_RECORD),

blockn,

STATUS);

END current_record_status;

PROCEDURE delay_action (timern IN VARCHAR2) IS

-- requires a suitable WHEN-TIMER-EXPIRED form-level trigger

timer_id TIMER;

BEGIN

IF ID_NULL(FIND_TIMER(timern)) THEN

msg('CREATE_TIMER('||timern||')');

timer_id := CREATE_TIMER(timern,1,NO_REPEAT);

END IF;

END delay_action;

PROCEDURE set_current_record_status

(blockn IN VARCHAR2

,istatus IN NUMBER) IS

BEGIN

SET_RECORD_PROPERTY(

GET_BLOCK_PROPERTY(blockn, CURRENT_RECORD),

blockn,

STATUS,

istatus);

END set_current_record_status;

PROCEDURE set_mdi_window_title (clock IN BOOLEAN := TRUE) IS

--scaling factor is the number of milliseconds in a second

cSCALING_FACTOR CONSTANT NUMBER := 1000;

--don't update the time more than once every 10 seconds

cMIN_UPDATE_FREQUENCY CONSTANT NUMBER := 10;

date_time DATE;

timer_id TIMER;

seconds PLS_INTEGER;

ms PLS_INTEGER;

BEGIN

IF clock THEN

date_time := system_datetime;

SET_WINDOW_PROPERTY(FORMS_MDI_WINDOW, TITLE,

cAPP_TITLE || ' - '

|| TO_CHAR(date_time,'Dy FMDD Mon YYYY HHFM:MIpm'));

IF ID_NULL(FIND_TIMER('CLOCK')) THEN

--get the seconds portion of the current time

seconds := (date_time - TRUNC(date_time,'MI')) * 86400;

--update the time at the end of the minute (or thereabouts)

ms := GREATEST((61 - seconds) * cSCALING_FACTOR

,cMIN_UPDATE_FREQUENCY * cSCALING_FACTOR);

timer_id := CREATE_TIMER('CLOCK', ms, NO_REPEAT);

END IF;

ELSE

SET_WINDOW_PROPERTY(FORMS_MDI_WINDOW, TITLE, cAPP_TITLE);

END IF;

END set_mdi_window_title;

FUNCTION system_datetime RETURN DATE IS

-- gets the date/time from the app server

-- should perform better than calling SYSDATE all the time

-- WARNING: this assumes that the builtin date format includes the time component!

BEGIN

RETURN TO_DATE(:SYSTEM.EFFECTIVE_DATE,

GET_APPLICATION_PROPERTY(BUILTIN_DATE_FORMAT));

END system_datetime;

END PKG_FORM;
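The timer arithmetic in set_mdi_window_title is easy to get wrong, so here is a minimal Python sketch of just that calculation. It is only an illustration of the same formula; the function name clock_timer_ms is made up for this example and nothing here runs inside Forms.

```python
from datetime import datetime

MS_PER_SECOND = 1000       # plays the role of cSCALING_FACTOR
MIN_UPDATE_SECONDS = 10    # plays the role of cMIN_UPDATE_FREQUENCY


def clock_timer_ms(now: datetime) -> int:
    """Milliseconds to wait before refreshing the clock in the title bar.

    Aim for one second past the next minute boundary, but never wait
    less than MIN_UPDATE_SECONDS.
    """
    seconds = now.second  # seconds portion of the current time
    to_next_minute = (61 - seconds) * MS_PER_SECOND
    return max(to_next_minute, MIN_UPDATE_SECONDS * MS_PER_SECOND)
```

At 0 seconds past the minute this gives 61000 ms, at 30 seconds it gives 31000 ms, and at 55 seconds the 10-second floor applies, so the result is 10000 ms.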

Example form-level trigger: when-timer-expired

DECLARE

timern VARCHAR2(100) := GET_APPLICATION_PROPERTY(TIMER_NAME);

BEGIN

msg('when-timer-expired ' || timern);

CASE timern

WHEN 'CLOCK' THEN

PKG_FORM.set_mdi_window_title;

WHEN 'PKGNAME_XYZ' THEN

PKGNAME.xyz;

-- ... etc. ...

ELSE

PKG_MESSAGE.error('Unexpected timer name: ' || timern, 'System Error');

END CASE;

END;

Specification

The main point of this package is to (a) easily make form items more proactive regarding business requirements, e.g. by making it simple to make items conditionally editable, readonly or mandatory; and (b) mitigate the pain of diagnosing bugs with the way items work (by logging when most of these procedures are called).

PACKAGE PKG_ITEM IS

-- set item to value, without changing item state if possible

PROCEDURE assign (itemn IN VARCHAR2, value IN VARCHAR2);

FUNCTION current_record (itemn IN VARCHAR2) RETURN INTEGER;

PROCEDURE disable_button (itemn IN VARCHAR2);

PROCEDURE enable_button (itemn IN VARCHAR2);

PROCEDURE go_next (itemn IN VARCHAR2);

PROCEDURE go_prev (itemn IN VARCHAR2);

PROCEDURE hide (itemn IN VARCHAR2);

FUNCTION is_navigable (itemn IN VARCHAR2) RETURN BOOLEAN;

PROCEDURE set_editable

(itemn IN VARCHAR2

,rec IN INTEGER := NULL

,editable IN BOOLEAN := TRUE

,set_null IN BOOLEAN := FALSE);

PROCEDURE set_readonly (itemn IN VARCHAR2, rec IN INTEGER := NULL);

PROCEDURE set_required (itemn IN VARCHAR2, rec IN INTEGER := NULL);

PROCEDURE set_valid (itemn IN VARCHAR2);

PROCEDURE set_visattr (itemn IN VARCHAR2, currec IN BOOLEAN);

PROCEDURE show (itemn IN VARCHAR2);

END PKG_ITEM;

Package Body

PACKAGE BODY PKG_ITEM IS

cVISATTR_NORMAL CONSTANT VARCHAR2(100) := 'NORMAL_ITEM';

cVISATTR_CURRENT CONSTANT VARCHAR2(100) := 'CURRENT_RECORD';

cVISATTR_DISPLAY CONSTANT VARCHAR2(100) := 'DISPLAY_ITEM';

cVISATTR_REQUIRED CONSTANT VARCHAR2(100) := 'REQUIRED_ITEM';

PROCEDURE assign (itemn IN VARCHAR2, value IN VARCHAR2) IS

-- set item to value, without changing item state if possible

BEGIN

IF NAME_IN(itemn) != value

OR (NAME_IN(itemn) IS NULL AND value IS NOT NULL)

OR (NAME_IN(itemn) IS NOT NULL AND value IS NULL) THEN

PKG_DEBUG.msg(itemn || ':=' || value);

COPY(value, itemn);

END IF;

END assign;

FUNCTION current_record (itemn IN VARCHAR2) RETURN INTEGER IS

--gets the record number for the block for the given item

BEGIN

RETURN GET_BLOCK_PROPERTY

(SUBSTR(itemn,1,INSTR(itemn,'.')-1)

,CURRENT_RECORD);

END current_record;

PROCEDURE disable_button (itemn IN VARCHAR2) IS

BEGIN

msg('PKG_ITEM.disable_button('||itemn||')');

IF :SYSTEM.CURSOR_ITEM = UPPER(itemn) THEN

DO_KEY('NEXT_ITEM');

check_package_failure;

END IF;

SET_ITEM_PROPERTY(itemn, ENABLED, PROPERTY_FALSE);

END disable_button;

PROCEDURE enable_button (itemn IN VARCHAR2) IS

item_id ITEM := FIND_ITEM(itemn);

BEGIN

msg('PKG_ITEM.enable_button('||itemn||')');

SET_ITEM_PROPERTY(item_id, ENABLED, PROPERTY_TRUE);

SET_ITEM_PROPERTY(item_id, NAVIGABLE, PROPERTY_TRUE);

END enable_button;

PROCEDURE go_next (itemn IN VARCHAR2) IS

--This is used when doing a GO_ITEM in order to implement a NEXT_ITEM.

--This will issue a NEXT_ITEM if the item we've just gone to

--is not currently navigable on the current record.

BEGIN

msg('PKG_ITEM.go_next('||itemn||')');

GO_ITEM(itemn);

check_package_failure;

IF GET_ITEM_INSTANCE_PROPERTY(:SYSTEM.CURSOR_ITEM

,GET_BLOCK_PROPERTY(:SYSTEM.CURSOR_BLOCK, CURRENT_RECORD)

,NAVIGABLE) = 'FALSE' THEN

NEXT_ITEM;

check_package_failure;

END IF;

END go_next;

PROCEDURE go_prev (itemn IN VARCHAR2) IS

--This is used when doing a GO_ITEM in order to implement a PREVIOUS_ITEM.

--This will issue a PREVIOUS_ITEM if the item we've just gone to

--is not currently navigable on the current record.

BEGIN

msg('PKG_ITEM.go_prev('||itemn||')');

GO_ITEM(itemn);

check_package_failure;

IF GET_ITEM_INSTANCE_PROPERTY(:SYSTEM.CURSOR_ITEM

,GET_BLOCK_PROPERTY(:SYSTEM.CURSOR_BLOCK, CURRENT_RECORD)

,NAVIGABLE) = 'FALSE' THEN

PREVIOUS_ITEM;

check_package_failure;

END IF;

END go_prev;

PROCEDURE hide (itemn IN VARCHAR2) IS

BEGIN

msg('PKG_ITEM.hide('||itemn||')');

IF :SYSTEM.CURSOR_ITEM = UPPER(itemn) THEN

NEXT_ITEM;

check_package_failure;

END IF;

SET_ITEM_PROPERTY(itemn, VISIBLE, PROPERTY_FALSE);

END hide;

FUNCTION is_navigable (itemn IN VARCHAR2) RETURN BOOLEAN IS

BEGIN

RETURN GET_ITEM_PROPERTY(itemn, NAVIGABLE) = 'TRUE';

END is_navigable;

PROCEDURE set_editable

(itemn IN VARCHAR2

,rec IN INTEGER := NULL

,editable IN BOOLEAN := TRUE

,set_null IN BOOLEAN := FALSE) IS

item_id ITEM;

BEGIN

msg('PKG_ITEM.set_editable('||itemn||','||rec||')');

IF editable THEN

item_id := FIND_ITEM(itemn);

IF rec IS NULL THEN

IF :SYSTEM.CURSOR_ITEM != UPPER(itemn) THEN

SET_ITEM_PROPERTY(item_id, VISIBLE, PROPERTY_TRUE);

SET_ITEM_PROPERTY(item_id, ENABLED, PROPERTY_TRUE);

END IF;

SET_ITEM_PROPERTY(item_id, NAVIGABLE, PROPERTY_TRUE);

SET_ITEM_PROPERTY(item_id, INSERT_ALLOWED, PROPERTY_TRUE);

SET_ITEM_PROPERTY(item_id, UPDATE_ALLOWED, PROPERTY_TRUE);

SET_ITEM_PROPERTY(item_id, VISUAL_ATTRIBUTE, cVISATTR_NORMAL);

ELSE

SET_ITEM_INSTANCE_PROPERTY(item_id, rec, NAVIGABLE, PROPERTY_TRUE);

SET_ITEM_INSTANCE_PROPERTY(item_id, rec, INSERT_ALLOWED, PROPERTY_TRUE);

SET_ITEM_INSTANCE_PROPERTY(item_id, rec, UPDATE_ALLOWED, PROPERTY_TRUE);

SET_ITEM_INSTANCE_PROPERTY(item_id, rec, VISUAL_ATTRIBUTE, cVISATTR_NORMAL);

END IF;

ELSE

set_readonly(itemn, rec);

--clear any data the user may have entered into the item (current record only)

IF set_null AND NAME_IN(itemn) IS NOT NULL THEN

msg(itemn||':=NULL');

COPY(NULL, itemn);

END IF;

END IF;

END set_editable;

PROCEDURE set_readonly (itemn IN VARCHAR2, rec IN INTEGER := NULL) IS

item_id ITEM := FIND_ITEM(itemn);

BEGIN

msg('PKG_ITEM.set_readonly('||itemn||','||rec||')');

IF rec IS NULL THEN

SET_ITEM_PROPERTY(item_id, NAVIGABLE, PROPERTY_FALSE);

SET_ITEM_PROPERTY(item_id, INSERT_ALLOWED, PROPERTY_FALSE);

SET_ITEM_PROPERTY(item_id, UPDATE_ALLOWED, PROPERTY_FALSE);

SET_ITEM_PROPERTY(item_id, VISUAL_ATTRIBUTE, cVISATTR_DISPLAY);

ELSE

SET_ITEM_INSTANCE_PROPERTY(item_id, rec, NAVIGABLE, PROPERTY_FALSE);

SET_ITEM_INSTANCE_PROPERTY(item_id, rec, INSERT_ALLOWED, PROPERTY_FALSE);

SET_ITEM_INSTANCE_PROPERTY(item_id, rec, UPDATE_ALLOWED, PROPERTY_FALSE);

SET_ITEM_INSTANCE_PROPERTY(item_id, rec, VISUAL_ATTRIBUTE, cVISATTR_DISPLAY);

END IF;

END set_readonly;

PROCEDURE set_required (itemn IN VARCHAR2, rec IN INTEGER := NULL) IS

BEGIN

msg('PKG_ITEM.set_required('||itemn||','||rec||')');

IF rec IS NULL THEN

SET_ITEM_PROPERTY(itemn, REQUIRED, PROPERTY_TRUE);

SET_ITEM_PROPERTY(itemn, VISUAL_ATTRIBUTE, cVISATTR_REQUIRED);

ELSE

SET_ITEM_INSTANCE_PROPERTY(itemn, rec, REQUIRED, PROPERTY_TRUE);

SET_ITEM_INSTANCE_PROPERTY(itemn, rec, VISUAL_ATTRIBUTE, cVISATTR_REQUIRED);

END IF;

END set_required;

PROCEDURE set_valid (itemn IN VARCHAR2) IS

BEGIN

msg('PKG_ITEM.set_valid('||itemn||')');

SET_ITEM_PROPERTY(itemn, ITEM_IS_VALID, PROPERTY_TRUE);

END set_valid;

PROCEDURE set_visattr (itemn IN VARCHAR2, currec IN BOOLEAN) IS

rec INTEGER;

BEGIN

msg('PKG_ITEM.set_visattr('||itemn||')');

rec := current_record(itemn);

IF currec THEN

SET_ITEM_INSTANCE_PROPERTY(itemn, rec, VISUAL_ATTRIBUTE, cVISATTR_CURRENT);

ELSE

SET_ITEM_INSTANCE_PROPERTY(itemn, rec, VISUAL_ATTRIBUTE, cVISATTR_NORMAL);

END IF;

END set_visattr;

PROCEDURE show (itemn IN VARCHAR2) IS

BEGIN

msg('PKG_ITEM.show('||itemn||')');

SET_ITEM_PROPERTY(itemn, VISIBLE, PROPERTY_TRUE);

END show;

END PKG_ITEM;

Example usage:

PKG_ITEM.set_editable('EMP.SALARY'

,rec => PKG_FORM.current_record('EMP')

,editable => (:EMP.ROLE != 'CEO')

);

The above example makes the SALARY item readonly if the employee’s role is ‘CEO’ – you would call this from the post-query trigger on the block, so on rows where the role is not ‘CEO’, SALARY will be editable.

Setting the visual attributes at the row level like this on multi-row blocks, however, has one side-effect. If you have a Current Record Visual Attribute (CRVA) set on the block (or the form), this code will overwrite that, so the CRVA will not be effective for these items. There is a workaround (to be published).

For too long I’ve muddled my way through using the built-in “explain plan” feature of the IDE-that-shall-remain-nameless provided by the client; but now that they’ve successfully upgraded from 9i to 11g, I’m now in a much better place. No longer will I wonder which table each step in a plan refers to, or what filters are being applied where.

DBMS_XPLAN is the best!

--get plan for a query

set pagesize 0 linesize 999 heading off feedback off echo on

explain plan for

SELECT bla FROM mytable WHERE bla;

set echo off

select * from table(dbms_xplan.display(format=>'ALL'));

--get plan for cursor in cache

set pagesize 0 linesize 999 heading off feedback off

select * from table(dbms_xplan.display_cursor(

sql_id=>'fnumywukcm2ng', cursor_child_no=> 0, format=>'ALL'));
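If you would rather pull the plan from a script than from SQL*Plus, the same two steps can be wrapped in a few lines of Python. This is only a sketch: the function name explain is made up, and it assumes a DB-API cursor (for example one from cx_Oracle) connected to a schema that has a plan table.

```python
def explain(cursor, sql_text):
    """Return the DBMS_XPLAN output for sql_text as a list of lines.

    cursor is assumed to be an open DB-API cursor on an Oracle connection.
    sql_text must not end with a semicolon.
    """
    # Step 1: populate the plan table for the statement.
    cursor.execute("EXPLAIN PLAN FOR " + sql_text)
    # Step 2: format the most recent plan, one output line per row.
    cursor.execute(
        "SELECT plan_table_output "
        "FROM TABLE(DBMS_XPLAN.DISPLAY(format => 'ALL'))"
    )
    return [row[0] for row in cursor.fetchall()]


# Possible usage (needs a real database, so it is left commented out):
# import cx_Oracle
# curs = cx_Oracle.connect('scott/tiger').cursor()
# print("\n".join(explain(curs, "SELECT * FROM emp")))
```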

A colleague asked me a trick* question today which I failed 🙂

* whether it was a “trick” question is probably in the eye of the beholder, though…

“What are the differences, if any, between the following two approaches to inserting multiple rows?” (Assume v1 and v2 have different values.)

INSERT INTO mytable (a)

SELECT :v1 FROM DUAL;

INSERT INTO mytable (a)

SELECT :v2 FROM DUAL;

OR:

INSERT INTO mytable (a)

SELECT :v1 FROM DUAL

UNION

SELECT :v2 FROM DUAL;

I quickly answered:

- The first approach requires one extra parse;

- The second approach requires a Sort Distinct (albeit of only two rows)

- A UNION ALL would be better: it requires neither the sort nor the extra parse.

My colleague responded that there was one very important thing I had missed: triggers! The first approach would execute the BEFORE STATEMENT and AFTER STATEMENT triggers once per INSERT statement, that is, once for each row. The second approach would only execute these triggers once.

What’s sad is that the application we’re working on has row-level logic in the BEFORE/AFTER statement triggers. If we try to optimise the code to insert more than one row in one INSERT statement, the application only runs the row-level logic for the first row inserted. Bad code! Very very bad!
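The question assumed v1 and v2 were different, which hides one more difference between UNION and UNION ALL: when the two values happen to be equal, UNION collapses them into a single row. Here is a small Python sketch of that behaviour. It uses SQLite purely as a stand-in for Oracle (so there is no DUAL), and the helper name count_inserted is made up. SQLite has no statement-level triggers, so the trigger behaviour itself is not shown here.

```python
import sqlite3


def count_inserted(set_operator, v1, v2):
    """Insert v1 and v2 in one statement and return how many rows landed.

    set_operator is either "UNION" or "UNION ALL".
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE mytable (a)")
    conn.execute(
        "INSERT INTO mytable (a) SELECT ? " + set_operator + " SELECT ?",
        (v1, v2),
    )
    (row_count,) = conn.execute("SELECT COUNT(*) FROM mytable").fetchone()
    conn.close()
    return row_count
```

With different values both operators insert two rows; with equal values UNION inserts one row and UNION ALL still inserts two.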

I like the “Vertical Images List” in APEX, which allows me to create a navigation bar of icons to give users quick access to various pages in my site. It’s easy to customise each item – you can select any image, add attributes for the image if necessary, and each item in the list has a URL which can point to another page in the application, or to an arbitrary URL.

My problem, however, was that some of the URLs in my list took the user to another site, or opened a PDF, and these would open in the same window. I wanted these particular items to open a new window, but the navigation item properties don’t allow this.

To solve this, I modified the Vertical Images List template, and used one of the User Defined Attributes to add “target=_blank” to the items that I wanted. While I was in there, I made a few tweaks to customise the template further to my liking.

A. Modify the Vertical Images List template.

- Go to Shared Components and open the Templates (under User Interface).

- Scroll down to Vertical Images List (in the Lists category) and open it for editing.

- Modify the Template Definition (WARNING: the code for different APEX templates may differ slightly; you’ll have to use a bit of nous to customise it to your requirements). You can add substitution strings like #A01#, #A02#, etc. In my case I’ve used the following convention:

#A01# = extra text to appear below the icon & link;

#A02# = tooltip text for the hyperlink;

#A03# = extra attributes for the link (HTML <A> tag).

I’ve done this in both the “List Template Current” and “List Template Noncurrent” sections.

For example, for “List Template Noncurrent”, I’ve modified the template code as follows:

<tr><td><a href="#LINK#" TITLE="#A02#" #A03#>

<img src="#IMAGE_PREFIX##IMAGE#" #IMAGE_ATTR# />#TEXT#</a>

<BR>#A01#</td></tr>
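To see what the template above turns into for a given list entry, here is a rough Python sketch of the substitution. It is not how APEX itself is implemented; the function name render_list_item is made up, and treating an unset attribute as an empty string is an assumption.

```python
import re

NONCURRENT_TEMPLATE = (
    '<tr><td><a href="#LINK#" TITLE="#A02#" #A03#>'
    '<img src="#IMAGE_PREFIX##IMAGE#" #IMAGE_ATTR# />#TEXT#</a>'
    '<BR>#A01#</td></tr>'
)


def render_list_item(template, values):
    """Replace each #NAME# placeholder with values[NAME].

    Placeholders with no entry in values become empty strings
    (an assumption made for this sketch).
    """
    return re.sub(
        r"#([A-Z0-9_]+)#",
        lambda match: values.get(match.group(1), ""),
        template,
    )


html = render_list_item(NONCURRENT_TEMPLATE, {
    "LINK": "http://example.com/",
    "TEXT": "Address Book",
    "IMAGE_PREFIX": "/i/",
    "IMAGE": "book.png",
    "IMAGE_ATTR": 'width="32"',
    "A01": "external site",              # text below the link
    "A02": "Open the address book",      # tooltip
    "A03": 'target="_blank"',            # extra <A> attributes
})
```

The point of keeping #A03# outside the quoted attributes is that whatever you type into attribute 3 lands directly inside the opening <A> tag.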

B. Set User Defined Attributes.

- Open the Navigation List for editing (Shared Components -> Navigation -> Lists).

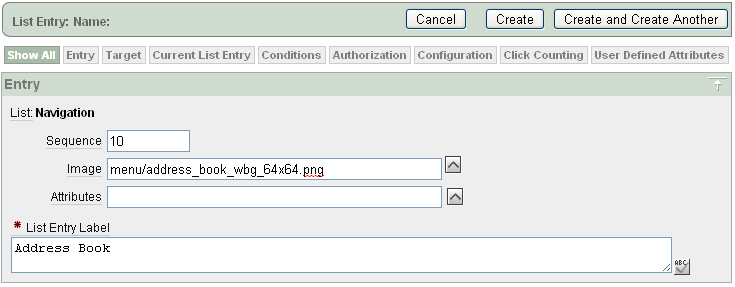

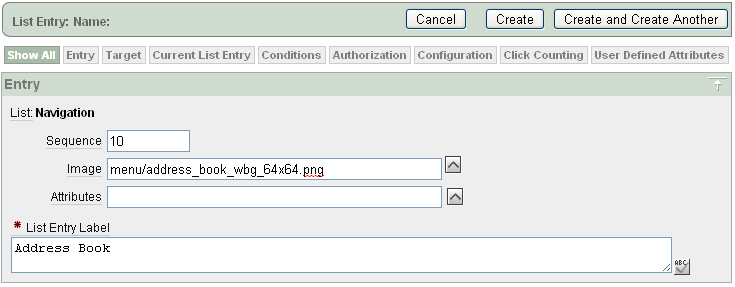

- Open the list item for editing that you wish to customise (or create a new one).

- In User Defined Attributes, attribute 1, add any text you wish to show beneath the link (but not highlighted as part of the link).

- For attribute 2, add the title you wish to show up as a tooltip.

- For attribute 3, add the html attribute “target=_blank” if you wish this navigation entry to open a new window when invoked.

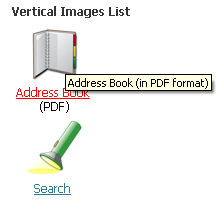

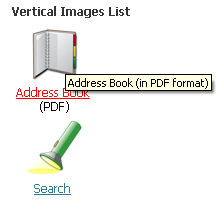

This is how it looks in a sample application:

If the user clicks on “Address Book”, the “target=_blank” attribute instructs the browser to open in a new window (or tab, in some cases).

My web site accepts applications for a sports team, and the last step is the applicant needs to print out a form to be signed. To make things as simple as possible, I want this form to send itself to their printer as soon as they open it. Now, I’m not a javascript expert; but instead of googling for the code, I stole it by doing a View Source on Google Mail’s print feature.

To get any APEX page to print when it is opened, all you need to do is add two bits to the page definition:

HTML Header

<script type="text/javascript">function Print(){document.body.offsetHeight;window.print()}</script>

Page HTML Body Attribute

onload="Print()"

Isn’t javascript easy? I’m not sure what the “document.body.offsetHeight” is all about but I suspect it’s something to do with waiting for the whole page to load and render before the print starts.

A colleague needed to get the average from a set of data, but disregarding the top 25% and the bottom 25%. We didn’t know of any builtin Oracle function that would do this, but a review of the wikipedia page for Average yielded the technical term: truncated (or trimmed) mean. So we searched the Oracle docs and Google for ways to implement this function and didn’t come up with anything very useful. There were some SQL Server scripts which would have required two or three passes over the dataset.

After browsing through the aggregate functions documentation, I hit upon the NTILE function which I’ve used before, and realised that was the answer. The NTILE function takes a set of ordered data and divides it evenly (or as evenly as possible) between a number of buckets. In our case, we wanted to discard the top 25% and bottom 25%, so we simply divide the set into 4 buckets and discard the 1st and the 4th buckets; then take the standard average of the remainder:

SELECT AVG(mystat)

FROM (

SELECT mystat,

NTILE(4) OVER (ORDER BY mystat) n

FROM (SELECT mystat

FROM mytable)

)

WHERE n IN (2,3);

The benefit of this query is that it makes only one pass over the data, and it was easily modified to partition the data set into groups; a count was also added so that the average could be taken over the entire data set for any groups that had fewer than 4 items.
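For anyone who wants to check the numbers outside the database, here is a minimal Python sketch of the same idea. The ntile helper mimics how NTILE hands out rows (when the rows do not divide evenly, the earlier buckets get one extra row each); the function names are made up for this illustration.

```python
def ntile(sorted_values, buckets):
    """Pair each value with its 1-based bucket number, NTILE style."""
    base, extra = divmod(len(sorted_values), buckets)
    tiled = []
    index = 0
    for bucket in range(1, buckets + 1):
        # The first `extra` buckets each take one additional row.
        size = base + (1 if bucket <= extra else 0)
        for value in sorted_values[index:index + size]:
            tiled.append((value, bucket))
        index += size
    return tiled


def truncated_mean(values):
    """Average of the middle two quartile buckets, or None if they are empty."""
    kept = [v for v, bucket in ntile(sorted(values), 4) if bucket in (2, 3)]
    return sum(kept) / len(kept) if kept else None
```

For the values 1 to 8 the buckets are (1,2), (3,4), (5,6), (7,8), so the truncated mean is the average of 3, 4, 5 and 6, which is 4.5. A single value lands in bucket 1 only, so nothing is left to average.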

To get the truncated mean in groups, except for groups with <4 items (for which we’ll report the average over the entire group):

SELECT mycat, AVG(mystat)

FROM (

SELECT mycat, mystat,

NTILE(4) OVER (PARTITION BY mycat

ORDER BY mystat) n,

COUNT(1) OVER (PARTITION BY mycat) c

FROM (SELECT mycat, mystat

FROM mytable)

)

WHERE n IN (2,3) OR c < 4

GROUP BY mycat

ORDER BY mycat;
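The grouped query can be cross-checked the same way. The sketch below is a Python illustration only, with made-up function names; bucket_of works out the NTILE bucket directly from a row's position, and groups with fewer than 4 rows keep every row, mirroring the "OR c < 4" condition.

```python
from collections import defaultdict


def bucket_of(position, n, buckets=4):
    """1-based NTILE bucket for a 0-based position among n ordered rows."""
    base, extra = divmod(n, buckets)
    boundary = extra * (base + 1)  # rows covered by the larger buckets
    if position < boundary:
        return position // (base + 1) + 1
    return extra + (position - boundary) // base + 1


def grouped_truncated_mean(rows):
    """rows is an iterable of (category, stat) pairs; returns {category: mean}."""
    groups = defaultdict(list)
    for category, stat in rows:
        groups[category].append(stat)
    result = {}
    for category in sorted(groups):
        stats = sorted(groups[category])
        n = len(stats)
        # Small groups keep all rows; larger groups keep buckets 2 and 3.
        kept = [s for i, s in enumerate(stats)
                if n < 4 or bucket_of(i, n) in (2, 3)]
        result[category] = sum(kept) / len(kept)
    return result
```

With eight rows 1 to 8 in category "a" and two rows 10 and 20 in category "b", this returns 4.5 for "a" and 15.0 for "b".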

Comment out all “DROP TABLE” commands in my scripts.

(I accidentally hit F5 when the focus was in the wrong window – which happened to contain a “DROP TABLE / CREATE TABLE” script – my Toad session goes and happily drops the table that I’d been gradually accumulating statistics into for the past 3 days – and no, there’s no flashback table in 9i)

At least I kept all my scripts – rerunning them all now…

Is it possible to change the ORDER BY on a query, if the SQL is defined in a wrapped PL/SQL package? A colleague asked me this interesting question today – and the answer was quite simply, Yes and No.

If the SQL already has an ORDER BY, then the answer is No. Fortunately, in our case, the SQL didn’t have any ORDER BY clause:

SELECT a.addressid

FROM address a, addresstype t

WHERE a.locationid = :locationid

AND a.typeid = t.typeid

AND t.typecode = 'STREET';

The plan currently being picked by the CBO (Oracle 9.2.0.8) is:

NESTED LOOPS

TABLE ACCESS BY ROWID addresstype

INDEX RANGE SCAN pkx_addresstype

INDEX RANGE SCAN ix_address

For background, here are the relevant schema details:

TABLE address

(addressid NUMBER PRIMARY KEY

,locationid NUMBER NOT NULL

,typeid NUMBER NOT NULL --FOREIGN KEY to addresstype

);

INDEX ix_address (locationid, typeid, addressid);

TABLE addresstype

(typeid NUMBER PRIMARY KEY

,typecode VARCHAR2(30) NOT NULL

);

typecode is actually unique, but no constraint has been declared to the database.

Because the first step in the plan (the TABLE ACCESS on addresstype) only ever gets one row (in practice), the index range scan on ix_address causes this query to return the rows in ascending order of addressid.

The process that runs this query gets rows one at a time, does some work to “check” it against some other data, and once it finds a “match”, it stops getting more rows.

This design probably seemed like a good idea at the time, but now the business would like this process changed so that in the (rare) occurrence when more than one row would “match”, the process should favour a more recent address rather than an old one. For our purposes, “recent” may be approximated by assuming that bigger addressids are more recent than smaller addressids.
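To make it concrete why the row order matters here, this is a tiny Python sketch of a "stop at the first match" loop. The vendor's real code is wrapped, so everything below (the function name, the is_match callback, the sample ids) is hypothetical.

```python
def first_match(address_ids, is_match):
    """Return the first address id that passes is_match, or None."""
    for address_id in address_ids:
        if is_match(address_id):
            return address_id  # stop fetching as soon as one row matches
    return None


# Hypothetical data: two of the three addresses would "match".
matching = {205, 310}
ascending = [101, 205, 310]

oldest_wins = first_match(ascending, lambda a: a in matching)
newest_wins = first_match(reversed(ascending), lambda a: a in matching)
```

Fed in ascending order the loop picks 205; fed the same rows in descending order it picks 310, without any change to the loop itself.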

We can’t change the code without a lengthy and costly call to the application vendor; it would be better if we can change it ourselves. We cannot change the PL/SQL package in which this query is defined; so what can we do? Can we change the order in which rows are returned from this query?

The answer is yes – firstly, the query has no ORDER BY, so the order of rows is affected only by the query plan. If we change the query plan, which we can do with a stored outline, the order of rows can be changed.

So in this case, all we have to do is create a stored outline to add an INDEX_DESC hint:

SELECT /*+INDEX_DESC(a)*/ a.addressid

FROM address a, addresstype t

WHERE a.locationid = :locationid

AND a.typeid = t.typeid

AND t.typecode = 'STREET';

This causes the following plan to be used:

NESTED LOOPS

TABLE ACCESS BY ROWID addresstype

INDEX RANGE SCAN pkx_addresstype

INDEX RANGE SCAN DESCENDING ix_address

With the stored outline, the query now returns the rows in descending order of addressid.

NOTE:

If you want to use this approach, it is highly dependent on being able to find a query plan that produces the ordering that you want. This may mean creating a suitable index designed strategically for this purpose, if one doesn’t exist already. Even creating indexes won’t guarantee that you’ll be able to find a plan that gets the order that you want; generally I’d say this approach will only be feasible for relatively simple queries.

This will create a file called “emp.xls” with a nicely formatted spreadsheet based on the query specified. The header row is based on the column names, and is frozen. The column widths are set according to the max width of the data.

import cx_Oracle

from table2Excel import write_cursor_to_excel

orcl = cx_Oracle.connect('scott/tiger')

curs = orcl.cursor()

curs.execute("""

SELECT e.ename "Employee",

e.job "Job",

e.hiredate "Hire Date",

e.sal "Salary",

e.comm "Commission",

d.dname "Department",

(SELECT ename FROM emp WHERE emp.empno = e.mgr) "Manager"

FROM emp e, dept d

WHERE e.deptno = d.deptno

""")

write_cursor_to_excel(curs, 'emp.xls', 'Employees')

You can get the source for table2Excel from here [table2Excel.py.txt]. Feel free to adapt it to your needs.
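If you just want the gist of how the header row and column widths can be derived, here is a minimal Python sketch. It is not the table2Excel source; the function name headers_and_widths is made up, it works with any DB-API cursor, and counting the header text towards the column width is an assumption.

```python
def headers_and_widths(cursor):
    """Return (headers, widths, rows) for an already-executed cursor.

    headers come from the column names in cursor.description;
    widths hold the longest text seen in each column, header included.
    """
    headers = [column[0] for column in cursor.description]
    widths = [len(header) for header in headers]
    rows = cursor.fetchall()
    for row in rows:
        for i, value in enumerate(row):
            text = "" if value is None else str(value)
            widths[i] = max(widths[i], len(text))
    return headers, widths, rows
```

The widths are plain character counts; a spreadsheet library would still need them scaled to whatever unit it uses for column width.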