This is just a story about a really weird bit of code. It was the type of code you look at and say, “that’s obviously wrong” and delete straight away.

One of the requirements was to rebuild an Oracle form, a data-entry form for receipts (cash, cheque, credit card, etc). Some of the cashiers use these cheque readers to read the numbers off the MICR band, and with the first release of the new form they reported a defect – “the cheque readers don’t work”.

I didn’t have access to a cheque reader when I was developing the form, but assumed that it would be a normal cheque reader – they put the cheque in, it reads it, it sends a series of digits to the computer. The form should work no differently than if the operator keyed in the numbers manually (which they have to do anyway if the cheque reader doesn’t work for a particular cheque).

So to investigate the defect I requisitioned a cheque reader, along with some test cheques; after some difficulty (turns out these things don’t work alongside my USB keyboard, I had to get a PS2 keyboard), it was working.

It didn’t take long to discover that the cheque reader was sending the cheque number and BSB in the wrong order, as far as the form was concerned, which is why the validation was failing.

I opened up the old form again, and had a good hard look at the fields. Turns out, what I missed originally is that there is a custom KEY-NEXT-ITEM trigger on the bank code field (which is where the operator puts the focus before using the cheque reader). It looks something like this:

DECLARE

v_data VARCHAR2(50) := REPLACE(REPLACE(:rct.bak_code

,' ','')

,CHR(9),'');

BEGIN

IF LENGTH(v_data) > 4 THEN

IF LENGTH(v_data) < 14 THEN

NULL;

ELSE

:rct.cheque_no := SUBSTR(v_data,1,6);

:rct.bak_code := SUBSTR(v_data,7,3);

:rct.branch := SUBSTR(v_data,10,3);

go_field('RCT.CHEQUE_TYPE');

END IF;

ELSE

go_field('RCT.BRANCH');

END IF;

END;

It turns out that:

(a) the REPLACE(REPLACE( code to remove spaces and tab characters (CHR(9)) is redundant, since the cheque reader never sends spaces, and when it sends a TAB, Oracle Forms doesn’t put a CHR(9) into the field anyway; instead it fires the KEY-NEXT-ITEM trigger

(b) if the length of the data is between 5 and 13, the KEY-NEXT-ITEM trigger does nothing; which means the focus stays in the bak_code field

It’s (b) that is the reason this worked. The trigger fires three times when the cheque reader is used; the third time the trigger fires, it’s got enough digits lumped together in the bak_code field, which it then splits apart, and moves the relevant bits to the cheque_no and branch fields.
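
To see the three branches of that trigger in isolation, here is a minimal sketch that runs the same length checks outside of Forms. The procedure name simulate and the sample digit strings are made up for illustration; the post doesn't say exactly what the reader sends beyond the cheque number and BSB, so the 14-digit value is only an assumption that satisfies the length test.

```sql
SET SERVEROUTPUT ON

DECLARE
  -- hypothetical helper: mimics the decisions made by the KEY-NEXT-ITEM trigger
  PROCEDURE simulate (p_data IN VARCHAR2) IS
  BEGIN
    IF LENGTH(p_data) > 4 THEN
      IF LENGTH(p_data) < 14 THEN
        -- 5 to 13 characters: do nothing, so focus stays in the field
        DBMS_OUTPUT.PUT_LINE(p_data || ': wait for more digits');
      ELSE
        -- enough digits have accumulated: split them into the three fields
        DBMS_OUTPUT.PUT_LINE(p_data
          || ': cheque_no=' || SUBSTR(p_data, 1, 6)
          || ' bak_code='   || SUBSTR(p_data, 7, 3)
          || ' branch='     || SUBSTR(p_data, 10, 3));
      END IF;
    ELSE
      -- 4 characters or fewer: treat as a manually keyed bank code
      DBMS_OUTPUT.PUT_LINE(p_data || ': go to the branch field');
    END IF;
  END simulate;
BEGIN
  simulate('032');             -- made-up manual entry
  simulate('123456');          -- made-up partial read
  simulate('12345603200199');  -- made-up full read (14 digits)
END;
/
```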

A normal, sane person, building this form, would have designed the form to accept the cheque number, bank code and branch in the same order that they are read from the cheque reader; that way, no special code is required – the cheque reader just tabs through the fields, filling them in as it goes.

Oh well – it’s too late to do a screen redesign now, so I’ve had to pretty much replicate the same behaviour in the new form; except that my new code is a little bit smarter – it can also read money orders, which I’ve been told will make the cashiers very happy.

There’s no difference between the effects of the following two statements, is there?

INSERT INTO mytable (col1, col2) VALUES ('hello','world');

INSERT INTO mytable (col1, col2) SELECT 'hello', 'world' FROM DUAL;

Well, as it turns out, it is possible for the first statement to succeed where the second statement would fail – in the presence of a suitably crafted Before Insert trigger, the second will raise “ORA-04091 table is mutating, trigger/function may not see it”:

http://oraclequirks.blogspot.com/2010/09/ora-04091-table-stringstring-is.html
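
For anyone who wants to reproduce this, a minimal sketch of one such trigger follows. It assumes a simple two-column version of mytable; the trigger name mytable_bi is hypothetical. The trigger does nothing useful except query the table it is defined on, which is enough to show the difference.

```sql
CREATE TABLE mytable (col1 VARCHAR2(10), col2 VARCHAR2(10));

-- hypothetical trigger: a row-level trigger that queries its own table
CREATE OR REPLACE TRIGGER mytable_bi
BEFORE INSERT ON mytable
FOR EACH ROW
DECLARE
  n NUMBER;
BEGIN
  SELECT COUNT(*) INTO n FROM mytable;
END;
/

-- single-row INSERT ... VALUES: the table is not treated as mutating
INSERT INTO mytable (col1, col2) VALUES ('hello','world');

-- INSERT ... SELECT: expected to raise ORA-04091, even for one row
INSERT INTO mytable (col1, col2) SELECT 'hello', 'world' FROM DUAL;
```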

An interesting discussion on the PL/SQL Challenge blog here has led to me changing my mind about “the best way” to loop through a sparse PL/SQL associative array.

Normally, if we know that an array has been filled, with no gaps in indices, we would use a simple FOR LOOP:

DECLARE

TYPE t IS TABLE OF NUMBER INDEX BY BINARY_INTEGER;

a t;

BEGIN

SELECT x BULK COLLECT INTO a FROM mytable;

-- 1..a.COUNT is safe even when no rows are fetched (a.FIRST would be NULL)

FOR i IN 1..a.COUNT LOOP

-- process a(i)

END LOOP;

END;

If, however, the array may be sparsely filled (i.e. there might be one or more gaps in the sequence), this was “the correct way” to loop through it:

Method A (First/Next)

DECLARE

TYPE t IS TABLE OF NUMBER INDEX BY BINARY_INTEGER;

a t;

i BINARY_INTEGER;

BEGIN

...

i := a.FIRST;

LOOP

EXIT WHEN i IS NULL;

-- process a(i)

i := a.NEXT(i);

END LOOP;

END;

Method A takes advantage of the fact that an associative array in Oracle is implemented internally as a linked list – the fastest way to “skip over” any gaps is to call the NEXT operator on the list for a given index.

Alternatively, one could still just loop through all the indices from the first to the last index; but the problem with this approach is that if an index is not found in the array, it will raise the NO_DATA_FOUND exception. Well, Method B simply catches the exception:

Method B (Handle NDF)

DECLARE

TYPE t IS TABLE OF NUMBER INDEX BY BINARY_INTEGER;

a t;

BEGIN

...

FOR i IN a.FIRST..a.LAST LOOP

BEGIN

-- process a(i)

EXCEPTION

WHEN NO_DATA_FOUND THEN

NULL;

END;

END LOOP;

END;

This code effectively works the same (with one important proviso*) as Method A. The difference, however, is in terms of relative performance. This method is much faster than Method A, if the array is relatively dense. If the array is relatively sparse, Method A is faster.

* It must be remembered that the NO_DATA_FOUND exception may be raised by a number of different statements in a program: if you use code like this, you must make sure that the exception was only raised by the attempt to access a(i), and not by some other code!

A third option is to loop through as in Method B, but call the EXISTS method on the array to check if the index is found, instead of relying on the NO_DATA_FOUND exception.

Method C (EXISTS)

DECLARE

TYPE t IS TABLE OF NUMBER INDEX BY BINARY_INTEGER;

a t;

BEGIN

...

FOR i IN a.FIRST..a.LAST LOOP

IF a.EXISTS(i) THEN

-- process a(i)

END IF;

END LOOP;

END;

The problem with this approach is that it effectively checks the existence of i in the array twice: once for the EXISTS check, and if found, again when actually referencing a(i). For a large array which is densely populated, depending on what processing is being done inside the loop, this could have a measurable impact on performance.

Bottom line: there is no “one right way” to loop through a sparse associative array. But there are some rules-of-thumb about performance we can take away:

- When the array is likely to be very sparsely populated over a large index range, use Method A (First/Next).

- When the array is likely to be very densely populated with a large number of elements, use Method B (Handle NDF). But watch how you catch the NO_DATA_FOUND exception!

- If you’re not sure, I’d tend towards Method A (First/Next) until performance problems are actually evident.

You probably noticed that I haven’t backed up any of these claims about performance with actual tests or results. You will find some in the comments to the afore-mentioned PL/SQL Challenge blog post; but I encourage you to log into a sandpit Oracle environment and test it yourself.
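
If you want a starting point for your own test, here is a rough timing harness for the three methods. It is only a sketch: the element count and the gap between indices are arbitrary test parameters that I've assumed, and DBMS_UTILITY.GET_TIME only measures elapsed time in hundredths of a second, so run it a few times and with different settings before drawing conclusions.

```sql
SET SERVEROUTPUT ON

DECLARE
  TYPE t IS TABLE OF NUMBER INDEX BY BINARY_INTEGER;
  a          t;
  i          BINARY_INTEGER;
  total      NUMBER;
  t0         NUMBER;
  -- assumed test parameters: change these to vary size and sparseness
  c_elements CONSTANT PLS_INTEGER := 100000;
  c_gap      CONSTANT PLS_INTEGER := 10;  -- 1 = dense, larger = sparser
BEGIN
  FOR j IN 1 .. c_elements LOOP
    a(j * c_gap) := j;
  END LOOP;

  -- Method A (First/Next)
  total := 0;
  t0 := DBMS_UTILITY.GET_TIME;
  i := a.FIRST;
  WHILE i IS NOT NULL LOOP
    total := total + a(i);
    i := a.NEXT(i);
  END LOOP;
  DBMS_OUTPUT.PUT_LINE('A: ' || (DBMS_UTILITY.GET_TIME - t0) || ' cs, total ' || total);

  -- Method B (Handle NDF)
  total := 0;
  t0 := DBMS_UTILITY.GET_TIME;
  FOR k IN a.FIRST .. a.LAST LOOP
    BEGIN
      total := total + a(k);
    EXCEPTION
      WHEN NO_DATA_FOUND THEN
        NULL;
    END;
  END LOOP;
  DBMS_OUTPUT.PUT_LINE('B: ' || (DBMS_UTILITY.GET_TIME - t0) || ' cs, total ' || total);

  -- Method C (EXISTS)
  total := 0;
  t0 := DBMS_UTILITY.GET_TIME;
  FOR k IN a.FIRST .. a.LAST LOOP
    IF a.EXISTS(k) THEN
      total := total + a(k);
    END IF;
  END LOOP;
  DBMS_OUTPUT.PUT_LINE('C: ' || (DBMS_UTILITY.GET_TIME - t0) || ' cs, total ' || total);
END;
/
```

All three totals should match; if they don't, the harness itself is wrong and the timings mean nothing.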

The cost of providing Virtual Machines to all your developers can be quite high, especially in terms of initially setting it all up (e.g. a typical developer may require two VMs running concurrently, one for the database server, one for the app server; their desktops will require enough grunt to run these while they also run their dev tools, or they’ll need at least two computers on their desks; also, you’ll need a means of scaling down the data volumes if your database is too big); but you will gain a whole lot more productivity, sanity, happiness and love from your developers.

10. Fail safe.

If everything goes terribly wrong, simply restore to snapshot (duh, obviously).

9. Handle shifting conditions outside your project.

As often as I can (e.g. after a few weeks if a lot of development has gone on), I re-run the scripts on a VM based on a fresh copy of Prod – any changes that anyone else has made without my knowing it, which affect my scripts adversely, get picked up early.

8. Upgrade scripts never raise an error (unless something unexpected occurs).

It is normal for a typical upgrade script to raise many errors (e.g. “object not found” when running a standard “DROP x, CREATE x” script). However, I wrap any command like this with a custom exception handler that swallows the errors that I know are expected or benign. That way, when I hand over my upgrade scripts to the DBA, I can say, “if you get any error messages, something’s gone wrong”, which is a bit better than handing over a list of error messages they can safely ignore. Even better, I can add WHENEVER SQLERROR EXIT to the top of my upgrade script, so it exits straight away if the upgrade fails at any point.
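
As a rough illustration of that pattern (not my actual scripts), a “DROP x, CREATE x” step might look like the sketch below. The table name my_table is hypothetical, and the only error swallowed is ORA-00942, which is what you get when the table isn’t there to drop.

```sql
-- stop the whole script at the first unexpected error
WHENEVER SQLERROR EXIT FAILURE ROLLBACK

BEGIN
  EXECUTE IMMEDIATE 'DROP TABLE my_table';  -- hypothetical table name
EXCEPTION
  WHEN OTHERS THEN
    -- ORA-00942 (table or view does not exist) is expected and benign here
    IF SQLCODE != -942 THEN
      RAISE;
    END IF;
END;
/

CREATE TABLE my_table (id NUMBER);
```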

7. Sanity Restore.

You’ve been beating your head against the wall for five minutes, and no-one’s around to lend a second pair of eyes to your problem; you’re starting to wonder if the bug was something you introduced, or has always been there; and you can’t just log into production to test it. VM to the rescue – undo all your changes by restoring to an earlier snapshot, then see if your problem was a pre-existing issue.

6. Other Developers.

Let’s face it. Things would go a lot smoother if not for all the efforts of other developers to impede your progress by making random changes in the dev environment. Am I right? Well, with your private VM this is no longer a problem. Of course, with a private VM, if anything goes wrong, it’s incontrovertibly your fault now…

5. “Did you turn it off and on again?”

Finally, no need to nag the DBA to bounce the database server, or flush the shared pool, or in fact anything that requires more access than you’d usually get as a lowly developer. Need to increase that tablespace? Drop a couple hundred tables? No problem.

4. Real size estimates.

This works really well when you’re working with relatively small databases (i.e. where an entire copy of prod can be practically run in a VM). Run your upgrade script, which fails halfway through with a tablespace full error. Restore to snapshot, resize the appropriate datafile, re-run the upgrade. Rinse, repeat until no more “out of space” errors; now you know with a high degree of confidence how much extra space your upgrade requires, and for which datafiles.

3. Reduced down-time.

Dev server down? Being upgraded? Been appropriated by another department? No worries – your dev team can continue working, because they’ve got their VMs.

2. Did I say “Fail Safe” already?

I can’t emphasize this one enough. Also, the other side of “Other Developers” is that you are an Other Developer; and the mistakes you will, inevitably, make will never see the light of day (and draw everyone’s ire) if they’re made in your private VM.

1. Smug.

My last deployment to Test of a major release of an application executed perfectly, 100%, correct, first time. I couldn’t believe my eyes – I was so accustomed to my scripts failing for random reasons (usually from causes outside my control, natch). It was all thanks to my use of VMs to develop my fault-tolerant upgrade scripts. I was smug.

This is a comment on jagatheesh.ramakrishnan@oracle.com’s article “Generate DML/DDL/QUERY from SQL” – comments are not enabled on their blog so here’s my addendum.

The following query is offered as a means of generating a script to ONLINE all datafiles in a database:

select 'ALTER DATABASE DATAFILE '''|| name || ''' online ;'

from v$datafile;

I suggest an alternative method, which is both easier to write and easier to maintain:

select REPLACE(q'[

ALTER DATABASE DATAFILE '#NAME#' online ;

]','#NAME#',name) from v$datafile;

This way, the syntax within the DDL is unmuddied by the syntax required by the query to generate it. It’s immediately obvious that only single quotes will surround the name of the datafile in the generated DDL.

If you’re on a pre-10g database, you can still use this method, but you’ll need to revert to the old quote-escape:

select REPLACE('

ALTER DATABASE DATAFILE ''#NAME#'' online ;

','#NAME#',name) from v$datafile;

So I followed the great advice here to use the new COLLECT function in Oracle 10g to solve the common string-aggregation-in-SQL problem. I chose this solution over the others because it sorts the results as well, which was a user requirement. This is because the COLLECT function optionally takes an ORDER BY clause – even though the 10g documentation forgot to mention this. The 11g documentation was updated to include the additional syntax option, as well as the fact that COLLECT supports the DISTINCT (or UNIQUE) keyword as well to remove duplicates – testing indicates that this works in 10g as well.

This means that if I define a suitable type varchar2_ntt and a suitable function ntt_to_string, I can write a query like this:

SELECT dept,

ntt_to_string(

CAST(

COLLECT(ename ORDER BY ename)

AS varchar2_ntt)

) AS ename_list

FROM emp

GROUP BY dept;
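
The query above relies on a type and a function that aren’t shown. One possible pair of definitions is sketched below; the element size, the parameter names and the default comma delimiter are my assumptions, not part of the advice I followed.

```sql
CREATE OR REPLACE TYPE varchar2_ntt AS TABLE OF VARCHAR2(4000);
/

CREATE OR REPLACE FUNCTION ntt_to_string
  (p_ntt   IN varchar2_ntt
  ,p_delim IN VARCHAR2 := ','
  ) RETURN VARCHAR2 IS
  v_result VARCHAR2(32767);
  i        PLS_INTEGER;
BEGIN
  -- walk the collection in index order, which preserves the
  -- ordering applied by COLLECT(... ORDER BY ...)
  i := p_ntt.FIRST;
  WHILE i IS NOT NULL LOOP
    IF i > p_ntt.FIRST THEN
      v_result := v_result || p_delim;
    END IF;
    v_result := v_result || p_ntt(i);
    i := p_ntt.NEXT(i);
  END LOOP;
  RETURN v_result;
END ntt_to_string;
/
```

Bear in mind that when the function is called from SQL, the result has to fit within the SQL VARCHAR2 limit, so very long lists will raise an error.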

That works fine. But now I want to combine this with some analytic functions, like this:

SELECT DISTINCT

dept,

ntt_to_string(

CAST(

COLLECT(ename ORDER BY ename)

AS varchar2_ntt)

) AS ename_list,

FIRST_VALUE(ename)

OVER (PARTITION BY dept

ORDER BY sal DESC) AS topdog

FROM emp;

This doesn’t work because (a) the COLLECT requires a GROUP BY; and (b) with a GROUP BY in place, the analytic function can no longer reference the ungrouped columns ename and sal. What I’d expect to be able to do is use an analytic version of the COLLECT:

SELECT DISTINCT

dept,

ntt_to_string(

CAST(

COLLECT(ename

PARTITION BY dept

ORDER BY ename)

AS varchar2_ntt)

) AS ename_list,

FIRST_VALUE(ename)

OVER (PARTITION BY dept

ORDER BY sal DESC) AS topdog

FROM emp;

Us SQL developers are never satisfied, are we?

At this stage my best solution for this is to use a CTE:

WITH q AS (SELECT dept, ename, sal FROM emp)

SELECT DISTINCT

dept,

(SELECT ntt_to_string(

CAST(

COLLECT(q2.ename ORDER BY q2.ename)

AS varchar2_ntt)

)

FROM q q2

WHERE q2.dept = q.dept

) AS ename_list,

FIRST_VALUE(ename)

OVER (PARTITION BY dept

ORDER BY sal DESC) AS topdog

FROM q;

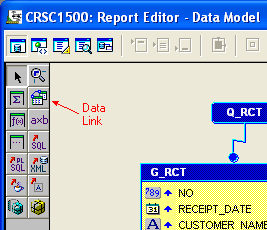

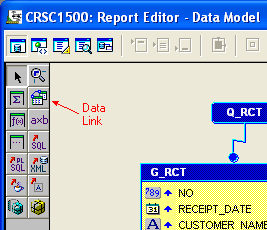

If you’re like me, the above picture will probably have given you a frisson of dread. Just a little. The example here is not a very complicated report, just a few repeating groups based on quite a nice data model. What catches me every time, however, is a few little apparent quirks in Oracle Reports – and I’ve decided to post them here so that I’ll remember them next time I have to maintain someone’s reports. I keep forgetting them because I don’t have to work with Oracle Reports very often.

(These instructions are for Oracle Reports 10g – 9.0.4.0.33)

- When you change the structure of the query, it will often change the order of the labels in the model editor – and the order is important. So, don’t forget to check that the order of the columns matches the order of the columns in the query.

- Don’t forget to add the data links between the query groups. Otherwise, you may find the report takes a looooong time to run – if the status page says something like “Generating page 557,634…” for a report you expected to fit on 1 page, this may be the cause.

- If you modify a query, you may find that some or all data links to that group will now be invalid (e.g. if you add a GROUP BY to a query that is a child group, you may find that at runtime it will simply ignore the data link entirely – leading to the dreaded million-page report). You may have to delete the data links and recreate them. To create a data link:

- Click the Data Link icon (View -> Tool Palette)

- Mouse down on the join column in the parent group

- Drag the line to the join column in the child group, and release.

If the Data Link cannot be created for some reason (e.g. because of a Group By in the child group), Reports will then create a generic “group link” – which means you need to add the join criteria manually to the WHERE clause in the child query.

- When adding a calculated field, don’t forget to change the default data type from Number to Char or Date if applicable. Otherwise, you’ll probably get something like “Invalid value for CF_BLA” at runtime.

- When editing the print layout, don’t forget to review these important modes accessible from the toolbar. In particular: Confine On / Confine Off, Flex On / Flex Off, Select Parent Frame.

For example, to move something from one parent frame to another:

- Select the object.

- Set Confine Off.

- Move the object to the desired location.

- Set Confine On.

- See if the hierarchy is now all messed up. If so, Undo and try again – make sure the object is fully within the target parent before you set Confine On.

Jeffrey Kemp

11 August 2010

UTL_FILE, unfortunately, does not allow you to query the contents of a directory.

If you’re on Oracle 11g you can use the method described here: listing files with the external table preprocessor in 11g.

If you’re on Oracle 10g another option is to create a java routine. Note: this is not my code – a colleague sent the basics to me and I just modified it somewhat to suit my purpose at the time.

CREATE OR REPLACE AND RESOLVE

JAVA SOURCE NAMED "DirectoryLister" AS

import java.io.File;

import java.util.Arrays;

public class DirectoryLister

{

public static String getFileList(String idir, String sep)

{

File aDirectory = new File(idir);

File[] filesInDir = aDirectory.listFiles();

String result = "";

// listFiles() returns null if the path is not a readable directory

if ( filesInDir == null ) return result;

for ( int i=0; i<filesInDir.length; i++ )

{

if ( filesInDir[i].isFile()

&& !filesInDir[i].isHidden() )

{

result = result + sep + filesInDir[i].getName();

}

}

return result;

}

};

/

CREATE OR REPLACE

FUNCTION dirlist_csv

(p_dir IN VARCHAR2, p_sep IN VARCHAR2) RETURN VARCHAR2

AS LANGUAGE JAVA NAME

'DirectoryLister.getFileList

(java.lang.String, java.lang.String)

return String';

/

BEGIN

DBMS_JAVA.grant_permission('USER',

'java.io.FilePermission', '<<ALL FILES>>', 'read');

DBMS_JAVA.grant_permission('USER',

'SYS:java.lang.RuntimePermission',

'writeFileDescriptor', '');

DBMS_JAVA.grant_permission('USER',

'SYS:java.lang.RuntimePermission',

'readFileDescriptor', '');

END;

/

(replace “USER” in the above commands with the user name)

CREATE OR REPLACE

FUNCTION get_path (dir IN VARCHAR2) RETURN VARCHAR2 IS

o_path ALL_DIRECTORIES.directory_path%TYPE;

BEGIN

SELECT directory_path INTO o_path

FROM ALL_DIRECTORIES d

WHERE d.directory_name = dir;

RETURN o_path;

END get_path;

/

Sample script:

DECLARE

csv VARCHAR2(32767);

filename VARCHAR2(1000);

BEGIN

csv := dirlist_csv(get_path('MYDIR'), ',');

LOOP

EXIT WHEN csv IS NULL;

filename := SUBSTR(csv, INSTR(csv, ',', -1) + 1);

dbms_output.put_line(filename);

csv := SUBSTR(csv, 1, INSTR(csv, ',', -1) - 1);

END LOOP;

END;

/

Note: If the number of files is expected to be large, it would be better to get the java program to insert the file names into a global temporary table, then query that, instead of returning the list as a CSV string.

Want to purge all the recyclebins for all users in your database?

Can’t (or don’t want to) log in as each user individually to run this, one by one:

PURGE RECYCLEBIN

You could log in as SYSDBA and run:

PURGE DBA_RECYCLEBIN

But, what if you don’t have SYSDBA access? (Yes, this has happened to me, with a client-supplied VM – they told me the SYSTEM password but not the SYS password)

You can use the PURGE TABLESPACE x USER y option – run this to generate the commands to run, then run the output as a script:

SELECT DISTINCT

REPLACE(REPLACE('PURGE TABLESPACE "#TS#" USER "#OWNER#";'

,'#TS#',ts_name)

,'#OWNER#',owner)

FROM dba_recyclebin

WHERE ts_name IS NOT NULL;

Sample output:

PURGE TABLESPACE "USER_DATA" USER "SCOTT";

PURGE TABLESPACE "USER_DATA" USER "LARRY";

WARNING: this command will result in irreversible loss of data for all users on your database.

Tested on Oracle 10gR2.
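
If you’d rather not spool and re-run a generated script, the same commands could be issued directly from an anonymous block. This is only a sketch of that variation, which I haven’t tested on 10gR2 as I did the query above; it assumes the account has the same privileges needed to run the PURGE TABLESPACE commands by hand. The same warning applies.

```sql
BEGIN
  FOR r IN (SELECT DISTINCT ts_name, owner
            FROM   dba_recyclebin
            WHERE  ts_name IS NOT NULL) LOOP
    -- build and run one PURGE command per tablespace/owner pair
    EXECUTE IMMEDIATE
      'PURGE TABLESPACE "' || r.ts_name || '" USER "' || r.owner || '"';
  END LOOP;
END;
/
```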

This is just a post to document some standard utility functions that I like to create in an Oracle Forms library, if equivalents are not already available. Suggestions or critiques are welcome.

“No warranty is express or implied about the suitability of this code for any purpose.”

This package could do with some improvements:

- use a database table type (instead of one defined in the forms package) so that we can just send it straight to the database package

- perhaps a “level” for each log message (Debug/Info/Warning/Error/Fatal) plus the ability to switch the level up or down for each session

Database Objects

CREATE TABLE DEBUG_LOG

(

log_id NUMBER(12,0) NOT NULL

,ts TIMESTAMP NOT NULL

,text VARCHAR2(4000)

,sid NUMBER

DEFAULT SYS_CONTEXT('USERENV','SID')

,sessionid NUMBER

DEFAULT SYS_CONTEXT('USERENV','SESSIONID')

,module VARCHAR2(48)

DEFAULT SYS_CONTEXT('USERENV','MODULE')

,action VARCHAR2(32)

DEFAULT SYS_CONTEXT('USERENV','ACTION')

,client_info VARCHAR2(64)

DEFAULT SYS_CONTEXT('USERENV','CLIENT_INFO')

,username VARCHAR2(30)

DEFAULT SYS_CONTEXT('USERENV','SESSION_USER')

);

CREATE SEQUENCE DEBUG_LOG_SEQ;

CREATE OR REPLACE PACKAGE DB_FORMS_DEBUG IS

TYPE logtable_type IS TABLE OF VARCHAR2(4000)

INDEX BY BINARY_INTEGER;

TYPE date_table_type IS TABLE OF DATE

INDEX BY BINARY_INTEGER;

PROCEDURE msg

(text IN VARCHAR2

,ts IN TIMESTAMP := NULL);

PROCEDURE insert_form_debug_log

(logtable IN logtable_type

,date_table IN date_table_type

,username IN VARCHAR2

,module IN VARCHAR2

);

END DB_FORMS_DEBUG;

/

CREATE OR REPLACE PACKAGE BODY DB_FORMS_DEBUG IS

PROCEDURE msg

(text IN VARCHAR2

,ts IN TIMESTAMP := NULL) IS

PRAGMA AUTONOMOUS_TRANSACTION;

BEGIN

-- one row per 4000-character chunk (a single row if text is NULL)

FOR i IN 0 .. TRUNC((NVL(LENGTH(text), 1) - 1) / 4000) LOOP

INSERT INTO DEBUG_LOG

(log_id

,ts

,text)

VALUES

(DEBUG_LOG_SEQ.NEXTVAL

,NVL(ts, SYSTIMESTAMP)

,SUBSTR(text, i * 4000 + 1, 4000));

END LOOP;

COMMIT;

END msg;

PROCEDURE insert_form_debug_log

(logtable IN logtable_type

,date_table IN date_table_type

,username IN VARCHAR2

,module IN VARCHAR2

) IS

PRAGMA AUTONOMOUS_TRANSACTION;

BEGIN

FORALL i IN logtable.FIRST..logtable.LAST

INSERT INTO DEBUG_LOG

(log_id

,ts

,text

,module

,username)

VALUES

(DEBUG_LOG_SEQ.NEXTVAL

,date_table(i)

,logtable(i)

,insert_form_debug_log.module

,insert_form_debug_log.username);

COMMIT;

END insert_form_debug_log;

END DB_FORMS_DEBUG;

/
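
The database side can be tried on its own before any Forms code exists. A minimal sketch, assuming the objects above have been created; the message text and the one-hour window are arbitrary.

```sql
BEGIN
  -- msg runs as an autonomous transaction, so the row is committed
  -- independently of whatever the calling session is doing
  DB_FORMS_DEBUG.msg('testing the debug log');  -- arbitrary message text
END;
/

-- sid, sessionid, module etc. are filled in by the column defaults
SELECT log_id, ts, username, module, text
FROM   debug_log
WHERE  ts > SYSTIMESTAMP - INTERVAL '1' HOUR
ORDER  BY log_id;
```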

Form Package Specification

PACKAGE PKG_DEBUG IS

-- store the log contents in a database table, then reset the log

PROCEDURE flush_to_table;

-- debug log message

PROCEDURE msg (text IN VARCHAR2);

-- spit out debug info when an unexpected error occurs

PROCEDURE on_error;

-- spit out debug info when a message is issued to the user

PROCEDURE on_message;

--popup a window showing the contents of the debug log

PROCEDURE show_log;

-- log current useful status info

PROCEDURE status;

END PKG_DEBUG;

Form Package Body

PACKAGE BODY PKG_DEBUG IS

-- the debug log is a circular buffer of debug log messages

TYPE debug_log_table_type IS TABLE OF VARCHAR2(4000) INDEX BY BINARY_INTEGER;

TYPE log_ts_table_type IS TABLE OF DATE INDEX BY BINARY_INTEGER;

con_debug_log_size CONSTANT PLS_INTEGER := 200;

debug_log_table debug_log_table_type;

log_ts_table log_ts_table_type;

debug_log_pos PLS_INTEGER;

PROCEDURE flush_to_table IS

-- send the debug log buffer to database table DEBUG_LOG

c INTEGER := 0;

i INTEGER := 1;

logtable DB_FORMS_DEBUG.logtable_type;

ts_table DB_FORMS_DEBUG.date_table_type;

BEGIN

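-- nothing to send if no messages have been logged

IF debug_log_pos IS NOT NULL THEN

-- start at the oldest entry: the slot after the current position

-- if the buffer has wrapped, otherwise slot 1

i := MOD(debug_log_pos + 1, con_debug_log_size);

IF NOT debug_log_table.EXISTS(i) THEN

i := 1;

END IF;

LOOP

c := c + 1;

logtable(c) := debug_log_table(i);

ts_table(c) := log_ts_table(i);

-- stop once the most recent entry has been copied

EXIT WHEN i = debug_log_pos;

-- advance, wrapping the same way msg does (slots 0 .. size-1)

i := MOD(i + 1, con_debug_log_size);

END LOOP;

END IF;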

IF logtable.COUNT > 0 THEN

--this log message will only survive if the db call fails

DB_FORMS_DEBUG.insert_form_debug_log

(logtable => logtable

,date_table => ts_table

,module => NAME_IN('SYSTEM.CURRENT_FORM')

,username => [user name/id...]);

debug_log_table.DELETE;

log_ts_table.DELETE;

debug_log_pos := NULL;

END IF;

END flush_to_table;

PROCEDURE msg (text IN VARCHAR2) IS

-- add debug log message to the scrolling log

BEGIN

debug_log_pos := MOD(NVL(debug_log_pos,0) + 1, con_debug_log_size);

debug_log_table(debug_log_pos) := SUBSTR(text,1,4000);

log_ts_table(debug_log_pos) := PKG_FORM.system_datetime;

END msg;

PROCEDURE on_error IS

-- Catch-all error handler for unexpected errors.

BEGIN

-- This should only ever be called when an *unexpected* error occurs.

--

-- If an error is expected in some circumstance, it should be handled (e.g. by putting

-- code in the on-error trigger to do something intelligent instead of calling this

-- catch-all procedure).

--

msg('DBMS ERROR : ' || DBMS_ERROR_CODE || ' ' || RTRIM(DBMS_ERROR_TEXT,CHR(10)));

status;

flush_to_table; -- so that support personnel may investigate

PKG_MESSAGE.error(ERROR_TYPE || '-' || ERROR_CODE || ' ' || ERROR_TEXT, 'System Error ' || ERROR_TYPE || '-' || ERROR_CODE);

END on_error;

PROCEDURE on_message IS

BEGIN

status;

MESSAGE(SUBSTR(MESSAGE_TYPE || '-' || MESSAGE_CODE || ' ' || MESSAGE_TEXT, 1, 200), ACKNOWLEDGE);

END on_message;

PROCEDURE show_log IS

i INTEGER := debug_log_pos;

BEGIN

-- fill the onscreen item CONTROL.DEBUGLOG starting

-- with the most recent debug line, and go backwards until

-- it is filled or there are no more debug log messages to show

-- It would be better for this to be done in a separate

-- form specifically for this purpose, to avoid problems

-- when we want to show the debug log without

-- firing validation triggers.

:CONTROL.debuglog := NULL;

LOOP

EXIT WHEN i IS NULL;

-- "64K should be enough for everybody"

IF :CONTROL.debuglog IS NOT NULL THEN

:CONTROL.debuglog := SUBSTR(CHR(10) || :CONTROL.debuglog, 1, 65534);

EXIT WHEN LENGTH(:CONTROL.debuglog) = 65534;

END IF;

:CONTROL.debuglog := SUBSTR(

TO_CHAR(i,'FM0000')

|| ' ' || TO_CHAR(log_ts_table(i),'HH24:MI:SS')

|| ' ' || debug_log_table(i)

|| :CONTROL.debuglog

, 1, 65534);

EXIT WHEN LENGTH(:CONTROL.debuglog) = 65534;

i := i - 1;

--wrap to the top of the buffer

IF i < 0 THEN

i := con_debug_log_size - 1;

END IF;

EXIT WHEN i = debug_log_pos OR NOT debug_log_table.EXISTS(i);

END LOOP;

GO_ITEM('CONTROL.debuglog');

check_package_failure;

END show_log;

PROCEDURE status IS

BEGIN

msg('FORM STATUS : ' || :SYSTEM.FORM_STATUS);

msg('RECORD STATUS : ' || :SYSTEM.RECORD_STATUS);

msg('TRIGGER ITEM : ' || NVL(:SYSTEM.TRIGGER_ITEM, :SYSTEM.TRIGGER_BLOCK)

|| ' RECORD #' || :SYSTEM.TRIGGER_RECORD);

msg('CURSOR ITEM : ' || :SYSTEM.CURSOR_ITEM

|| ' RECORD #' || :SYSTEM.CURSOR_RECORD);

END status;

END PKG_DEBUG;
