Some time back, Connor rightly pointed out that triggers that modify data can get in the way when you need to do out-of-the-ordinary data maintenance, e.g. when you need to fix up a row here or re-insert a row over there. You can’t simply disable the trigger: that affects every session, so you would have to make your users suffer down-time while you do it.


Now, the only purpose for which I use triggers is to do common things like setting audit columns and incrementing a VERSION_ID column, and in certain special cases for carefully implementing cross-row constraints; also, I use them to populate a journal table with all changes to the table. Mind you, in recent times features have been added and improved in the Oracle database (such as Flashback Query and Flashback Data Archive) to the point where I’m almost ready to stop doing this. However, there are still some minor use-cases where having a separate “journal” table can be useful. Any argument about that assertion is ruled “out of scope” for this article! 🙂

So, assuming we’re sticking with triggers that might change data, a solution to this problem is already built into the journal triggers and Table APIs (TAPI) that my PL/SQL code generator creates. This allows me to disable the trigger on any table, just for my current session, without affecting any other concurrent activity and with no DDL required.

UPDATED 16/2/2016: now uses a context variable (thanks Connor for the idea)

In the trigger I have this code:

create or replace TRIGGER EMPS$TRG

FOR INSERT OR UPDATE OR DELETE ON EMPS

COMPOUND TRIGGER

BEFORE EACH ROW IS

BEGIN

IF SYS_CONTEXT('SAMPLE_CTX','EMPS$TRG') IS NULL THEN

...etc...

END IF;

END BEFORE EACH ROW;

AFTER EACH ROW IS

BEGIN

IF SYS_CONTEXT('SAMPLE_CTX','EMPS$TRG') IS NULL THEN

...etc...

END IF;

END AFTER EACH ROW;

END EMPS$TRG;

The trigger takes advantage of some extra code that is generated in the Table API:

create or replace PACKAGE EMPS$TAPI AS

/***********************************************

Table API for emps

10-FEB-2016 - Generated by SAMPLE

***********************************************/

...

-- Use these procedures to disable and re-enable the

-- journal trigger just for this session (to disable for

-- all sessions, just disable the database trigger

-- instead).

PROCEDURE disable_journal_trigger;

PROCEDURE enable_journal_trigger;

END EMPS$TAPI;

The package body code is quite simple:

create or replace PACKAGE BODY EMPS$TAPI AS

/***********************************************

Table API for emps

10-FEB-2016 - Generated by SAMPLE

***********************************************/

...

-- may be used to disable and re-enable the journal trigger for this session

PROCEDURE disable_journal_trigger IS

BEGIN

log_start('disable_journal_trigger');

SECURITY.disable_journal_trigger('EMPS$TRG');

log_end;

EXCEPTION

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END disable_journal_trigger;

PROCEDURE enable_journal_trigger IS

BEGIN

log_start('enable_journal_trigger');

SECURITY.enable_journal_trigger('EMPS$TRG');

log_end;

EXCEPTION

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END enable_journal_trigger;

END EMPS$TAPI;

A context variable is set with the name of the trigger to disable it – the default state for a new session (i.e. the context variable not set) means the trigger is enabled.

create or replace PACKAGE BODY SECURITY AS

...

PROCEDURE disable_journal_trigger

(trigger_name IN VARCHAR2

,client_id IN VARCHAR2 := NULL) IS

BEGIN

-- set the context to any non-null value

DBMS_SESSION.set_context

(namespace => 'SAMPLE_CTX'

,attribute => trigger_name

,value => 'DISABLED'

,client_id => NVL(client_id, SYS_CONTEXT('USERENV','CLIENT_IDENTIFIER')));

END disable_journal_trigger;

PROCEDURE enable_journal_trigger

(trigger_name IN VARCHAR2

,client_id IN VARCHAR2 := NULL) IS

BEGIN

-- clear the context

DBMS_SESSION.clear_context

(namespace => 'SAMPLE_CTX'

,attribute => trigger_name

,client_id => NVL(client_id, SYS_CONTEXT('USERENV','CLIENT_IDENTIFIER')));

END enable_journal_trigger;

END SECURITY;
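For the SECURITY package to be allowed to set values in SAMPLE_CTX, the context has to be created with that package named as its trusted package. That DDL isn't shown above, so the following is only a sketch under assumptions: the ACCESSED GLOBALLY clause is inferred from the client_id arguments passed to DBMS_SESSION, and a session-local context would simply omit it.

```sql
-- Assumption: run once by a user with the CREATE ANY CONTEXT privilege.
-- ACCESSED GLOBALLY is a guess based on the client_id parameters used
-- by SECURITY; leave the clause off for a session-local context.
CREATE OR REPLACE CONTEXT sample_ctx USING security ACCESSED GLOBALLY;

-- Quick check from the current session: NULL means the trigger logic
-- will run; any non-null value (e.g. 'DISABLED') means it is skipped.
SELECT SYS_CONTEXT('SAMPLE_CTX', 'EMPS$TRG') AS emps_trg_state
FROM   dual;
```

The query is the same test the trigger performs in its BEFORE EACH ROW and AFTER EACH ROW sections, so it is a handy way to confirm the state before starting any maintenance.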

So now, to run some data maintenance, I can simply call the TAPI to disable, then re-enable, the trigger:

BEGIN EMPS$TAPI.disable_journal_trigger; END;

/

... do the data maintenance...

BEGIN EMPS$TAPI.enable_journal_trigger; END;

/

Unless the data maintenance is doing something very unusual, this script should be safe to run while the system is still up and running for users.

Also, it would be a trivial exercise to write a routine which disables or enables all the journal triggers at once.
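A minimal sketch of such a routine, assuming every journal trigger lives in the current schema and follows the TABLE$TRG naming convention shown above, and that SECURITY.disable_journal_trigger is exposed in the package spec (the generated TAPIs call it, so it presumably is):

```sql
BEGIN
  -- Assumption: all journal triggers are named <table>$TRG.
  -- '$' is not a LIKE wildcard, so no ESCAPE clause is needed.
  FOR t IN (SELECT trigger_name
            FROM   user_triggers
            WHERE  trigger_name LIKE '%$TRG'
            ORDER  BY trigger_name)
  LOOP
    -- Sets the context attribute named after the trigger for this session.
    SECURITY.disable_journal_trigger(trigger_name => t.trigger_name);
  END LOOP;
END;
/
```

Swapping the call for SECURITY.enable_journal_trigger gives the matching routine to switch them all back on.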

The point of this, of course, is that you should be able to do all this sort of thing without writing a lot of code for each table in your schema – solve it for one table, and then generate the code for all your tables.

Source code/download: http://bitbucket.org/jk64/jk64-sample-apex-tapi

If you create an APEX form based on a table, APEX automatically creates processes of type Automatic Row Fetch and Automatic Row Processing (DML) as well as one item for each column in the table, each bound to the database column via its Source Type. This design is excellent as it’s fully declarative and makes it very quick and easy to build a data entry page for each of your tables.

The downside to this approach is that if you want to use a Table API (TAPI) to encapsulate all DML activity on your tables, you need to write a whole lot of code to replace the processes that Apex created for you. In order to mitigate this as much as possible, I’ve augmented my code generator with an “APEX API” generator. This generates a second package for each table which can be called from APEX, which in turn calls the TAPI to run the actual DML. In addition, the validations that are performed by the TAPI are translated back into APEX Errors so that they are rendered in much the same way as built-in APEX validations.

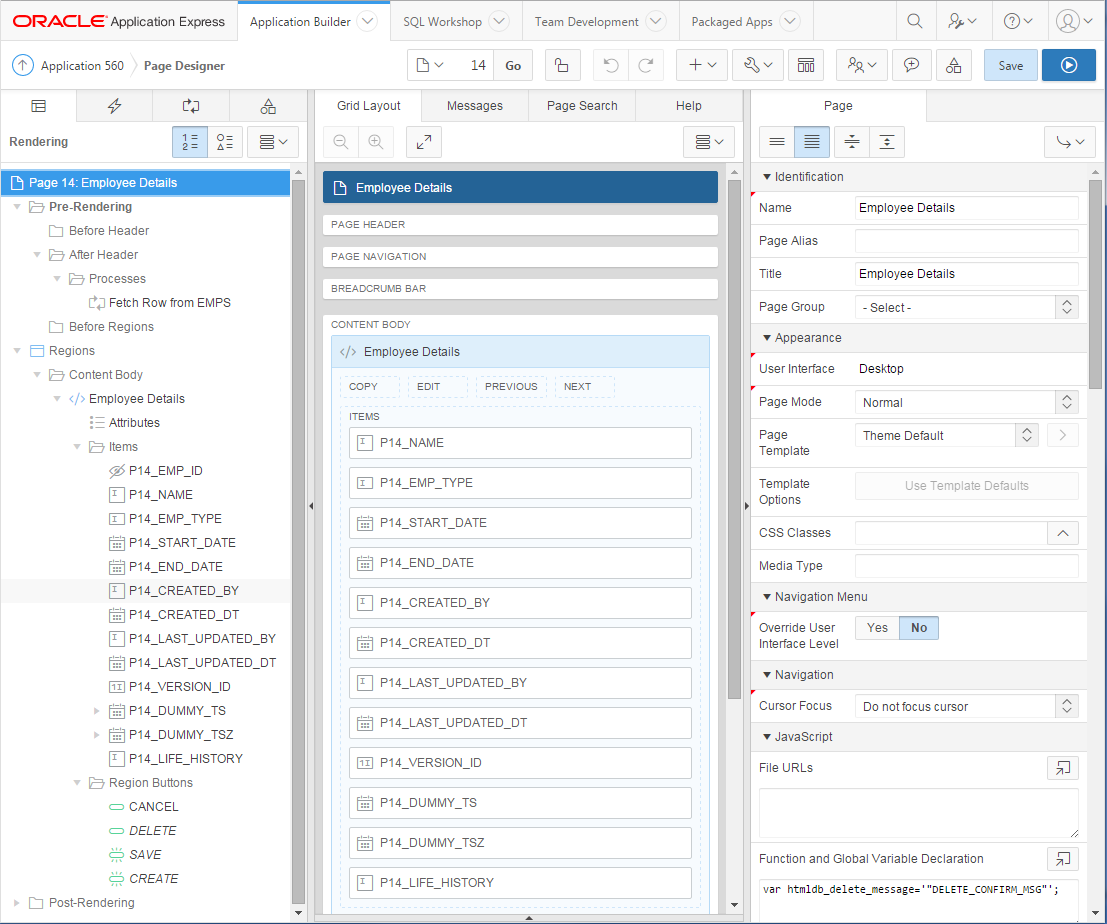

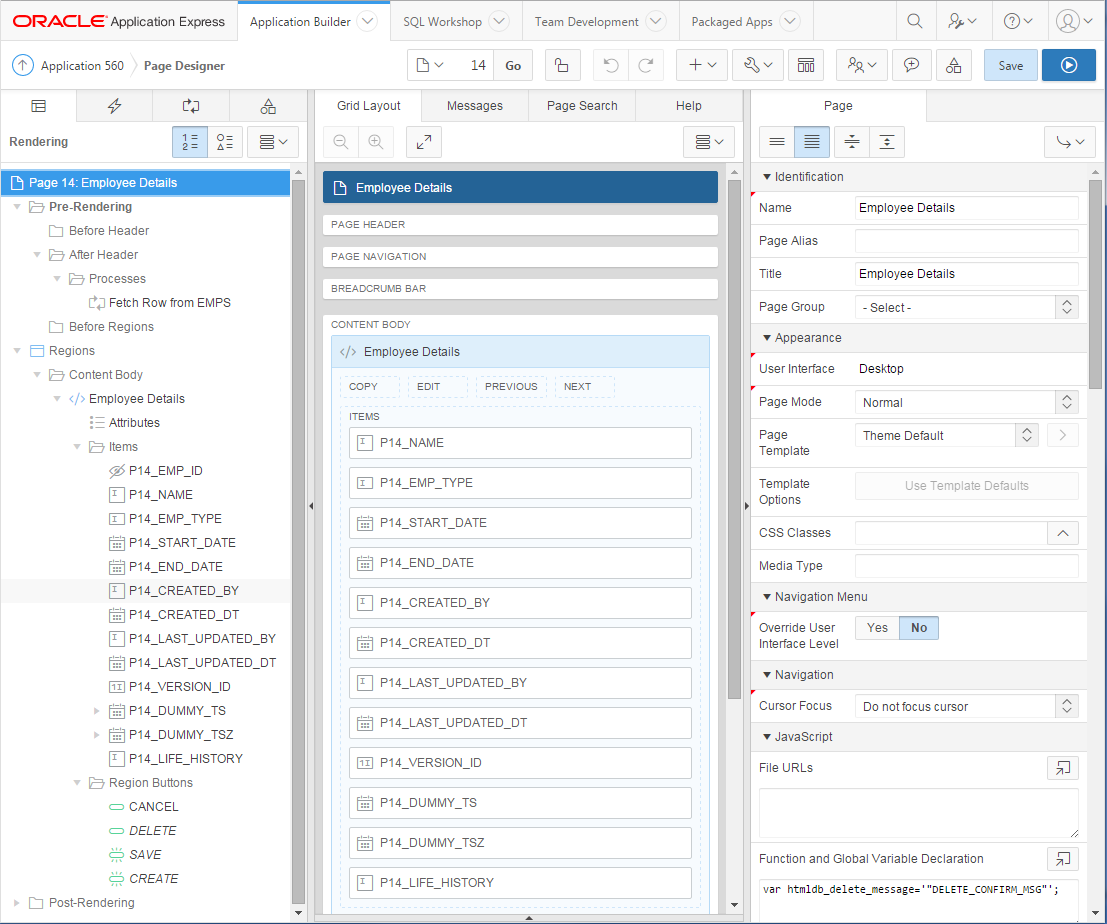

Probably the best way to explain this is to show an example. Here’s my EMPS table (same as from my last article):

CREATE TABLE emps

(emp_id NUMBER NOT NULL

,name VARCHAR2(100 CHAR) NOT NULL

,emp_type VARCHAR2(20 CHAR) DEFAULT 'SALARIED' NOT NULL

,start_date DATE NOT NULL

,end_date DATE

,dummy_ts TIMESTAMP(6)

,dummy_tsz TIMESTAMP(6) WITH TIME ZONE

,life_history CLOB

,CONSTRAINT emps_pk PRIMARY KEY ( emp_id )

,CONSTRAINT emps_name_uk UNIQUE ( name )

,CONSTRAINT emp_type_ck

CHECK ( emp_type IN ('SALARIED','CONTRACTOR') )

);

CREATE SEQUENCE emp_id_seq;

By the way, my table creation script calls DEPLOY.create_table to do this, which automatically adds my standard audit columns to the table – CREATED_BY, CREATED_DT, LAST_UPDATED_BY, LAST_UPDATED_DT, and VERSION_ID. My script also calls GENERATE.journal for the table which creates a journal table (EMPS$JN) and a trigger (EMPS$TRG) to log all DML activity against the table.

I then call GENERATE.tapi which creates the Table API (EMPS$TAPI) which has routines for validating, inserting, updating and deleting rows (or arrays of rows using bulk binds) of the EMPS table.

Finally, I call GENERATE.apexapi which creates the APEX API (EMPS$APEX) which looks like this:

Package Spec: EMPS$APEX

create or replace PACKAGE EMPS$APEX AS

/**************************************************

Apex API for emps

10-FEB-2016 - Generated by SAMPLE

**************************************************/

-- page load process

PROCEDURE load;

-- single-record page validation

PROCEDURE val;

-- page submit process

PROCEDURE process;

END EMPS$APEX;

Notice that these routines require no parameters; the API gets all the data it needs directly from APEX.

Package Body: EMPS$APEX

create or replace PACKAGE BODY EMPS$APEX AS

/*******************************************************************************

Apex API for emps

10-FEB-2016 - Generated by SAMPLE

*******************************************************************************/

PROCEDURE apex_set (r IN EMPS$TAPI.rowtype) IS

p VARCHAR2(10) := 'P' || UTIL.apex_page_id || '_';

BEGIN

log_start('apex_set');

sv(p||'EMP_ID', r.emp_id);

sv(p||'NAME', r.name);

sv(p||'EMP_TYPE', r.emp_type);

sd(p||'START_DATE', r.start_date);

sd(p||'END_DATE', r.end_date);

st(p||'DUMMY_TSZ', r.dummy_tsz);

st(p||'DUMMY_TS', r.dummy_ts);

sv(p||'CREATED_BY', r.created_by);

sd(p||'CREATED_DT', r.created_dt);

sv(p||'LAST_UPDATED_BY', r.last_updated_by);

sd(p||'LAST_UPDATED_DT', r.last_updated_dt);

sv(p||'VERSION_ID', r.version_id);

log_end;

EXCEPTION

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END apex_set;

FUNCTION apex_get RETURN EMPS$TAPI.rvtype IS

p VARCHAR2(10) := 'P' || UTIL.apex_page_id || '_';

rv EMPS$TAPI.rvtype;

BEGIN

log_start('apex_get');

rv.emp_id := nv(p||'EMP_ID');

rv.name := v(p||'NAME');

rv.emp_type := v(p||'EMP_TYPE');

rv.start_date := v(p||'START_DATE');

rv.end_date := v(p||'END_DATE');

rv.dummy_tsz := v(p||'DUMMY_TSZ');

rv.dummy_ts := v(p||'DUMMY_TS');

rv.version_id := nv(p||'VERSION_ID');

log_end;

RETURN rv;

EXCEPTION

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END apex_get;

FUNCTION apex_get_pk RETURN EMPS$TAPI.rvtype IS

p VARCHAR2(10) := 'P' || UTIL.apex_page_id || '_';

rv EMPS$TAPI.rvtype;

BEGIN

log_start('apex_get_pk');

IF APEX_APPLICATION.g_request = 'COPY' THEN

rv.emp_id := v(p||'COPY_EMP_ID');

ELSE

rv.emp_id := nv(p||'EMP_ID');

rv.version_id := nv(p||'VERSION_ID');

END IF;

log_end;

RETURN rv;

EXCEPTION

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END apex_get_pk;

/*******************************************************************************

PUBLIC INTERFACE

*******************************************************************************/

PROCEDURE load IS

p VARCHAR2(10) := 'P' || UTIL.apex_page_id || '_';

rv EMPS$TAPI.rvtype;

r EMPS$TAPI.rowtype;

BEGIN

log_start('load');

UTIL.check_authorization('Reporting');

rv := apex_get_pk;

r := EMPS$TAPI.get (emp_id => rv.emp_id);

IF APEX_APPLICATION.g_request = 'COPY' THEN

r := EMPS$TAPI.copy(r);

END IF;

apex_set (r => r);

log_end;

EXCEPTION

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END load;

PROCEDURE val IS

p VARCHAR2(10) := 'P' || UTIL.apex_page_id || '_';

rv EMPS$TAPI.rvtype;

dummy VARCHAR2(32767);

item_name_map UTIL.str_map;

BEGIN

log_start('val');

IF APEX_APPLICATION.g_request = 'CREATE'

OR APEX_APPLICATION.g_request LIKE 'SAVE%' THEN

rv := apex_get;

UTIL.pre_val

(label_map => EMPS$TAPI.label_map

,item_name_map => item_name_map);

dummy := EMPS$TAPI.val (rv => rv);

UTIL.post_val;

END IF;

log_end;

EXCEPTION

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END val;

PROCEDURE process IS

p VARCHAR2(10) := 'P' || UTIL.apex_page_id || '_';

rv EMPS$TAPI.rvtype;

r EMPS$TAPI.rowtype;

BEGIN

log_start('process');

UTIL.check_authorization('Operator');

CASE

WHEN APEX_APPLICATION.g_request = 'CREATE' THEN

rv := apex_get;

r := EMPS$TAPI.ins (rv => rv);

apex_set (r => r);

UTIL.success('Emp created.');

WHEN APEX_APPLICATION.g_request LIKE 'SAVE%' THEN

rv := apex_get;

r := EMPS$TAPI.upd (rv => rv);

apex_set (r => r);

UTIL.success('Emp updated.'

|| CASE WHEN APEX_APPLICATION.g_request = 'SAVE_COPY'

THEN ' Ready to create new emp.'

END);

WHEN APEX_APPLICATION.g_request = 'DELETE' THEN

rv := apex_get_pk;

EMPS$TAPI.del (rv => rv);

UTIL.clear_page_cache;

UTIL.success('Emp deleted.');

END CASE;

log_end;

EXCEPTION

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END process;

END EMPS$APEX;

Now, given the above package, we can create an APEX page that allows users to view, create, update, copy and delete a record from the EMPS table, using all the features provided by our TAPI.

- Create Page, select Form, select Form on a Table or view, select the table EMPS.

- Accept the defaults, or change them to taste, and click Next, Next.

- On the Primary Key wizard step, change type to Select Primary Key Column(s) and it should pick up the EMP_ID column automatically. Click Next.*

- For Source Type, leave the default (Existing trigger).** Click Next, Next, Next.

- For Branching, enter page numbers as required. Click Next, then Create.

* the APEX API and Table API generator also handles tables with no surrogate key by using ROWID instead; in this case, you would leave the default option selected (Managed by Database (ROWID)) here.

** note however that our TAPI will handle the sequence generation, not a trigger.

The page should look something like this:

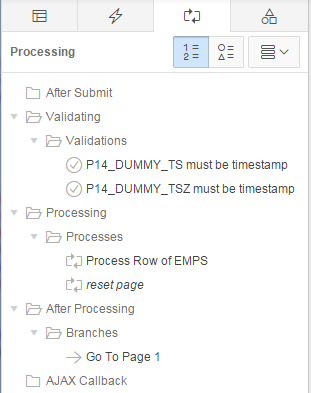

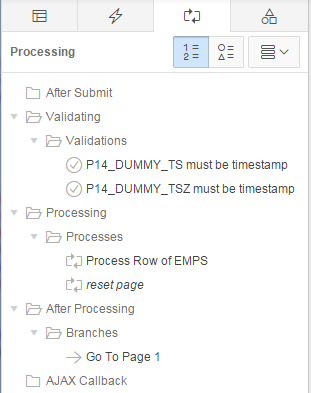

Notice that it has created a Fetch Row from EMPS process for when the page is loaded, as well as the Process Row of EMPS and reset page processes for when the page is submitted. It has also created a few validations.

Notice also that all the items are named consistently with the column names; this is important as my APEX API package generator relies on this one-to-one mapping. You can, of course, add additional non-database items to the page – they won’t be affected by the generator unless the table is altered with columns that match.

Now, this page will work fine, except that it bypasses our TAPI. To change the page so that it uses our TAPI instead, edit the page as follows:

- Delete all the Fetch Row from EMPS, Process Row of EMPS and reset page processes.

- Delete all the validations.

- For all the page items, set Source Type to Null. In Apex 5 this is easy – just Ctrl+Click each item, then make the change to all of them in one step!

- Make the audit column items (CREATED_BY, CREATED_DT, LAST_UPDATED_BY, LAST_UPDATED_DT) Display Only.

- Make the VERSION_ID item Hidden.

- Under Pre-Rendering, add an After Header process that calls EMPS$APEX.load;.

- In the Page Processing tab, under Validating, add a validation with Type = PL/SQL Function (returning Error Text).

- Set the PL/SQL Function Body Returning Error Text to EMPS$APEX.val; RETURN null;.

- Set Error Message to “bla” (this is a mandatory field but is never used – I think this is a small bug in Apex 5).

- Under Processing, add a process that calls EMPS$APEX.process;.

- Set Error Message to #SQLERRM_TEXT#.

Run the page – you should find that it works just as well as before, with all the TAPI goodness working behind the scenes. Even the validations work, and they will point at the right items on the page.

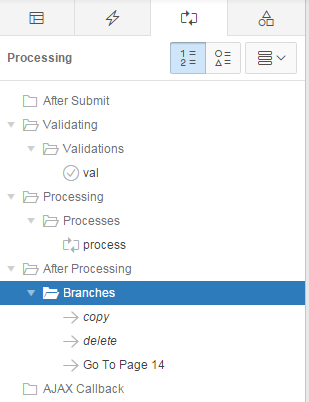

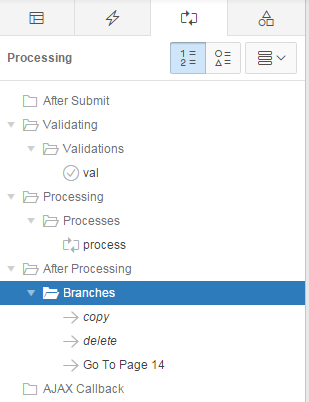

But that’s not all! You can easily add a useful “Copy” function that your users will thank you for because (depending on the use case) it can reduce the amount of typing they have to do.

- Add a button to the region, named SAVE_COPY (this name is important) with the label Copy. Tip: if you want an icon set the Icon CSS Classes to fa-copy.

- Add a hidden item named after the PK item prefixed with “COPY_”, e.g. P14_COPY_EMP_ID.

- Under After Processing, add a Branch that goes to this same page (e.g. 14, in this example).

- On the branch, set Request (under Advanced) to COPY and assign &P14_EMP_ID. to the item P14_COPY_EMP_ID.

- Set When Button Pressed to SAVE_COPY.

- Change the order of the branches so that the Copy branch is evaluated before the other branches (see below)

Now, when they click Copy, the page will first save any changes they had made to the record, then go back to the same page with a copy of all the details from the original record. The user can then edit the new record and Create it if they so desire, or Cancel.

An advantage of this design is that, if you want to add a validation that applies whether someone is updating the table from APEX or from some other UI or interface, you can add it in one place – the TAPI (specifically, you would add it to the TAPI template). If you add a column, just add an item to the APEX page and regenerate the TAPI and Apex API. It’s a nice DRY-compliant solution.

Addendum: you may be wondering why we need a P14_COPY_EMP_ID item, instead of simply reusing the P14_EMP_ID item that’s already there. The reason for this is that after saving a copied record, in some cases we may want to copy some or all the child records from the original record to the copy, or do some other operation that needs both the old and the new ID.

Source code/download: https://github.com/jeffreykemp/jk64-sample-apex-xapi

The last few weeks I’ve made quite a few improvements to my TAPI generator which I thought I’d share. I’ve also added an Apex API generator which generates code suitable for interfacing between simple Apex applications and my TAPIs. This reduces the volume of PL/SQL required within Apex to a bare minimum.


- Templates are now defined in a package spec, so they are easier to edit in a tool with syntax highlighting (more or less)

- Most dynamic code generation is defined within the template using a simple syntax

- Makes inferences from schema metadata to generate code, including some guesses based on object and column naming conventions.

- Ability to insert table-specific code into the template so that it is retained after re-generating the TAPI.

- As far as possible, the generated code follows my preferred code formatting rules.

- The Table API (“TAPI”) package defines two record types; one (rowtype) is based on the table, the other (rvtype) uses mostly VARCHAR2(4000) columns in order to hold a pre-validated record.

Assumptions

My generator makes the following assumptions:

- All tables and columns are named non-case-sensitive, i.e. no double-quote delimiters required.

- (APEX API) All column names are at most 26 characters long (in order to accommodate the Apex “P99_…” naming convention)

- (APEX API) Table has no more than 1 CLOB, 1 BLOB and 1 XMLTYPE column (in order to support conversion to/from Apex collections)

If any of the above do not hold true, the TAPI will probably need to be manually adjusted to work. All TAPIs generated should be reviewed prior to use anyway.

Example

For example, given the following schema:

CREATE TABLE emps

(emp_id NUMBER NOT NULL

,name VARCHAR2(100 CHAR) NOT NULL

,emp_type VARCHAR2(20 CHAR) DEFAULT 'SALARIED' NOT NULL

,start_date DATE NOT NULL

,end_date DATE

,dummy_ts TIMESTAMP(6)

,dummy_tsz TIMESTAMP(6) WITH TIME ZONE

,life_history CLOB

,CONSTRAINT emps_pk PRIMARY KEY ( emp_id )

,CONSTRAINT emps_name_uk UNIQUE ( name )

,CONSTRAINT emp_type_ck

CHECK ( emp_type IN ('SALARIED','CONTRACTOR') )

);

CREATE SEQUENCE emp_id_seq;

I can run this:

BEGIN GENERATE.tapi('emps'); END;

/

This generates the following package (I’ve removed large portions, the full version is linked below):

create or replace PACKAGE EMPS$TAPI AS

/**********************************************************

Table API for emps

10-FEB-2016 - Generated by SAMPLE

**********************************************************/

SUBTYPE rowtype IS emps%ROWTYPE;

TYPE arraytype IS TABLE OF rowtype INDEX BY BINARY_INTEGER;

TYPE rvtype IS RECORD

(emp_id emps.emp_id%TYPE

,name VARCHAR2(4000)

,emp_type VARCHAR2(4000)

,start_date VARCHAR2(4000)

,end_date VARCHAR2(4000)

,dummy_ts VARCHAR2(4000)

,dummy_tsz VARCHAR2(4000)

,life_history emps.life_history%TYPE

,version_id emps.version_id%TYPE

);

TYPE rvarraytype IS TABLE OF rvtype INDEX BY BINARY_INTEGER;

-- validate the row (returns an error message if invalid)

FUNCTION val (rv IN rvtype) RETURN VARCHAR2;

-- insert a row

FUNCTION ins (rv IN rvtype) RETURN rowtype;

-- insert multiple rows, array may be sparse

-- returns no. records inserted

FUNCTION bulk_ins (arr IN rvarraytype) RETURN NUMBER;

$if false $then/*need to grant DBMS_CRYPTO*/

-- generate a hash for the record

FUNCTION hash (r IN rowtype) RETURN VARCHAR2;

$end

...

END EMPS$TAPI;

create or replace PACKAGE BODY EMPS$TAPI AS

/**********************************************************

Table API for emps

10-FEB-2016 - Generated by SAMPLE

**********************************************************/

FUNCTION val (rv IN rvtype) RETURN VARCHAR2 IS

-- Validates the record but without reference to any other rows or tables

-- (i.e. avoid any queries in here).

-- Unique and referential integrity should be validated via suitable db

-- constraints (violations will be raised when the ins/upd/del is attempted).

-- Complex cross-record validations should usually be performed by a XAPI

-- prior to the call to the TAPI.

BEGIN

log_start('val');

UTIL.val_not_null (val => rv.name, column_name => 'NAME');

UTIL.val_not_null (val => rv.emp_type, column_name => 'EMP_TYPE');

UTIL.val_not_null (val => rv.start_date, column_name => 'START_DATE');

UTIL.val_max_len (val => rv.name, len => 100, column_name => 'NAME');

UTIL.val_max_len (val => rv.emp_type, len => 20, column_name => 'EMP_TYPE');

UTIL.val_date (val => rv.start_date, column_name => 'START_DATE');

UTIL.val_date (val => rv.end_date, column_name => 'END_DATE');

UTIL.val_timestamp (val => rv.dummy_ts, column_name => 'DUMMY_TS');

UTIL.val_timestamp_tz (val => rv.dummy_tsz, column_name => 'DUMMY_TSZ');

--TODO: add more validations if necessary

log_end;

RETURN UTIL.first_error;

EXCEPTION

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END val;

FUNCTION ins (rv IN rvtype) RETURN rowtype IS

r rowtype;

error_msg VARCHAR2(32767);

BEGIN

log_start('ins');

error_msg := val (rv => rv);

IF error_msg IS NOT NULL THEN

raise_error(error_msg);

END IF;

INSERT INTO emps

(emp_id

,name

,emp_type

,start_date

,end_date

,dummy_ts

,dummy_tsz

,life_history)

VALUES(emp_id_seq.NEXTVAL

,rv.name

,rv.emp_type

,UTIL.date_val(rv.start_date)

,UTIL.date_val(rv.end_date)

,UTIL.timestamp_val(rv.dummy_ts)

,UTIL.timestamp_tz_val(rv.dummy_tsz)

,rv.life_history)

RETURNING

emp_id

,name

,emp_type

,start_date

,end_date

,dummy_ts

,dummy_tsz

,life_history

,created_by

,created_dt

,last_updated_by

,last_updated_dt

,version_id

INTO r.emp_id

,r.name

,r.emp_type

,r.start_date

,r.end_date

,r.dummy_ts

,r.dummy_tsz

,r.life_history

,r.created_by

,r.created_dt

,r.last_updated_by

,r.last_updated_dt

,r.version_id;

msg('INSERT emps: ' || SQL%ROWCOUNT);

log_end;

RETURN r;

EXCEPTION

WHEN DUP_VAL_ON_INDEX THEN

UTIL.raise_dup_val_on_index;

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END ins;

FUNCTION bulk_ins (arr IN rvarraytype) RETURN NUMBER IS

rowcount NUMBER;

BEGIN

log_start('bulk_ins');

bulk_val(arr);

FORALL i IN INDICES OF arr

INSERT INTO emps

(emp_id

,name

,emp_type

,start_date

,end_date

,dummy_ts

,dummy_tsz

,life_history)

VALUES (emp_id_seq.NEXTVAL

,arr(i).name

,arr(i).emp_type

,UTIL.date_val(arr(i).start_date)

,UTIL.date_val(arr(i).end_date)

,UTIL.timestamp_val(arr(i).dummy_ts)

,UTIL.timestamp_tz_val(arr(i).dummy_tsz)

,arr(i).life_history);

rowcount := SQL%ROWCOUNT;

msg('INSERT emps: ' || rowcount);

log_end('rowcount=' || rowcount);

RETURN rowcount;

EXCEPTION

WHEN DUP_VAL_ON_INDEX THEN

UTIL.raise_dup_val_on_index;

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END bulk_ins;

$if false $then/*need to grant DBMS_CRYPTO*/

FUNCTION hash (r IN rowtype) RETURN VARCHAR2 IS

sep CONSTANT VARCHAR2(1) := '|';

digest CLOB;

ret RAW(2000);

BEGIN

log_start('hash');

digest := digest || sep || r.emp_id;

digest := digest || sep || r.name;

digest := digest || sep || r.emp_type;

digest := digest || sep || TO_CHAR(r.start_date, UTIL.DATE_FORMAT);

digest := digest || sep || TO_CHAR(r.end_date, UTIL.DATE_FORMAT);

digest := digest || sep || TO_CHAR(r.dummy_ts, UTIL.TIMESTAMP_FORMAT);

digest := digest || sep || TO_CHAR(r.dummy_tsz, UTIL.TIMESTAMP_TZ_FORMAT);

ret := DBMS_CRYPTO.hash(digest, DBMS_CRYPTO.hash_sh1);

log_end(ret);

RETURN ret;

EXCEPTION

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END hash;

$end

...

END EMPS$TAPI;
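To illustrate how the two record types fit together, here is a hedged sketch of a caller inserting one row through the generated TAPI. It assumes the EMPS$TAPI package above is installed, that UTIL.DATE_FORMAT (referenced in the hash function) is a public constant that UTIL.date_val also understands, and the employee details are made up for the example.

```sql
DECLARE
  rv EMPS$TAPI.rvtype;   -- pre-validated values, mostly plain strings
  r  EMPS$TAPI.rowtype;  -- the row as stored, including audit columns
BEGIN
  rv.name       := 'Joe Bloggs';      -- hypothetical sample data
  rv.emp_type   := 'CONTRACTOR';
  -- Assumption: UTIL.date_val parses strings in UTIL.DATE_FORMAT.
  rv.start_date := TO_CHAR(SYSDATE, UTIL.DATE_FORMAT);

  -- ins validates rv first and raises an error if val returns a message;
  -- emp_id is taken from emp_id_seq, so it is not supplied here.
  r := EMPS$TAPI.ins(rv => rv);

  DBMS_OUTPUT.put_line('Created emp_id=' || r.emp_id
                    || ' version_id=' || r.version_id);
END;
/
```

Because rvtype holds strings, a badly formatted date is reported by val as a validation message rather than failing with a conversion error while the record is being populated.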

Example Template

The following is a template which provides the source used to generate the above TAPI. The syntax may look very strange, but it is explained further down. My goal was not to invent an all-singing all-dancing general-purpose syntax for code generation – but to have “just enough” expressive power to generate the kind of code I require.

create or replace PACKAGE TEMPLATES AS

$if false $then

<%TEMPLATE TAPI_PACKAGE_SPEC>

CREATE OR REPLACE PACKAGE #TAPI# AS

/**********************************************************

Table API for #table#

#SYSDATE# - Generated by #USER#

**********************************************************/

<%IF EVENTS>

/*Repeat Types*/

DAILY CONSTANT VARCHAR2(100) := 'DAILY';

WEEKLY CONSTANT VARCHAR2(100) := 'WEEKLY';

MONTHLY CONSTANT VARCHAR2(100) := 'MONTHLY';

ANNUALLY CONSTANT VARCHAR2(100) := 'ANNUALLY';

<%END IF>

SUBTYPE rowtype IS #table#%ROWTYPE;

TYPE arraytype IS TABLE OF rowtype INDEX BY BINARY_INTEGER;

TYPE rvtype IS RECORD

(<%COLUMNS EXCLUDING AUDIT INCLUDING ROWID,EVENTS.REPEAT_IND>

#col#--- VARCHAR2(4000)~

#col#--- #table#.#col#%TYPE{ID}~

#col#--- #table#.#col#%TYPE{LOB}~

#col#--- VARCHAR2(20){ROWID}~

#col#--- VARCHAR2(1){EVENTS.REPEAT_IND}~

,<%END>

);

TYPE rvarraytype IS TABLE OF rvtype INDEX BY BINARY_INTEGER;

-- validate the row (returns an error message if invalid)

FUNCTION val (rv IN rvtype) RETURN VARCHAR2;

-- insert a row

FUNCTION ins (rv IN rvtype) RETURN rowtype;

-- insert multiple rows, array may be sparse; returns no. records inserted

FUNCTION bulk_ins (arr IN rvarraytype) RETURN NUMBER;

...

<%IF DBMS_CRYPTO><%ELSE>$if false $then/*need to grant DBMS_CRYPTO*/<%END IF>

-- generate a hash for the record

FUNCTION hash (r IN rowtype) RETURN VARCHAR2;

<%IF DBMS_CRYPTO><%ELSE>$end<%END IF>

END #TAPI#;

<%END TEMPLATE>

<%TEMPLATE TAPI_PACKAGE_BODY>

CREATE OR REPLACE PACKAGE BODY #TAPI# AS

/**********************************************************

Table API for #table#

#SYSDATE# - Generated by #USER#

**********************************************************/

FUNCTION val (rv IN rvtype) RETURN VARCHAR2 IS

-- Validates the record but without reference to any other rows or tables

-- (i.e. avoid any queries in here).

-- Unique and referential integrity should be validated via suitable db

-- constraints (violations will be raised when the ins/upd/del is attempted).

-- Complex cross-record validations should usually be performed by a XAPI

-- prior to the call to the TAPI.

BEGIN

log_start('val');

<%COLUMNS EXCLUDING GENERATED,SURROGATE_KEY,NULLABLE>

UTIL.val_not_null (val => rv.#col#, column_name => '#COL#');~

<%END>

<%IF EVENTS>

IF rv.repeat_ind = 'Y' THEN

UTIL.val_not_null (val => rv.repeat, column_name => 'REPEAT');

UTIL.val_not_null (val => rv.repeat_interval, column_name => 'REPEAT_INTERVAL');

END IF;

<%END IF>

<%COLUMNS EXCLUDING GENERATED,SURROGATE_KEY,LOBS INCLUDING EVENTS.REPEAT_IND>

UTIL.val_ind (val => rv.#col#, column_name => '#COL#');{IND}~

UTIL.val_yn (val => rv.#col#, column_name => '#COL#');{YN}~

UTIL.val_max_len (val => rv.#col#, len => #MAXLEN#, column_name => '#COL#');{VARCHAR2}~

UTIL.val_numeric (val => rv.#col#, column_name => '#COL#');{NUMBER}~

UTIL.val_date (val => rv.#col#, column_name => '#COL#');{DATE}~

UTIL.val_datetime (val => rv.#col#, column_name => '#COL#');{DATETIME}~

UTIL.val_timestamp (val => rv.#col#, column_name => '#COL#');{TIMESTAMP}~

UTIL.val_timestamp_tz (val => rv.#col#, column_name => '#COL#');{TIMESTAMP_TZ}~

UTIL.val_integer (val => rv.#col#, range_low => 1, column_name => '#COL#');{EVENTS.REPEAT_INTERVAL}~

UTIL.val_domain

(val => rv.#col#

,valid_values => t_str_array(DAILY, WEEKLY, MONTHLY, ANNUALLY)

,column_name => '#COL#');{EVENTS.REPEAT}~

~

<%END>

<%IF EVENTS>

UTIL.val_datetime_range

(start_dt => rv.start_dt

,end_dt => rv.end_dt

,label => 'Event Date/Time Range');

<%END IF>

<%IF EVENT_TYPES>

UTIL.val_cond

(cond => rv.event_type = UPPER(rv.event_type)

,msg => 'Event Type Code must be all uppercase'

,column_name => 'EVENT_TYPE');

UTIL.val_cond

(cond => rv.event_type = TRANSLATE(rv.event_type,'X -:','X___')

,msg => 'Event Type Code cannot include spaces, dashes (-) or colons (:)'

,column_name => 'EVENT_TYPE');

UTIL.val_date_range

(start_date => rv.start_date

,end_date => rv.end_date

,label => 'Event Types Date Range');

<%END IF>

--TODO: add more validations if necessary

log_end;

RETURN UTIL.first_error;

EXCEPTION

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END val;

FUNCTION ins (rv IN rvtype) RETURN rowtype IS

r rowtype;

error_msg VARCHAR2(32767);

BEGIN

log_start('ins');

error_msg := val (rv => rv);

IF error_msg IS NOT NULL THEN

raise_error(error_msg);

END IF;

INSERT INTO #table#

(<%COLUMNS EXCLUDING GENERATED>

#col#~

,<%END>)

VALUES(<%COLUMNS EXCLUDING GENERATED>

#seq#.NEXTVAL{SURROGATE_KEY}~

rv.#col#~

UTIL.num_val(rv.#col#){NUMBER}~

UTIL.date_val(rv.#col#){DATE}~

UTIL.datetime_val(rv.#col#){DATETIME}~

UTIL.timestamp_val(rv.#col#){TIMESTAMP}~

UTIL.timestamp_tz_val(rv.#col#){TIMESTAMP_TZ}~

,<%END>)

RETURNING

<%COLUMNS INCLUDING VIRTUAL>

#col#~

,<%END>

INTO <%COLUMNS INCLUDING VIRTUAL>

r.#col#~

,<%END>;

msg('INSERT #table#: ' || SQL%ROWCOUNT);

log_end;

RETURN r;

EXCEPTION

WHEN DUP_VAL_ON_INDEX THEN

UTIL.raise_dup_val_on_index;

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END ins;

FUNCTION bulk_ins (arr IN rvarraytype) RETURN NUMBER IS

rowcount NUMBER;

BEGIN

log_start('bulk_ins');

bulk_val(arr);

FORALL i IN INDICES OF arr

INSERT INTO #table#

(<%COLUMNS EXCLUDING GENERATED>

#col#~

,<%END>)

VALUES (<%COLUMNS EXCLUDING GENERATED>

#seq#.NEXTVAL{SURROGATE_KEY}~

arr(i).#col#~

UTIL.num_val(arr(i).#col#){NUMBER}~

UTIL.date_val(arr(i).#col#){DATE}~

UTIL.datetime_val(arr(i).#col#){DATETIME}~

UTIL.timestamp_val(arr(i).#col#){TIMESTAMP}~

UTIL.timestamp_tz_val(arr(i).#col#){TIMESTAMP_TZ}~

,<%END>);

rowcount := SQL%ROWCOUNT;

msg('INSERT #table#: ' || rowcount);

log_end('rowcount=' || rowcount);

RETURN rowcount;

EXCEPTION

WHEN DUP_VAL_ON_INDEX THEN

UTIL.raise_dup_val_on_index;

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END bulk_ins;

<%IF DBMS_CRYPTO><%ELSE>$if false $then/*need to grant DBMS_CRYPTO*/<%END IF>

FUNCTION hash (r IN rowtype) RETURN VARCHAR2 IS

sep CONSTANT VARCHAR2(1) := '|';

digest CLOB;

ret RAW(2000);

BEGIN

log_start('hash');

<%COLUMNS EXCLUDING GENERATED,LOBS>

digest := digest || sep || r.#col#;~

digest := digest || sep || TO_CHAR(r.#col#, UTIL.DATE_FORMAT);{DATE}~

digest := digest || sep || TO_CHAR(r.#col#, UTIL.DATETIME_FORMAT);{DATETIME}~

digest := digest || sep || TO_CHAR(r.#col#, UTIL.TIMESTAMP_FORMAT);{TIMESTAMP}~

digest := digest || sep || TO_CHAR(r.#col#, UTIL.TIMESTAMP_TZ_FORMAT);{TIMESTAMP_TZ}~

<%END>

ret := DBMS_CRYPTO.hash(digest, DBMS_CRYPTO.hash_sh1);

log_end(ret);

RETURN ret;

EXCEPTION

WHEN UTIL.application_error THEN

log_end('application_error');

RAISE;

WHEN OTHERS THEN

UTIL.log_sqlerrm;

RAISE;

END hash;

<%IF DBMS_CRYPTO><%ELSE>$end<%END IF>

END #TAPI#;

<%END TEMPLATE>

$end

END TEMPLATES;

Template Syntax

You may be wondering what all the <%bla> and #bla# tags mean. These are the controlling elements for my code generator.

All template code is embedded within $if false $then ... $end so that the template package spec can be compiled without error in the schema, while still allowing most syntax highlighters to make the template easy to read and edit. This source is then read by the generator from the TEMPLATES database package.

Each template within the TEMPLATES package is delineated by the following structural codes, each of which must appear at the start of a line:

<%TEMPLATE template_name>

...

<%END TEMPLATE>

Anything in the TEMPLATES package not within these structural elements is ignored by the generator.

Some simple placeholders are supported anywhere in a template:

- #SYSDATE# – Today’s date in DD-MON-YYYY format
- #TABLE# – Table name in uppercase
- #table# – Table name in lowercase
- #USER# – User name who executed the procedure
- #Entity# – User-friendly name based on table name, singular (e.g. EVENTS -> Event)
- #Entities# – User-friendly name based on table name
- #TAPI# – Table API package name
- #APEXAPI# – Apex API package name
- \n – Insert a linefeed (not often required, since actual linefeeds in the template are usually retained)

These are all case-sensitive; in some cases an UPPERCASE, lowercase and Initcap version is supported for a placeholder.
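As a rough illustration of how simple placeholders like these could be substituted, here is a minimal Python sketch. It is not the generator's actual code (which is PL/SQL); the function name `fill_placeholders` and its parameters are hypothetical, and only four of the placeholders are covered.

```python
from datetime import date

MONTHS = ("JAN", "FEB", "MAR", "APR", "MAY", "JUN",
          "JUL", "AUG", "SEP", "OCT", "NOV", "DEC")

def fill_placeholders(template: str, table: str, user: str, today: date) -> str:
    """Replace a few case-sensitive placeholders (hypothetical helper)."""
    values = {
        # DD-MON-YYYY, built by hand to avoid locale-dependent month names
        "#SYSDATE#": f"{today.day:02d}-{MONTHS[today.month - 1]}-{today.year}",
        "#TABLE#": table.upper(),
        "#table#": table.lower(),
        "#USER#": user,
    }
    for placeholder, value in values.items():
        template = template.replace(placeholder, value)
    return template

print(fill_placeholders(
    "-- #TABLE# API, generated #SYSDATE# by #USER#\nSELECT * FROM #table#;",
    "Emps", "JEFF", date(2016, 2, 16)))
```

Because the lookup is a plain string replacement, #TABLE# and #table# are naturally treated as two different placeholders.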

Code portions that are only required in certain cases may be surrounded with the IF/ELSE/END IF structure:

<%IF condition>

...

<%ELSE>

...

<%END IF>

Currently the supported conditions are: LOBS (true if the table has any LOB-type columns); ROWID (true if the table does NOT have a surrogate key, i.e. a primary key matched by name to a sequence); the name of a table (useful for code that should only be generated for a specific table); or the name of a DBMS_xxx package (useful for code that should only be generated if the owner has been granted EXECUTE on the named DBMS_xxx package).

To negate a condition, simply leave the first part of the IF/ELSE structure empty, e.g.:

<%IF LOBS><%ELSE> /*this table has no LOBS*/ <%END IF>
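To make the IF/ELSE behaviour concrete, here is a minimal Python sketch of how such a block could be evaluated, including the "empty first part" trick for negation. It is only an illustration under stated assumptions: the name `expand_ifs` is hypothetical, conditions are passed in as a set of names that are true, and nested IF blocks are not handled.

```python
import re

# <%IF cond> then-part [<%ELSE> else-part] <%END IF>, non-nested only
IF_BLOCK = re.compile(
    r"<%IF (\w+)>(.*?)(?:<%ELSE>(.*?))?<%END IF>", re.DOTALL)

def expand_ifs(template: str, true_conditions: set) -> str:
    """Keep the then-part when the condition is true, else the else-part."""
    def pick(match):
        condition = match.group(1)
        then_part = match.group(2)
        else_part = match.group(3) or ""
        return then_part if condition in true_conditions else else_part
    return IF_BLOCK.sub(pick, template)

negated = "<%IF LOBS><%ELSE> /*this table has no LOBS*/ <%END IF>"
print(repr(expand_ifs(negated, set())))      # the comment survives
print(repr(expand_ifs(negated, {"LOBS"})))   # nothing is generated
```

The same shape covers the DBMS_CRYPTO guard around the hash function in the template above: when the condition is false, only the ELSE part is emitted.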

Code portions that need to be repeated for each column (or a subset of columns) in the table use the COLUMNS structure:

(<%COLUMNS>

#col#--- => :#COL#~

,<%END>)

The COLUMNS structure looks very weird and might take a while to get used to, but basically it contains a list of sub-templates, delimited by tildes (~). The first sub-template (e.g. #col#--- => :#COL#) is used for each column, and the second sub-template (e.g. ,) is inserted between each pair of columns (if there is more than one column). In the above example, our emps table would result in the following generated code:

(emp_id => :EMP_ID

,name => :NAME

,emp_type => :EMP_TYPE

,start_date => :START_DATE

,end_date => :END_DATE

,dummy_ts => :DUMMY_TS

,dummy_tsz => :DUMMY_TSZ

,life_history => :LIFE_HISTORY)

Notice that #col# is replaced with the column name in lowercase, and #COL# is replaced with the column name in uppercase. In addition, the --- is a special code that causes the generator to insert additional spaces so that the code is aligned vertically. Notice also that the second sub-template (the separator bit with the comma) also includes a carriage return (after ~ and before ,). If we had instead used the following template:

<%COLUMNS>

#col#--- => :#COL#~,<%END>

This would have been the result:

emp_id => :EMP_ID,name => :NAME,emp_type => :EMP_TYPE,start_date => :START_DATE,end_date => :END_DATE,dummy_ts => :DUMMY_TS,dummy_tsz => :DUMMY_TSZ,life_history => :LIFE_HISTORY
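The per-column expansion described above can be sketched in a few lines of Python. This is a simplified model, not the generator's real PL/SQL: `expand_columns` is a hypothetical name, the body is assumed to be just "item~separator", and the `---` padding is assumed to pad every name to the width of the longest column name.

```python
def expand_columns(body: str, columns: list) -> str:
    """Expand 'item~separator' once per column (simplified sketch)."""
    item, _, separator = body.partition("~")
    width = max(len(name) for name in columns)
    rendered = []
    for name in columns:
        padding = " " * (width - len(name))   # assumed meaning of '---'
        rendered.append(item.replace("#col#", name.lower())
                            .replace("#COL#", name.upper())
                            .replace("---", padding))
    return separator.join(rendered)

cols = ["EMP_ID", "NAME", "EMP_TYPE"]
# Separator with a line break before the comma: one column per line
print("(" + expand_columns("#col#--- => :#COL#~\n,", cols) + ")")
# Separator without the line break: everything on one line
print(expand_columns("#col#--- => :#COL#~,", cols))
```

The only difference between the two calls is whether the separator sub-template contains a line break, which is exactly what decides between the multi-line and single-line results.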

The generator gives you a great deal of control over which columns are included. The COLUMNS structure supports three optional clauses: INCLUDING, EXCLUDING and ONLY.

<%COLUMNS>

(all columns in the table, EXCEPT for virtual columns)

<%END>

<%COLUMNS INCLUDING VIRTUAL>

(all columns in the table, including virtual columns)

<%END>

<%COLUMNS EXCLUDING PK>

(all columns except for Primary Key columns)

<%END>

<%COLUMNS EXCLUDING LOBS>

(all columns except for LOB-type columns)

<%END>

<%COLUMNS EXCLUDING EMPS.NAME>

(all columns - except for the specified column)

<%END>

<%COLUMNS EXCLUDING AUDIT>

(all columns except for the audit columns such as CREATED_BY, etc.)

<%END>

<%COLUMNS ONLY PK>

(only Primary Key columns)

<%END>

<%COLUMNS ONLY PK,NAME>

(only Primary Key columns and columns named NAME)

<%END>

<%COLUMNS INCLUDING ROWID>

(all columns in the table, plus the pseudocolumn ROWID)

<%END>

<%COLUMNS INCLUDING MADEUPNAME>

(all columns in the table, plus a fake column)

<%END>

<%COLUMNS INCLUDING EMPS.MADEUPNAME>

(all columns in the table, plus a fake column for the specified table)

<%END>

<%COLUMNS ONLY SURROGATE_KEY,VERSION_ID INCLUDING ROWID>

(multiple criteria may be combined)

<%END>
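One way to picture how the three clauses combine is the following Python sketch. Treat it as a guess at the semantics rather than a description of the generator: `select_columns` is a hypothetical function, each column is modelled as a (name, tags) pair, the order in which the clauses are applied is an assumption, and table-qualified names such as EMPS.NAME are not handled.

```python
def select_columns(columns, only=(), excluding=(), including=()):
    """Return column names chosen by ONLY / EXCLUDING / INCLUDING criteria.

    columns: list of (name, tags) pairs, e.g. ("EMP_ID", {"PK"}).
    A criterion matches a column by its name or by one of its tags.
    """
    def matches(column, criteria):
        name, tags = column
        return any(c == name or c in tags for c in criteria)

    # Virtual columns are left out unless INCLUDING VIRTUAL is given.
    chosen = [c for c in columns
              if "VIRTUAL" not in c[1] or "VIRTUAL" in including]
    if only:
        chosen = [c for c in chosen if matches(c, only)]
    chosen = [c for c in chosen if not matches(c, excluding)]
    names = [name for name, _ in chosen]
    # Any other INCLUDING entry (ROWID, a made-up name) is appended as an extra.
    names += [extra for extra in including
              if extra != "VIRTUAL" and extra not in names]
    return names

emps = [("EMP_ID", {"PK", "SURROGATE_KEY"}), ("NAME", set()),
        ("VERSION_ID", set()), ("CREATED_BY", {"AUDIT"}),
        ("NAME_UPPER", {"VIRTUAL"})]   # NAME_UPPER is an invented virtual column
print(select_columns(emps, only=("SURROGATE_KEY", "VERSION_ID"),
                     including=("ROWID",)))
```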

Within a sub-template the following placeholders are recognised:

- #COL# – column name in uppercase
- #col# – column name in lowercase
- #Label# – generated user-friendly label based on column name
- #MAXLEN# – max length for a CHAR-type column
- #DATA_DEFAULT# – column default value
- #SEQ# – surrogate key sequence name
- #00i# – 001, 002, 003 etc. in order of column id
- --- – padding (inserts just enough extra spaces depending on length of column name so that code is aligned vertically)

For example, the following generates a comma-delimited list of user-friendly labels for each column in the table:

<%COLUMNS>#Label#~, <%END>

Emp, Name, Emp Type, Start, End, Dummy, Dummy, Life History

Side Note: it’s noteworthy that I have no need for a “#datatype#” placeholder; in most cases my templates will anchor to the column’s datatype anyway, so a template just needs to use #col#%TYPE.

Multiple additional sub-templates may be provided within a <%COLUMNS> structure, to be used for certain columns. These must end with a {X} indicator, where X can be a data type or column name. Other indicators are supported for special cases as well.

<%COLUMNS>

Default subtemplate ~

ID column {ID}~

NUMBER column {NUMBER}~

Date/time column {DATETIME}~

Date column {DATE}~

Timestamp column {TIMESTAMP}~

Timestamp with time zone {TIMESTAMP_TZ}~

Indicator (Y or null) column {IND}~

Yes/No (Y or N) column {YN}~

Any other VARCHAR2 column {VARCHAR2}~

Any LOB-type column (e.g. BLOB, CLOB) {LOB}~

Any specific datatype {CLOB}~

Primary key matched to a sequence {SURROGATE_KEY}~

Special case for a specific column {TABLE.COLUMN}~

Extra code to be used if NO columns match {NONE}~

,<%END>
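As a sketch of how a list of sub-templates like this might be picked apart, the Python below splits a COLUMNS body on tildes, files each sub-template under its {X} indicator, and renders one column. The names `parse_subtemplates` and `render_column` are hypothetical, and several things are assumptions: the last sub-template is taken to be the separator, the first one without an indicator is taken to be the default, and each column is looked up under a single key (so precedence between, say, {NUMBER} and {TABLE.COLUMN} is not modelled, nor is {NONE}).

```python
import re

# An indicator such as {NUMBER} or {EMPS.NAME} at the end of a sub-template
INDICATOR = re.compile(r"\{([A-Za-z0-9_.]+)\}\s*$")

def parse_subtemplates(body: str):
    """Split a COLUMNS body into (default, special-by-indicator, separator)."""
    parts = body.split("~")
    separator = parts[-1]
    default, special, found_default = "", {}, False
    for part in parts[:-1]:
        match = INDICATOR.search(part)
        if match:
            special[match.group(1)] = part[:match.start()]
        elif not found_default:
            default, found_default = part, True
    return default, special, separator

def render_column(name: str, type_key: str, default: str, special: dict) -> str:
    """Use the sub-template for type_key if there is one, else the default."""
    chosen = special.get(type_key, default)
    return chosen.replace("#col#", name.lower())

body = ("#seq#.NEXTVAL{SURROGATE_KEY}~arr(i).#col#~"
        "UTIL.num_val(arr(i).#col#){NUMBER}~,")
default, special, separator = parse_subtemplates(body)
print(render_column("SALARY", "NUMBER", default, special))
print(render_column("NAME", "VARCHAR2", default, special))
```

The sample body is a cut-down version of the VALUES list in the bulk_ins template, where the {SURROGATE_KEY} sub-template comes before the default one.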

The “data type” for a column is usually just the data type from the schema data dictionary; however, there are some special cases where a special data type is derived from the column name:

- ID: a NUMBER column with a name ending with _ID
- DATETIME: a DATE column with a name ending with _DT
- IND: a VARCHAR2 column with a name ending with _IND
- YN: a VARCHAR2 column with a name ending with _YN
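These naming rules are simple enough to express as a small lookup. The Python sketch below is only an illustration (the generator itself is PL/SQL); `derived_type` and `SUFFIX_RULES` are hypothetical names, and the column names in the example are invented.

```python
# (dictionary data type, column-name suffix, derived "data type")
SUFFIX_RULES = [
    ("NUMBER", "_ID", "ID"),
    ("DATE", "_DT", "DATETIME"),
    ("VARCHAR2", "_IND", "IND"),
    ("VARCHAR2", "_YN", "YN"),
]

def derived_type(column_name: str, data_type: str) -> str:
    """Return the special type implied by the name, else the dictionary type."""
    for base_type, suffix, special in SUFFIX_RULES:
        if data_type == base_type and column_name.upper().endswith(suffix):
            return special
    return data_type

for name, data_type in [("EMP_ID", "NUMBER"), ("START_DT", "DATE"),
                        ("START_DATE", "DATE"), ("ACTIVE_YN", "VARCHAR2")]:
    print(name, "->", derived_type(name, data_type))
```

Note that both the data type and the suffix have to match, so a VARCHAR2 column that happens to end in _ID stays a plain VARCHAR2.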

Within a template it is possible to import the code from another template (e.g. to share code between multiple templates, or to facilitate a nested-IF structure) using this structure:

<%INCLUDE OTHERTEMPLATE>

This will cause the generator to find a template named OTHERTEMPLATE, evaluate it, then insert it at the given position.

This method has allowed my code generator to be quite flexible and powerful, makes it easy to add additional code to all my API packages and other generated code, and makes it easy to find and fix errors.

You can download all the source for the template and generator below. Note that a new Sample Apex application is included (f560.sql) which works in Apex 5 and uses the new Apex API. Disclaimer: This is a work in progress!

If you find it useful or you have suggestions for improvement please comment.

Source code/download: https://github.com/jeffreykemp/jk64-sample-apex-xapi

If your schemas are like those I deal with, almost every table has a doppelgänger which serves as a journal table; an “after insert, update or delete” trigger copies each and every change into the journal table. It’s a bit of a drag on performance for large updates, isn’t it?

I was reading through the docs (as one does) and noticed this bit:

Scenario: You want to record every change to hr.employees.salary in a new table, employee_salaries. A single UPDATE statement will update many rows of the table hr.employees; therefore, bulk-inserting rows into employee.salaries is more efficient than inserting them individually.

Solution: Define a compound trigger on updates of the table hr.employees, as in Example 9-3. You do not need a BEFORE STATEMENT section to initialize idx or salaries, because they are state variables, which are initialized each time the trigger fires (even when the triggering statement is interrupted and restarted).

http://docs.oracle.com/cd/B28359_01/appdev.111/b28370/triggers.htm#CIHFHIBH

The example shows how to use a compound trigger to not only copy the records to another table, but to do so with a far more efficient bulk insert. Immediately my journal table triggers sprang to mind – would this approach give me a performance boost?

The answer is, yes.

My test cases are linked below – emp1 is a table with an ordinary set of triggers, which copies each insert/update/delete into its journal table (emp1$jn) individually for each row. emp2 is a table with a compound trigger instead, which does a bulk insert of 100 journal entries at a time.

I ran a simple test case involving 100,000 inserts and 100,000 updates into both tables; the first time, I ran emp1 first followed by emp2; the second time, I reversed the order. From the results below you’ll see I got a consistent improvement, shaving about 4-7 seconds off about 21 seconds, an improvement of 19% to 35%. This is with the default value of 100 for the bulk operation; tweaking this might wring a bit more speed out of it (at the cost of using more memory per session).

Of course, this performance benefit only occurs for multi-row operations; if your application is only doing single-row inserts, updates or deletes you won’t see any difference in performance. However, I still think this method is neater (only one trigger) than the alternative, so I would recommend it. The only reason I wouldn’t use this method is if my target might potentially be a pre-11g database, which doesn’t support compound triggers.

Here are the test case scripts if you want to check it out for yourself:

ordinary_journal_trigger.sql

compound_journal_trigger.sql

test_journal_triggers.sql

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.3.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

insert emp1 (test run #1)

100000 rows created.

Elapsed: 00:00:21.19

update emp1 (test run #1)

100000 rows updated.

Elapsed: 00:00:21.40

insert emp2 (test run #1)

100000 rows created.

Elapsed: 00:00:16.01

update emp2 (test run #1)

100000 rows updated.

Elapsed: 00:00:13.89

Rollback complete.

insert emp2 (test run #2)

100000 rows created.

Elapsed: 00:00:15.94

update emp2 (test run #2)

100000 rows updated.

Elapsed: 00:00:16.60

insert emp1 (test run #2)

100000 rows created.

Elapsed: 00:00:21.01

update emp1 (test run #2)

100000 rows updated.

Elapsed: 00:00:20.48

Rollback complete.
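The quoted range of 19% to 35% can be checked against the elapsed times in this log. The short Python snippet below does the arithmetic; the numbers are copied from the output above, and the helper name `improvement` is just for illustration.

```python
# (row-by-row emp1 seconds, compound-trigger emp2 seconds), from the log above
timings = {
    "insert, run 1": (21.19, 16.01),
    "update, run 1": (21.40, 13.89),
    "insert, run 2": (21.01, 15.94),
    "update, run 2": (20.48, 16.60),
}

def improvement(row_by_row: float, bulk: float):
    """Return (seconds saved, percentage saved) for one pair of timings."""
    saved = row_by_row - bulk
    return saved, 100 * saved / row_by_row

for label, (emp1, emp2) in timings.items():
    saved, pct = improvement(emp1, emp2)
    print(f"{label}: saved {saved:.2f}s ({pct:.0f}%)")
```

The savings work out at roughly 3.9 to 7.5 seconds per 100,000-row statement, with the smallest gain (about 19%) on the second update run and the largest (about 35%) on the first.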

And here, in all its glory, is the fabulous compound trigger:

CREATE OR REPLACE TRIGGER emp2$trg

FOR INSERT OR UPDATE OR DELETE ON emp2

COMPOUND TRIGGER

FLUSH_THRESHOLD CONSTANT SIMPLE_INTEGER := 100;

TYPE jnl_t IS TABLE OF emp2$jn%ROWTYPE

INDEX BY SIMPLE_INTEGER;

jnls jnl_t;

rec emp2$jn%ROWTYPE;

blank emp2$jn%ROWTYPE;

PROCEDURE flush_array (arr IN OUT jnl_t) IS

BEGIN

FORALL i IN 1..arr.COUNT

INSERT INTO emp2$jn VALUES arr(i);

arr.DELETE;

END flush_array;

BEFORE EACH ROW IS

BEGIN

IF INSERTING THEN

IF :NEW.db_created_by IS NULL THEN

:NEW.db_created_by := NVL(v('APP_USER'), USER);

END IF;

ELSIF UPDATING THEN

:NEW.db_modified_on := SYSDATE;

:NEW.db_modified_by := NVL(v('APP_USER'), USER);

:NEW.version_id := :OLD.version_id + 1;

END IF;

END BEFORE EACH ROW;

AFTER EACH ROW IS

BEGIN

rec := blank;

IF INSERTING OR UPDATING THEN

rec.id := :NEW.id;

rec.name := :NEW.name;

rec.db_created_on := :NEW.db_created_on;

rec.db_created_by := :NEW.db_created_by;

rec.db_modified_on := :NEW.db_modified_on;

rec.db_modified_by := :NEW.db_modified_by;

rec.version_id := :NEW.version_id;

IF INSERTING THEN

rec.jn_action := 'I';

ELSIF UPDATING THEN

rec.jn_action := 'U';

END IF;

ELSIF DELETING THEN

rec.id := :OLD.id;

rec.name := :OLD.name;

rec.db_created_on := :OLD.db_created_on;

rec.db_created_by := :OLD.db_created_by;

rec.db_modified_on := :OLD.db_modified_on;

rec.db_modified_by := :OLD.db_modified_by;

rec.version_id := :OLD.version_id;

rec.jn_action := 'D';

END IF;

rec.jn_timestamp := SYSTIMESTAMP;

jnls(NVL(jnls.LAST,0) + 1) := rec;

IF jnls.COUNT >= FLUSH_THRESHOLD THEN

flush_array(arr => jnls);

END IF;

END AFTER EACH ROW;

AFTER STATEMENT IS

BEGIN

flush_array(arr => jnls);

END AFTER STATEMENT;

END emp2$trg;

Just to be clear: it’s not that it’s a compound trigger that impacts the performance; it’s the bulk insert. However, using the compound trigger made the bulk operation much simpler and neater to implement.
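To see why the bulk operation is what matters, it can help to model the buffering on its own. The Python class below is a toy model of the trigger's logic, not a replacement for it: `JournalBuffer` is a hypothetical name, and each recorded flush stands in for one bulk INSERT into the journal table.

```python
class JournalBuffer:
    """Toy model: collect journal rows and write them in batches."""

    def __init__(self, threshold: int = 100):
        self.threshold = threshold
        self.pending = []
        self.flushes = []   # size of each non-empty bulk insert

    def after_each_row(self, row):
        self.pending.append(row)
        if len(self.pending) >= self.threshold:
            self.flush()

    def after_statement(self):
        # Write whatever is left over once the statement has finished
        self.flush()

    def flush(self):
        if self.pending:
            self.flushes.append(len(self.pending))
        self.pending = []

buffer = JournalBuffer(threshold=100)
for row_id in range(250):
    buffer.after_each_row(row_id)
buffer.after_statement()
print(buffer.flushes)   # three bulk inserts instead of 250 single-row inserts
```

With a threshold of 100, a 250-row statement needs three journal inserts (100, 100 and 50 rows), where the row-by-row trigger would issue 250.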

UPDATE 14/08/2014: I came across a bug in the trigger which caused it to not flush the array when doing a MERGE. I found I had to pass the array as a parameter internally.

Here is a short story about a little problem that caused me a bit of grief; but in the end had a simple cause and a simple fix.

I had a dynamic action in my APEX 4.1 app that had to run some PL/SQL – which was working fine, except the PL/SQL kept on getting longer and longer and more complex; so quite naturally I wanted it to be encapsulated in a database procedure.

I did so, but it didn’t work: the page ran without error, but it seemed like the dynamic action wasn’t firing. It was supposed to change the value of some display items on the page in response to the change of a radio button item, but now they weren’t changing! There were no debug warnings or logs to give a hint either. I tried using Chrome’s developer tools to trace it but that just showed me a very high-level view of what the client was doing, and didn’t report any errors or warnings.

I reverted to my original code, and it worked fine. Ok, so that means it’s probably a problem with my procedure.

I checked and rechecked my procedure. Didn’t seem to be anything wrong with it. I added a line into the procedure to raise an exception. The APEX page dutifully reported the PL/SQL error in the Ajax call – which means that my procedure was being called successfully. Also, I included the return values in the exception message, and this proved that my procedure was correctly determining the values. They just weren’t being returned to the items on the page.

I tried raising an exception in the APEX dynamic action’s PL/SQL Code. That worked. The exception message correctly showed the new values were being returned; they still weren’t being populated on the page.

I tried removing all the items from the Page Items to Return setting; then I gradually added them back in, one by one. I narrowed it down to just one item. If I included that item, none of the items were being updated when the procedure returned. If I excluded that item, all the other items were correctly being updated when the procedure returned. Of course, that wasn’t a solution, because there was a cascade of other dynamic actions that were dependent on that particular item, so it has to be updated.

After lunch and a short walk, it occurred to me: unlike the other parameters, that particular parameter was anchored to a database column defined as CHAR(1). Could that be a problem?

Sure enough, when I changed the parameter’s data type from column%TYPE (which mapped to a CHAR) to just a plain VARCHAR2, everything worked.


Yet another reason to avoid CHAR, I guess.
