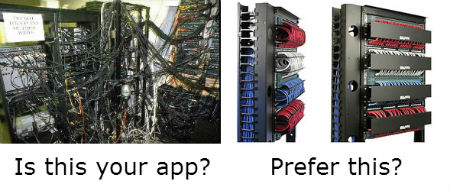

I recently saw this approach used in a complex Apex application built for my current client, and I liked what I saw – so I used a similar one in another project of mine, with good results.

- Pages load and process faster

- Less PL/SQL compilation at runtime

- Code is more maintainable and reusable

- Database object dependency analysis is much more reliable

- Apex application export files are smaller – faster to deploy

- Apex pages can be copied and adapted (e.g. for different interfaces) more easily

How did all this happen? Nothing earth-shattering or terribly original. I made the following simple changes – and they only took about a week for a moderately complex 100-page application (that had been built haphazardly over the period of a few years):

- All PL/SQL Process actions moved to database packages

- Each page only has a single Before Header Process, which calls a procedure (e.g. CTRL_PKG.p1_load;)

- Each page only has a single Processing Process, which calls a procedure (e.g. CTRL_PKG.p1_process;)

- Computations are all removed; they are now done in the database package

The only changes I needed to make to the PL/SQL to make it work in a database package were that bind variable references (e.g. :P1_CUSTOMER_NAME) needed to be changed to use the V() (for strings and dates) or NV() (for numbers) functions; and I had to convert the Conditions on the Processes into the equivalent logic in PL/SQL. Generally, I would retrieve the values of page items into local variables before using them in a query.
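As a rough sketch of that conversion: the CUSTOMERS table, its columns, the P1_CUSTOMER_ID item and the 'SAVE' request value below are all made up for illustration, and the procedure is shown standalone for brevity (in practice it would sit in the package body).

```sql
-- Before (PL/SQL Process on page 1, with a Process Condition of
-- "Request = SAVE" set declaratively in the Apex builder):
--
--   UPDATE customers
--   SET    customer_name = :P1_CUSTOMER_NAME
--   WHERE  customer_id   = :P1_CUSTOMER_ID;

-- After: the same logic compiled on the database.
-- CUSTOMERS and P1_CUSTOMER_ID are hypothetical names.
CREATE OR REPLACE PROCEDURE customer_save IS
  -- page items are read once into local variables
  l_customer_id   NUMBER         := NV('P1_CUSTOMER_ID');   -- number item
  l_customer_name VARCHAR2(4000) := V('P1_CUSTOMER_NAME');  -- string item
BEGIN
  -- the Process Condition becomes ordinary PL/SQL logic
  IF APEX_APPLICATION.g_request = 'SAVE' THEN
    UPDATE customers c
    SET    c.customer_name = l_customer_name
    WHERE  c.customer_id   = l_customer_id;
  END IF;
END customer_save;
/
```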

My “p1_load” procedure typically looked something like this:

PROCEDURE p1_load IS

BEGIN

msg('p1_load');

member_load;

msg('p1_load Finished');

END p1_load;

My “p1_process” procedure typically looked something like this:

PROCEDURE p1_process IS

request VARCHAR2(100) := APEX_APPLICATION.g_request;

BEGIN

msg('p1_process ' || request);

CASE request

WHEN 'CREATE' THEN

member_insert;

WHEN 'SUBMIT' THEN

member_update;

WHEN 'DELETE' THEN

member_delete;

APEX_UTIL.clear_page_cache

(APEX_APPLICATION.g_flow_step_id);

WHEN 'COPY' THEN

member_update;

-- clear the member ID for a new record

sv('P1_MEMBER_ID');

ELSE

NULL;

END CASE;

msg('p1_process Finished');

END p1_process;

I left Validations and Branches in the application. I will come back to the Validations later – this is made easier in Apex 4.1 which provides an API for error messages.

It wasn’t until I went through this exercise that I realised what a great volume of PL/SQL logic I had in my application – and that PL/SQL was being dynamically compiled every time a page was loaded or processed. Moving it to the database meant that it was compiled once; it meant that I could more easily see duplicated code (and therefore modularise it so that the same routine would now be called from multiple pages). I found a number of places where the Apex application was forced to re-evaluate a condition multiple times (as it had been copied to multiple Processes on the page) – now, all those processes could be put together into one IF .. END IF block.

Once all that code is compiled on the database, I can now make a change to a schema object (e.g. drop a column from a table, or modify a view definition) and see immediately what impact it will have across the application. No more time bombs waiting to go off in the middle of a customer demo. I can also query ALL_DEPENDENCIES to see where an object is being used.
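For example, a query along these lines lists everything that depends on a given table. MEMBERS is the table used in the examples in this post; substitute whatever object you are about to change.

```sql
-- Which compiled objects reference the MEMBERS table in my schema?
SELECT d.owner
      ,d.type
      ,d.name
FROM   all_dependencies d
WHERE  d.referenced_owner = USER
AND    d.referenced_type  = 'TABLE'
AND    d.referenced_name  = 'MEMBERS'
ORDER BY d.owner, d.type, d.name;
```

Any PL/SQL still embedded in the Apex application will not show up here, which is one more reason to move it into packages.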

I then wanted to make a Mobile version of a set of seven pages. This was made much easier now – all I had to do was copy the pages, set their interface to Mobile, and then on the database, call the same procedures. Note that when you copy a page, Apex automatically updates all references to use the new page ID – e.g. if you copy Page 1 to Page 2, a Process that calls “CTRL_PKG.p1_load;” will be changed to call “CTRL_PKG.p2_load;” in the new page. This required no further work since my p1_load and p1_process procedures merely had a one-line call to another procedure, which used the APEX_APPLICATION.g_flow_step_id global to determine the page number when using page items. For example:

PROCEDURE member_load IS

p VARCHAR2(10) := 'P' || APEX_APPLICATION.g_flow_step_id;

member members%ROWTYPE;

BEGIN

msg('member_load ' || p);

member.member_id := nv(p || '_MEMBER_ID');

msg('member_id=' || member.member_id);

IF member.member_id IS NOT NULL THEN

SELECT *

INTO member_load.member

FROM members m

WHERE m.member_id = member_load.member.member_id;

sv(p || '_GIVEN_NAME', member.given_name);

sv(p || '_SURNAME', member.surname);

sv(p || '_SEX', member.sex);

sv(p || '_ADDRESS_LINE', member.address_line);

sv(p || '_STATE', member.state);

sv(p || '_SUBURB', member.suburb);

sv(p || '_POSTCODE', member.postcode);

sv(p || '_HOME_PHONE', member.home_phone);

sv(p || '_MOBILE_PHONE', member.mobile_phone);

sv(p || '_EMAIL_ADDRESS', member.email_address);

sv(p || '_VERSION_ID', member.version_id);

END IF;

msg('member_load Finished');

END member_load;

Aside: Note here the use of SELECT * INTO [rowtype-variable]. This is IMO the one exception to the “never SELECT *” rule of thumb. The compromise here is that the procedure will query the entire record every time, even if it doesn’t use some of the columns; however, this pattern makes the code leaner and more easily understood; also, I usually need almost all the columns anyway.

In my database package, I included the following helper functions at the top, and used them throughout the package:

DATE_FORMAT CONSTANT VARCHAR2(30) := 'DD-Mon-YYYY';

PROCEDURE msg (i_msg IN VARCHAR2) IS

BEGIN

APEX_DEBUG_MESSAGE.LOG_MESSAGE

($$PLSQL_UNIT || ': ' || i_msg);

END msg;

-- get date value

FUNCTION dv

(i_name IN VARCHAR2

,i_fmt IN VARCHAR2 := DATE_FORMAT

) RETURN DATE IS

BEGIN

RETURN TO_DATE(v(i_name), i_fmt);

END dv;

-- set value

PROCEDURE sv

(i_name IN VARCHAR2

,i_value IN VARCHAR2 := NULL

) IS

BEGIN

APEX_UTIL.set_session_state(i_name, i_value);

END sv;

-- set date

PROCEDURE sd

(i_name IN VARCHAR2

,i_value IN DATE := NULL

,i_fmt IN VARCHAR2 := DATE_FORMAT

) IS

BEGIN

APEX_UTIL.set_session_state

(i_name, TO_CHAR(i_value, i_fmt));

END sd;

PROCEDURE success (i_msg IN VARCHAR2) IS

BEGIN

msg('success: ' || i_msg);

IF apex_application.g_print_success_message IS NOT NULL THEN

apex_application.g_print_success_message

:= apex_application.g_print_success_message || '<br>';

END IF;

apex_application.g_print_success_message

:= apex_application.g_print_success_message || i_msg;

END success;

Another change I made was to move most of the logic embedded in report queries into views on the database. This led to more efficiencies as logic used in a few pages here and there could now be consolidated in a single view.

The challenges remaining were record view/edit pages generated by the Apex wizard – these used DML processes to load and insert/update/delete records. In most cases these were on simple pages with no other processing added; so I left them alone for now.

On a particularly complex page, I removed the DML processes and replaced them with my own package procedure which did the query, insert, update and delete. This greatly simplified things because I now had better control over exactly how these operations are done. The only downside to this approach is that I lose the built-in Apex lost update protection mechanism, which detects changes to a record done by multiple concurrent sessions. I had to ensure I built that logic into my package myself – I did this with a simple VERSION_ID column on the table (cf. Version Compare in “Avoiding Lost Updates”).
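A minimal sketch of that version check, assuming VERSION_ID is a number that is incremented on every update. The MEMBERS table and its columns come from the examples above; the procedure name, error number and message text are invented for illustration.

```sql
-- Hypothetical standalone variant of an update procedure with a
-- version check; in practice it would live in the package body.
CREATE OR REPLACE PROCEDURE member_update_checked IS
  p            VARCHAR2(10) := 'P' || APEX_APPLICATION.g_flow_step_id;
  l_member_id  members.member_id%TYPE  := NV(p || '_MEMBER_ID');
  l_version_id members.version_id%TYPE := NV(p || '_VERSION_ID');
  l_surname    members.surname%TYPE    := V(p || '_SURNAME');
BEGIN
  UPDATE members m
  SET    m.surname    = l_surname
        ,m.version_id = m.version_id + 1
  WHERE  m.member_id  = l_member_id
  AND    m.version_id = l_version_id;  -- matches only if the row is unchanged

  -- no row updated: someone else changed or deleted it since the page loaded
  IF SQL%ROWCOUNT = 0 THEN
    RAISE_APPLICATION_ERROR(-20001,
      'This record was changed by another session. Please reload and try again.');
  END IF;

  -- hand the new version number back to the page
  APEX_UTIL.set_session_state(p || '_VERSION_ID', l_version_id + 1);
END member_update_checked;
/
```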

The only downsides with this approach I’ve noted so far are:

- a little extra work when initially creating a page

- page item references are now strings (e.g. “v('P1_RECORD_ID')”) instead of bind variables – so a typo here and there can result in somewhat harder-to-find bugs

However, my application is now faster, more efficient, and on the whole easier to debug and maintain – so the benefits seem to outweigh the downsides.

My current client has a large number of APEX applications, one of which is a doozy. It is a mission-critical and complex application in APEX 4.0.2 used throughout the business, with an impressively long list of features, with an equally impressively long list of enhancement requests in the queue.

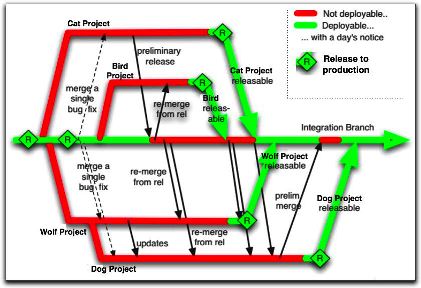

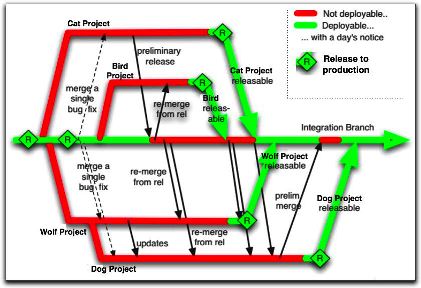

They always have a number of projects on the go with it, and they wanted us to develop two major revisions to it in parallel. In other words, we’d have v1.0 (so to speak) in Production, which still needed support and urgent defect fixing, v1.1 in Dev1 for project A, and v1.2 in Dev2 for project B. Oh, and we don’t know if Project A will go live before Project B, or vice versa. We have source control, so we should be able to branch the application and have separate teams working on each branch, right?

We said, “no way”. Trying to merge changes from a branch of an APEX app into an existing APEX app is not going to work, practically speaking. The merged script would most likely fail to run at all, or if it somehow magically runs, it’d probably break something.

So we pushed back a bit, and the terms of the project were changed so that development of project A would be done first, and the development of project B would follow straight after. So at least now we know that v1.2 can be built on top of v1.1 with no merge required. However, we still had the problem that production defect fixes would still need to be done on a separate version of the application in dev, and that they needed to continue being deployed to sit/uat/prod without carrying any changes from our projects.

The solution we have used is to have two copies of dev, each with its own schema, APEX application and version control folder: I’ll call them APP and APP2. We took an export of APP and created APP2, and instructed the developer who was tasked with production defect fixes to manually duplicate his changes in both APP and APP2. That way the defect fixes were “merged” in a manual fashion as we went along – also, it meant that the project development would gain the benefit of the defect fixes straight away. The downside was that everything worked and acted as if they were two completely different and separate applications, which made things tricky for integration.

Next, for developing project A and project B, we needed to be able to make changes for both projects in parallel, but we needed to be able to deploy just Project A to SIT/UAT/PROD without carrying the changes from project B with it. The solution was to use APEX’s Build Option feature (which has been around for donkey’s years but I never had a use for it until now), in combination with Conditional Compilation on the database schema.

I created a build option called (e.g.) “Project B”. I set Status = “Include”, and Default on Export = “Exclude”. What this means is that in dev, my Project B changes will be enabled, but when the app is exported for deployment to SIT etc the build option’s status will be set to “Exclude”. In fact, my changes will be included in the export script, but they just won’t be rendered in the target environments.

When we created a new page, region, item, process, condition, or dynamic action for project B, we would mark it with our build option “Project B”. If an existing element was to be removed or replaced by Project B, we would mark it as “{NOT} Project B”.

Any code on the database side that was only for project B would be switched on with conditional compilation, e.g.:

$IF $$projectB $THEN

PROCEDURE my_proc (new_param IN ...) IS...

$ELSE

PROCEDURE my_proc IS...

$END

When the code is compiled, if the projectB flag has been set (e.g. with ALTER SESSION SET PLSQL_CCFLAGS='projectB:TRUE';), the new code will be compiled.
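To see the effect in isolation, here is a small self-contained sketch. The procedure name ccflag_demo is invented; the flag name projectB is the one used above.

```sql
-- Set the flag for this session, then compile something that tests it.
ALTER SESSION SET PLSQL_CCFLAGS = 'projectB:TRUE';

CREATE OR REPLACE PROCEDURE ccflag_demo IS
BEGIN
  $IF $$projectB $THEN
    DBMS_OUTPUT.put_line('Project B code path was compiled');
  $ELSE
    DBMS_OUTPUT.put_line('Baseline code path was compiled');
  $END
END ccflag_demo;
/

-- The flags each object was compiled with are recorded in the dictionary.
SELECT name, type, plsql_ccflags
FROM   user_plsql_object_settings
WHERE  name = 'CCFLAG_DEMO';
```

If the flag is not set at all, $$projectB evaluates to NULL and the $ELSE branch is compiled, so the target environments need no special setup to get the baseline code.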

Build Options can be applied to:

- Pages & Regions

- Items & Buttons

- Branches, Computations & Processes

- Lists & List Entries

- LOV Entries

- Navigation Bar & Breadcrumb Entries

- Shortcuts

- Tabs & Parent Tabs

This works quite well for 90% of the changes required. Unfortunately it doesn’t handle the following scenarios:

1. Changed attributes for existing APEX components – e.g. some layout changes that would re-order the items in a form cannot be isolated to a build option.

2. Templates and Authorization Schemes cannot be marked with a build option.

On the database side, it is possible to detect at runtime if a build option has been enabled or not. In our case, a lot of our code was dependent on schema structural changes (e.g. new table columns) which would not compile in the target environments anyway – so conditional compilation was a better solution.
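One way to do the runtime check is to query the APEX dictionary. This sketch assumes the APEX_APPLICATION_BUILD_OPTIONS view and its BUILD_OPTION_STATUS column are available in your APEX version (check the views listed in the APEX builder before relying on it); the application ID 100 is a placeholder.

```sql
-- Returns the current status of the "Project B" build option
-- in a hypothetical application 100.
SELECT bo.build_option_name
      ,bo.build_option_status
FROM   apex_application_build_options bo
WHERE  bo.application_id    = 100
AND    bo.build_option_name = 'Project B';
```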

Apart from these caveats, the use of Build Options and Conditional Compilation has made the parallel development of these two projects feasible. Not perfect, mind you – but feasible. The best part? There’s a feature in APEX that allows you to view a list of all the components that have been marked with a Build Option – this is accessible from Shared Components -> Build Options -> Utilization (tab).

Enhancement Requests:

1. If Build Options could be improved to allow the scenarios listed above, I’d be glad. In a perfect world, I should be able to go into APEX, select “Project B”, and all my changes (adding/modifying/removing items, regions, pages, LOVs, auth schemes, etc) would be marked for Project B. I could switch to “Project A”, and my changes for Project B would be hidden. I think this would require the APEX engine to be able to have multiple definitions of each item, region or page, one for each build option. Merging changes between build options would need to be made possible, somehow – I don’t hold any illusions that this would be a simple feature for the APEX team to deliver.

2. Make the items/regions/pages listed in the Utilization tab clickable, so I can easily click through and change properties on them.

3. Another thing I’d like to see from the APEX team is builtin GUI support for exporting applications as a collection of individual scripts, each independently runnable – one for each page and shared component. I’m aware there is a Java tool for this purpose, but the individual scripts it generates cannot be run on their own. For example, if I export a page, I should be able to import that page into another copy of the same application (but with a different application ID) to replace the existing version of that page. I should be able to check in a change to an authorization scheme or an LOV or a template, and deploy just the script for that component to other applications, even in other workspaces. The export feature for all this should be available and supported using a PL/SQL API so that we can automate the whole thing and integrate it with our version control and deployment software.

4. What would be really cool, would be if the export scripts from APEX were structured in such a way that existing source code merge tools could merge different versions of the same APEX script and result in a usable APEX script. This already works quite well for our schema scripts (table scripts, views, packages, etc), so why not?

I love the APEX UI, it makes development so much easier and more convenient – and makes it easy to impress clients when I can quickly fix issues right there and then, using nothing but their computer and their browser, no additional software tools needed.

However, at my main client they have a fairly strict “scripted releases only” policy which is a really excellent policy – deployments must always be provided as a script to run on the command line. This makes for fewer errors and a little less work for the person who runs the deployment.

In APEX it’s easy to create deployment scripts that will run right in SQL*Plus. You can export a workspace, an application, images, etc. as scripts that will run in SQL*Plus with almost no problem. There are just a few little things to be aware of, and that’s the subject of this post.

1. Setting the session workspace

Normally if you log into APEX and import an application export script, it will be imported without problem. Also, if you log into SQL*Plus and try to run it, it will work fine as well.

The only difference comes if you want to deploy it into a workspace with a different ID from the one the application was exported from – e.g. if you have two workspaces on one database, one for dev and one for test. When you log into your test schema and try to run the script, you’ll see something like this:

SQL> @f118.sql

APPLICATION 118 - My Wonderful App

Set Credentials...

Check Compatibility...

Set Application ID...

begin

*

ERROR at line 1:

ORA-20001: Package variable g_security_group_id must be set.

ORA-06512: at "APEX_040100.WWV_FLOW_API", line 73

ORA-06512: at "APEX_040100.WWV_FLOW_API", line 342

ORA-06512: at line 4

Side note: if you’re using Windows, the SQL*Plus window will disappear too quickly for you to see the error (as the generated apex script sets it to exit on error) – so you should SPOOL to a log file to see the output.

To fix this issue, you need to run a little bit of PL/SQL before you run the export script, to override the workspace ID that the script should use:

declare

v_workspace_id NUMBER;

begin

select workspace_id into v_workspace_id

from apex_workspaces where workspace = 'TESTWORKSPACE';

apex_application_install.set_workspace_id (v_workspace_id);

apex_util.set_security_group_id

(p_security_group_id => apex_application_install.get_workspace_id);

apex_application_install.set_schema('TESTSCHEMA');

apex_application_install.set_application_id(119);

apex_application_install.generate_offset;

apex_application_install.set_application_alias('TESTAPP');

end;

/

This will tell the APEX installer to use a different workspace – and a different schema, application ID and alias as well, since 118 already exists on this server. If your app doesn’t have an alias you can omit that last step. Since we’re changing the application ID, we need to get all the other IDs (e.g. item and button internal IDs) throughout the application changed as well, so we call generate_offset which makes sure they won’t conflict.
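Put together, a deployment wrapper for SQL*Plus might look something like the following. The file names deploy_f118.sql, deploy_f118.log and set_install_target.sql are invented for this sketch; only f118.sql is the real export file from the example above.

```sql
-- deploy_f118.sql: hypothetical wrapper script, run from SQL*Plus

-- keep a log, so the output survives even if the window closes on error
SPOOL deploy_f118.log

-- hypothetical file holding the apex_application_install block shown above
@set_install_target.sql

-- the application export itself
@f118.sql

SPOOL OFF
```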

2. Installing Images

This is easy. Same remarks apply as above if you’re installing the image script into a different workspace.

3. Installing CSS Files

If you export your CSS files using the APEX export facility, these will work just as well as the above, and the same considerations apply if you’re installing into a different workspace.

If you created your CSS export file manually using Shared Components -> Cascading Style Sheets and clicking on your stylesheet and clicking “Display Create File Script“, you will find it doesn’t quite work as well as you might expect. It does work, except that the file doesn’t include a COMMIT at the end. Which normally wouldn’t be much of a problem, until you discover late that the person deploying your scripts didn’t know they should issue a commit (which, of course, would have merely meant the file wasn’t imported) – and they didn’t actually close their session straight away either, but just left it open on their desktop while they went to lunch or a meeting or something.

This meant that when I sent the test team onto the system, the application looked a little “strange”, and all the text was black instead of the pretty colours they’d asked for – because the CSS file wasn’t found. And when I tried to fix this by attempting to re-import the CSS, my session hung (should that be “hanged”? or “became hung”?) – because the deployment person’s session was still holding the relevant locks. Eventually they committed their session and closed it, and the autocommit nature of SQL*Plus ended up fixing the issue magically for us anyway. Which made things interesting the next day as I was trying to work out what had gone wrong, when the system was now working fine, as if innocently saying to me, “what problem?”.

4. A little bug with Data Load tables

We’re on APEX 4.1.1. If you have any CSV Import function in your application using APEX’s Data Loading feature, and you export the application from one schema and import it into another, you’ll find that the Data Load will simply not work, because the export incorrectly hardcodes the owner of the data load table in the call to create_load_table. This bug is described here: http://community.oracle.com/message/10309103?#10307103 and apparently there’s a patch for it.

wwv_flow_api.create_load_table(

p_id =>4846012021772170+ wwv_flow_api.g_id_offset,

p_flow_id => wwv_flow.g_flow_id,

p_name =>'IMPORT_TABLE',

p_owner =>'MYSCHEMA',

p_table_name =>'IMPORT_TABLE',

p_unique_column_1 =>'ID',

p_is_uk1_case_sensitive =>'Y',

p_unique_column_2 =>'',

p_is_uk2_case_sensitive =>'N',

p_unique_column_3 =>'',

p_is_uk3_case_sensitive =>'N',

p_wizard_page_ids =>'',

p_comments =>'');

The workaround I’ve been using is, before importing into a different schema, I just edit the application script to fix the p_owner in the calls to wwv_flow_api.create_load_table.

5. Automating the Export

I don’t know if this is improved in later versions, but at the moment you can only export Applications using the provided API – no other objects (such as images or CSS files). Just a sample bit of code (you’ll need to put other bits around this to do what you want with the clob – e.g. my script spits it out to serverout so that SQL*Plus will write it to a sql file):

l_clob := WWV_FLOW_UTILITIES.export_application_to_clob

(p_application_id => &APP_ID.

,p_export_ir_public_reports => 'Y'

,p_export_ir_private_reports => 'Y'

,p_export_ir_notifications => 'Y'

);
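One of those "other bits" is getting the CLOB out through DBMS_OUTPUT one line at a time. Here is a rough sketch of a helper for that; print_clob is an invented name and this is not the exact code from my script.

```sql
-- Hypothetical helper: write a CLOB to DBMS_OUTPUT, one line per put_line.
-- Limitation: assumes no single line is longer than 32767 characters.
CREATE OR REPLACE PROCEDURE print_clob (i_clob IN CLOB) IS
  l_len PLS_INTEGER := NVL(DBMS_LOB.getlength(i_clob), 0);
  l_pos PLS_INTEGER := 1;
  l_eol PLS_INTEGER;
BEGIN
  WHILE l_pos <= l_len LOOP
    -- find the next line feed at or after the current position
    l_eol := DBMS_LOB.instr(i_clob, CHR(10), l_pos);
    IF NVL(l_eol, 0) = 0 THEN
      l_eol := l_len + 1;  -- last line has no trailing line feed
    END IF;
    -- an empty line yields NULL here, which put_line prints as a blank line
    DBMS_OUTPUT.put_line(DBMS_LOB.substr(i_clob, l_eol - l_pos, l_pos));
    l_pos := l_eol + 1;
  END LOOP;
END print_clob;
/
```

In SQL*Plus you would then run SET SERVEROUTPUT ON SIZE UNLIMITED FORMAT WRAPPED, SPOOL to the target sql file, and call print_clob(l_clob) after the export call above.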

That’s all my tips for scripting APEX deployments for now. If I encounter any more I’ll add them here.

EDIT:

Related: “What’s the Difference” – comparing exports to find diffs on an APEX application – http://blog.sydoracle.com/2011/11/whats-difference.html

Every mature language, platform or system has little quirks, eccentricities, and anachronisms that aficionados just accept as “that’s the way it is” and that look weird, or outlandishly strange to newbies and outsiders. The more mature and more widely used the product is, the more resistance to change there will be – causing friction that helps to ensure these misfeatures survive.

Oracle, due to the priority placed on backwards compatibility, and its wide adoption, is not immune to this phenomenon. Unless a feature is actively causing things to break, as long as there are a significant number of sites using it, it’s not going to change. In some cases, the feature might be replaced and the original deprecated and eventually removed; but for core features such as SQL and PL/SQL syntax, especially the semantics of the basic data types, it is highly unlikely these will ever change.

So here I’d like to list what I believe are the things in Oracle that most frequently confuse people. These are not necessarily intrinsically complicated – just merely unintuitive, especially to a child of the 90’s or 00’s who was not around when these things were first implemented, when the idea of “best practice” had barely been invented; or to someone more experienced in other technologies like SQL Server or Java. These are things I see questions about over and over again – both online and in real life. Oh, and before I get flamed – another disclaimer: some of these are not unique to Oracle – some of them are more to do with the SQL standard; some of them are caused by a lack of understanding of the relational model of data.

Once you know them, they’re easy – you come to understand the reasons (often historical) behind them; eventually, the knowledge becomes so ingrained, it’s difficult to remember what it was like beforehand.

Top 10 Confusing Things in Oracle

-

-

-

-

-

-

-

-

-

-

Got something to add to the list? Drop me a note below.

More resources:

This book takes pride of place on my bookshelf. Highly recommended reading for anyone in the database industry.

If you haven’t seen Fabian Pascal’s blog before, it’s because he’s only just started it – but he’ll be publishing new material, as well as articles previously published at Database Debunkings, infamous for his fundamental, no-holds-barred, uncompromising take on what the Relational Model is, what it isn’t, and what that means for all professionals who design databases.

It was with sadness that I saw the site go relatively static over the past years, and to see it being revived is a fresh blast of cool air in a world that continues to be inundated by fads and misconceptions. Of particular note was the “THE VOCIFEROUS IGNORANCE HALL OF SHAME“… I’m looking forward to seeing the old vigorous debates that will no doubt be revived or rehashed.

The pure view of the Relational model of data is, perhaps, too idealistic for some – impractical for day-to-day use in a SQL-dominated world. Personally, I’ve found (although I cannot pretend to be an expert, in any sense, on this topic) that starting from a fundamentally pure model, unconstrained by physical limitations, conceived at an almost ideal, Platonic level, allows me to discover the simplest, most provably “correct” solution for any data modelling problem. At some stage I have to then “downgrade” it to a form that is convenient and pragmatic for implementation in a SQL database like Oracle; in spite of this, having that logical design in the back of my head helps to highlight potential inconsistencies or data integrity problems that must then be handled by the application.

That this situation is, in fact, not the best of all possible worlds, is something that we can all learn and learn again. Have a look, and see what you think: dbdebunk.blogspot.com.au.

A simple question: you’re designing an API to be implemented as a PL/SQL package, and you don’t (yet) know the full extent to which your API may be used, so you want to cover a reasonable variety of possible usage cases.

You have a function that will return a BOOLEAN – i.e. TRUE or FALSE (or perhaps NULL). Should you implement it this way, or should you return some other kind of value – e.g. a CHAR – e.g. ‘Y’ for TRUE or ‘N’ for FALSE; or how about a NUMBER – e.g. 1 for TRUE or 0 for FALSE?

This debate has raged since 2002, and probably earlier – e.g. http://asktom.oracle.com/pls/asktom/f?p=100:11:0::::P11_QUESTION_ID:6263249199595

Well, if I use a BOOLEAN, it makes the code simple and easy to understand – and callers can call my function in IF and WHILE statements without having to compare the return value to anything. However, I can’t call the function from a SQL statement, which can be annoyingly restrictive.

If I use a CHAR or NUMBER, I can now call the function from SQL, and store it in a table – but it makes the code just a little more complicated – now, the caller has to trust that I will ONLY return the values agreed on. Also, there is no way to formally restrict the values as agreed – I’d have to just document them in the package comments. I can help by adding some suitable constants in the package spec, but note that Oracle Forms cannot refer to these constants directly. Mind you, if the value is being stored in a table, a suitable CHECK constraint would be a good idea.

Perhaps a combination? Have a function that returns BOOLEAN, and add a wrapper function that converts the BOOLEAN into a ‘Y’ or ‘N’ as appropriate? That might be suitable.
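A minimal sketch of the combination approach. The package num_util and its functions are invented purely for illustration; any BOOLEAN function could be wrapped the same way.

```sql
CREATE OR REPLACE PACKAGE num_util IS
  -- for PL/SQL callers: usable directly in IF and WHILE
  FUNCTION is_even (i_n IN NUMBER) RETURN BOOLEAN;
  -- for SQL callers: returns 'Y', 'N' or NULL
  FUNCTION is_even_yn (i_n IN NUMBER) RETURN VARCHAR2;
END num_util;
/

CREATE OR REPLACE PACKAGE BODY num_util IS

  FUNCTION is_even (i_n IN NUMBER) RETURN BOOLEAN IS
  BEGIN
    RETURN MOD(i_n, 2) = 0;  -- NULL in, NULL out
  END is_even;

  FUNCTION is_even_yn (i_n IN NUMBER) RETURN VARCHAR2 IS
    l_result BOOLEAN := is_even(i_n);
  BEGIN
    RETURN CASE
             WHEN l_result     THEN 'Y'
             WHEN NOT l_result THEN 'N'
             ELSE NULL
           END;
  END is_even_yn;

END num_util;
/

-- The wrapper can be called from SQL; this returns 'Y'.
SELECT num_util.is_even_yn(4) AS four_is_even FROM dual;
```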

Personally, though, I hate the NUMBER (1 or 0) idea for PL/SQL. That’s so C-from-the-1970’s. Who codes like that anymore?

An interesting discussion on the PL/SQL Challenge blog here has led to me changing my mind about “the best way” to loop through a sparse PL/SQL associative array.

Normally, if we know that an array has been filled, with no gaps in indices, we would use a simple FOR LOOP:

DECLARE

TYPE t IS TABLE OF NUMBER INDEX BY BINARY_INTEGER;

a t;

BEGIN

SELECT x BULK COLLECT INTO a FROM mytable;

FOR i IN 1..a.COUNT LOOP -- BULK COLLECT fills from 1; also safe when no rows are returned

-- process a(i)

END LOOP;

END;

If, however, the array may be sparsely filled (i.e. there might be one or more gaps in the sequence), this was “the correct way” to loop through it:

Method A (First/Next)

DECLARE

TYPE t IS TABLE OF NUMBER INDEX BY BINARY_INTEGER;

a t;

i BINARY_INTEGER;

BEGIN

...

i := a.FIRST;

LOOP

EXIT WHEN i IS NULL;

-- process a(i)

i := a.NEXT(i);

END LOOP;

END;

Method A takes advantage of the fact that an associative array in Oracle is implemented internally as a linked list – the fastest way to “skip over” any gaps is to call the NEXT operator on the list for a given index.

Alternatively, one could still just loop through all the indices from the first to the last index; but the problem with this approach is that if an index is not found in the array, it will raise the NO_DATA_FOUND exception. Well, Method B simply catches the exception:

Method B (Handle NDF)

DECLARE

TYPE t IS TABLE OF NUMBER INDEX BY BINARY_INTEGER;

a t;

BEGIN

...

FOR i IN a.FIRST..a.LAST LOOP

BEGIN

-- process a(i)

EXCEPTION

WHEN NO_DATA_FOUND THEN

NULL;

END;

END LOOP;

END;

This code effectively works the same (with one important proviso*) as Method A. The difference, however, is in terms of relative performance. This method is much faster than Method A, if the array is relatively dense. If the array is relatively sparse, Method A is faster.

* It must be remembered that the NO_DATA_FOUND exception may be raised by a number of different statements in a program: if you use code like this, you must make sure that the exception was only raised by the attempt to access a(i), and not by some other code!

A third option is to loop through as in Method B, but call the EXISTS method on the array to check if the index is found, instead of relying on the NO_DATA_FOUND exception.

Method C (EXISTS)

DECLARE

TYPE t IS TABLE OF NUMBER INDEX BY BINARY_INTEGER;

a t;

BEGIN

...

FOR i IN a.FIRST..a.LAST LOOP

IF a.EXISTS(i) THEN

-- process a(i)

END IF;

END LOOP;

END;

The problem with this approach is that it effectively checks the existence of i in the array twice: once for the EXISTS check, and if found, again when actually referencing a(i). For a large array which is densely populated, depending on what processing is being done inside the loop, this could have a measurable impact on performance.

Bottom line: there is no “one right way” to loop through a sparse associative array. But there are some rules-of-thumb about performance we can take away:

- When the array is likely to be very sparsely populated over a large index range, use Method A (First/Next).

- When the array is likely to be very densely populated with a large number of elements, use Method B (Handle NDF). But watch how you catch the NO_DATA_FOUND exception!

- If you’re not sure, I’d tend towards Method A (First/Next) until performance problems are actually evident.

You probably noticed that I haven’t backed up any of these claims about performance with actual tests or results. You will find some in the comments to the aforementioned PL/SQL Challenge blog post; but I encourage you to log into a sandpit Oracle environment and test it yourself.
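If you want a starting point, a rough timing harness might look like this. The constants are arbitrary assumptions: change c_step to 1 for a dense array or to something large for a sparse one, and run it with serveroutput on. It prints elapsed time in centiseconds plus a checksum so you can confirm all three methods visited the same elements.

```sql
DECLARE
  TYPE t IS TABLE OF NUMBER INDEX BY BINARY_INTEGER;
  a       t;
  i       BINARY_INTEGER;
  k       PLS_INTEGER;
  n       NUMBER;
  t0      NUMBER;
  c_range CONSTANT PLS_INTEGER := 1000000;  -- highest index used
  c_step  CONSTANT PLS_INTEGER := 100;      -- gap between populated indices
BEGIN
  -- populate every c_step'th index
  k := c_step;
  WHILE k <= c_range LOOP
    a(k) := k;
    k := k + c_step;
  END LOOP;

  -- Method A (First/Next)
  t0 := DBMS_UTILITY.get_time;
  n  := 0;
  i  := a.FIRST;
  WHILE i IS NOT NULL LOOP
    n := n + a(i);
    i := a.NEXT(i);
  END LOOP;
  DBMS_OUTPUT.put_line('A: ' || (DBMS_UTILITY.get_time - t0) || ' cs, sum=' || n);

  -- Method B (Handle NDF)
  t0 := DBMS_UTILITY.get_time;
  n  := 0;
  FOR j IN a.FIRST..a.LAST LOOP
    BEGIN
      n := n + a(j);
    EXCEPTION
      WHEN NO_DATA_FOUND THEN
        NULL;  -- gap in the array
    END;
  END LOOP;
  DBMS_OUTPUT.put_line('B: ' || (DBMS_UTILITY.get_time - t0) || ' cs, sum=' || n);

  -- Method C (EXISTS)
  t0 := DBMS_UTILITY.get_time;
  n  := 0;
  FOR j IN a.FIRST..a.LAST LOOP
    IF a.EXISTS(j) THEN
      n := n + a(j);
    END IF;
  END LOOP;
  DBMS_OUTPUT.put_line('C: ' || (DBMS_UTILITY.get_time - t0) || ' cs, sum=' || n);
END;
/
```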

Today, grasshopper, you will learn the Way of the Template. The Templating Way is the path by which complex output is produced in a harmonious fashion.

The Templating Way does not cobble a string together from bits and pieces in linear fashion.

htp.p('<HTML><HEAD><TITLE>'||:title

||'</TITLE></HEAD><BODY>'

||:body||'</BODY></HTML>');

The Templating Way separates the Template from the Substitutions; by this division is harmony achieved.

DECLARE

template VARCHAR2(200)

:= q'[

<HTML>

<HEAD>

<TITLE> #TITLE# </TITLE>

</HEAD>

<BODY> #BODY# </BODY>

</HTML>

]';

BEGIN

htp.p(

REPLACE( REPLACE( template

,'#TITLE#', :title)

,'#BODY#', :body)

);

END;

It is efficient – each substitution expression is evaluated once and once only, even if required many times within the template.

The Templating Way makes dynamic SQL easy to write and debug. It makes bugs shallower.

SELECT REPLACE(REPLACE(REPLACE(q'[

CREATE OR REPLACE TRIGGER #OWNER#.#TABLE#_BI

BEFORE INSERT ON #OWNER#.#TABLE#

FOR EACH ROW

BEGIN

IF :NEW.#COLUMN# IS NULL THEN

SELECT #TABLE#_SEQ.NEXTVAL

INTO :NEW.#COLUMN#

FROM DUAL;

END IF;

END;

]', '#OWNER#', USER)

, '#TABLE#', cc.table_name)

, '#COLUMN#', cc.column_name) AS ddl

FROM user_constraints c, user_cons_columns cc

WHERE c.constraint_type = 'P'

AND c.constraint_name = cc.constraint_name

AND cc.column_name like '%NO';

The Templating Way is simple, but looks complex to the uninitiated. It is readable, and affords maintainability.

Are one or more usage examples enough to specify the requirements for something? For example:

rtrim('123000', '0'); would return '123'

No, as can be seen here: Oracle 8, SQL: RTRIM for string manipulation is not working as expected (Stackoverflow)
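To illustrate how a single example can under-specify a function (this is my own example, not necessarily the case in that question): the second argument to RTRIM is a set of characters to strip, not a suffix, and the '123000' example cannot tell you which.

```sql
SELECT RTRIM('123000', '0')      AS trailing_zeros      -- '123'
      ,RTRIM('next.txt', '.txt') AS not_a_suffix_strip  -- 'ne', not 'next'
FROM   dual;
```

In the second column, every trailing character that appears anywhere in '.txt' is removed, so the "xt" of "next" goes as well.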

When I read that question I thought of TDD (Test Driven Development), something I think I should be doing more of. As said here, however, “Are tests sufficient documentation? Very likely not, but they do form an important part of it.”

I’ve seen unit test cases used as a form of documentation. Generally they could be useful for this – to tell part of the story – but if they only consist of “enter this, expect that”, they will never be good enough to replace requirements documentation.

Footnote: How about the source code – is that sufficient as documentation? In one sense, yes – the source code is the best documentation of what the system does now. What’s lacking, however, is documentation of the business requirements – and this gap can be huge (see e.g. Agile Development and Requirements Management).

I just wanted to bring attention to some very interesting discussion (that’s been going on for years now) regarding Table APIs (TAPI) versus Transactional APIs (XAPI). Some very nice answers, as well as a bit of controversy 🙂
