715175039885776956103287888080

I needed to generate a random string with an exact length consisting of numeric digits, that I could send in an SMS to a user as a temporary account “pin”. DBMS_RANDOM.string is unsuitable for this purpose as its supported modes all include alphabetic characters. So I used DBMS_RANDOM.value instead. I call TRUNC afterwards to lop off the decimal portion.

FUNCTION random_pin (digits IN NUMBER)

RETURN NUMBER IS

BEGIN

IF digits IS NULL OR digits < 1 OR digits > 39 THEN

RAISE_APPLICATION_ERROR(-20000,'digits must be 1..39');

END IF;

IF digits = 1 THEN

RETURN TRUNC( DBMS_RANDOM.value(0,10) );

ELSE

RETURN TRUNC( DBMS_RANDOM.value(

POWER(10, digits-1)

,POWER(10, digits) ));

END IF;

END random_pin;

random_pin(digits => 6);

482372

EDIT 8/1/2016: added special case for 1 digit

ADDENDUM

Because the requirements of my “pin” function was to return a value that would remain unchanged when represented as an integer, it cannot return a string of digits starting with any zeros, which is why the lowerbound for the random function is POWER(10,digits-1). This, unfortunately, makes it somewhat less than perfectly random because zeroes are less frequent – if you call this function 1000 times for a given length of digits, then counted the frequency of each digit from 0..9, you will notice that 0 has a small but significantly lower frequency than the digits 1 to 9.

To fix this, the following function returns a random string of digits, with equal chance of returning a string starting with one or more zeroes:

FUNCTION random_digits (digits IN NUMBER)

RETURN VARCHAR2 IS

BEGIN

IF digits IS NULL OR digits < 1 OR digits > 39 THEN

RAISE_APPLICATION_ERROR(-20000,'digits must be 1..39');

END IF;

RETURN LPAD( TRUNC(

DBMS_RANDOM.value(0, POWER(10, digits))

), digits, '0');

END random_digits;

The above functions may be tested and downloaded from Oracle Live SQL.

When some of my users were using my system to send emails, they’d often copy-and-paste their messages from their favourite word processor, but when my system sent the emails they’d have question marks dotted around, e.g.

“Why doesn’t this work?”

would get changed to

?Why doesn?t? this work??

Simple fix was to detect and replace those fancy-pants quote characters with the equivalent html entities, e.g.:

function enc_chars (m in varchar2) return varchar2 is

begin

return replace(replace(replace(replace(m

,chr(14844060),'“')/*left double quote*/

,chr(14844061),'”')/*right double quote*/

,chr(96) ,'‘')/*left single quote*/

,chr(14844057),'’')/*right single quote*/

;

end enc_chars;

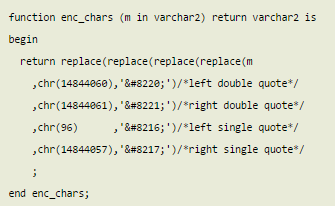

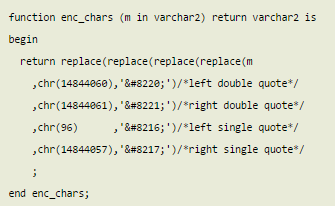

P.S. Stupid wordpress keeps mucking around with my code, trying to replace the html entities with the unencoded versions. In case this doesn’t work, here’s an image of what the above code is supposed to look like:

Disclaimer: I’m not posting to make me look better, we’ve all written code that we’re later ashamed of, and I’m no different!

This is some code I discovered buried in a system some time ago. I’ve kept a copy of it because it illustrates a number of things NOT to do:

FUNCTION password_is_valid

(in_password IN VARCHAR2)

-- do NOT copy this code!!! ...

RETURN VARCHAR2 IS

l_valid VARCHAR2(1);

l_sql VARCHAR2(32000);

CURSOR cur_rules IS

SELECT REPLACE(sql_expression

,'#PASSWORD#'

,'''' || in_password || ''''

) AS sql_expression

FROM password_rules;

BEGIN

FOR l_rec IN cur_rules LOOP

l_valid := 'N';

-- SQL injection, here we come...

l_sql := 'SELECT ''Y'' FROM DUAL ' || l_rec.sql_expression;

BEGIN

-- why not flood the shared pool with SQLs containing

-- user passwords in cleartext?

EXECUTE IMMEDIATE l_sql INTO l_valid;

EXCEPTION

WHEN NO_DATA_FOUND THEN

EXIT;

END;

IF l_valid = 'N' THEN

EXIT;

END IF;

END LOOP;

RETURN l_valid;

END password_is_valid;

I am pretty sure this code was no longer used, but I couldn’t be sure as I didn’t have access to all the instances that could run it.

Simple requirement – I’ve got a CLOB (e.g. after exporting an application from Apex from the command line) that I want to examine, and I’m running my script on my local client so I can’t use UTL_FILE to write it to a file. I just want to spit it out to DBMS_OUTPUT.

Strangely enough I couldn’t find a suitable working example on the web for how to do this, so wrote my own version. This was my first version – it’s verrrrrry slow because it calls DBMS_LOB for each individual line, regardless of how short the lines are. It was taking about a minute to dump a 3MB CLOB.

PROCEDURE dump_clob (clob IN OUT NOCOPY CLOB) IS

offset NUMBER := 1;

amount NUMBER;

len NUMBER := DBMS_LOB.getLength(clob);

buf VARCHAR2(32767);

BEGIN

WHILE offset < len LOOP

-- this is slowwwwww...

amount := LEAST(DBMS_LOB.instr(clob, chr(10), offset)

- offset, 32767);

IF amount < 0 THEN

-- this is slow...

DBMS_LOB.read(clob, amount, offset, buf);

offset := offset + amount + 1;

ELSE

buf := NULL;

offset := offset + 1;

END IF;

DBMS_OUTPUT.put_line(buf);

END LOOP;

END dump_clob;

This is my final version, which is orders of magnitude faster – about 5 seconds for the same 3MB CLOB:

PROCEDURE dump_str (buf IN VARCHAR2) IS

arr APEX_APPLICATION_GLOBAL.VC_ARR2;

BEGIN

arr := APEX_UTIL.string_to_table(buf, CHR(10));

FOR i IN 1..arr.COUNT LOOP

IF i < arr.COUNT THEN

DBMS_OUTPUT.put_line(arr(i));

ELSE

DBMS_OUTPUT.put(arr(i));

END IF;

END LOOP;

END dump_str;

PROCEDURE dump_clob (clob IN OUT NOCOPY CLOB) IS

offset NUMBER := 1;

amount NUMBER := 8000;

len NUMBER := DBMS_LOB.getLength(clob);

buf VARCHAR2(32767);

BEGIN

WHILE offset < len LOOP

DBMS_LOB.read(clob, amount, offset, buf);

offset := offset + amount;

dump_str(buf);

END LOOP;

DBMS_OUTPUT.new_line;

END dump_clob;

Jeffrey Kemp

17 September 2014

This is an idea for an enhancement to the PL/SQL syntax.

If I have the following declaration:

DECLARE

in_record mytable%ROWTYPE;

out_record mytable%ROWTYPE;

BEGIN

I can do this:

INSERT INTO mytable VALUES in_record;

I can also do this:

UPDATE mytable SET ROW = in_record WHERE ...;

I can do this, as long as I list each and every column, in the right order:

INSERT INTO mytable VALUES in_record

RETURNING cola, colb, colc INTO out_record;

But I can’t do this:

INSERT INTO mytable VALUES in_record

RETURNING ROW INTO out_record;

Can we make this happen, Oracle?

If your schemas are like those I deal with, almost every table has a doppelgänger which serves as a journal table; an “after insert, update or delete” trigger copies each and every change into the journal table. It’s a bit of a drag on performance for large updates, isn’t it?

I was reading through the docs (as one does) and noticed this bit:

Scenario: You want to record every change to hr.employees.salary in a new table, employee_salaries. A single UPDATE statement will update many rows of the table hr.employees; therefore, bulk-inserting rows into employee.salaries is more efficient than inserting them individually.

Solution: Define a compound trigger on updates of the table hr.employees, as in Example 9-3. You do not need a BEFORE STATEMENT section to initialize idx or salaries, because they are state variables, which are initialized each time the trigger fires (even when the triggering statement is interrupted and restarted).

http://docs.oracle.com/cd/B28359_01/appdev.111/b28370/triggers.htm#CIHFHIBH

The example shows how to use a compound trigger to not only copy the records to another table, but to do so with a far more efficient bulk insert. Immediately my journal table triggers sprang to mind – would this approach give me a performance boost?

The answer is, yes.

My test cases are linked below – emp1 is a table with an ordinary set of triggers, which copies each insert/update/delete into its journal table (emp1$jn) individually for each row. emp2 is a table with a compound trigger instead, which does a bulk insert of 100 journal entries at a time.

I ran a simple test case involving 100,000 inserts and 100,000 updates, into both tables; the first time, I did emp1 first followed by emp2; in the second time, I reversed the order. From the results below you’ll see I got a consistent improvement, shaving about 4-7 seconds off of about 21 seconds, an improvement of 19% to 35%. This is with the default value of 100 for the bulk operation; tweaking this might wring a bit more speed out of it (at the cost of using more memory per session).

Of course, this performance benefit only occurs for multi-row operations; if your application is only doing single-row inserts, updates or deletes you won’t see any difference in performance. However, I still think this method is neater (only one trigger) than the alternative so would recommend. The only reason I wouldn’t use this method is if my target might potentially be a pre-11g database, which doesn’t support compound triggers.

Here are the test case scripts if you want to check it out for yourself:

ordinary_journal_trigger.sql

compound_journal_trigger.sql

test_journal_triggers.sql

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.3.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

insert emp1 (test run #1)

100000 rows created.

Elapsed: 00:00:21.19

update emp1 (test run #1)

100000 rows updated.

Elapsed: 00:00:21.40

insert emp2 (test run #1)

100000 rows created.

Elapsed: 00:00:16.01

update emp2 (test run #1)

100000 rows updated.

Elapsed: 00:00:13.89

Rollback complete.

insert emp2 (test run #2)

100000 rows created.

Elapsed: 00:00:15.94

update emp2 (test run #2)

100000 rows updated.

Elapsed: 00:00:16.60

insert emp1 (test run #2)

100000 rows created.

Elapsed: 00:00:21.01

update emp1 (test run #2)

100000 rows updated.

Elapsed: 00:00:20.48

Rollback complete.

And here, in all its glory, is the fabulous compound trigger:

CREATE OR REPLACE TRIGGER emp2$trg

FOR INSERT OR UPDATE OR DELETE ON emp2

COMPOUND TRIGGER

FLUSH_THRESHOLD CONSTANT SIMPLE_INTEGER := 100;

TYPE jnl_t IS TABLE OF emp2$jn%ROWTYPE

INDEX BY SIMPLE_INTEGER;

jnls jnl_t;

rec emp2$jn%ROWTYPE;

blank emp2$jn%ROWTYPE;

PROCEDURE flush_array (arr IN OUT jnl_t) IS

BEGIN

FORALL i IN 1..arr.COUNT

INSERT INTO emp2$jn VALUES arr(i);

arr.DELETE;

END flush_array;

BEFORE EACH ROW IS

BEGIN

IF INSERTING THEN

IF :NEW.db_created_by IS NULL THEN

:NEW.db_created_by := NVL(v('APP_USER'), USER);

END IF;

ELSIF UPDATING THEN

:NEW.db_modified_on := SYSDATE;

:NEW.db_modified_by := NVL(v('APP_USER'), USER);

:NEW.version_id := :OLD.version_id + 1;

END IF;

END BEFORE EACH ROW;

AFTER EACH ROW IS

BEGIN

rec := blank;

IF INSERTING OR UPDATING THEN

rec.id := :NEW.id;

rec.name := :NEW.name;

rec.db_created_on := :NEW.db_created_on;

rec.db_created_by := :NEW.db_created_by;

rec.db_modified_on := :NEW.db_modified_on;

rec.db_modified_by := :NEW.db_modified_by;

rec.version_id := :NEW.version_id;

IF INSERTING THEN

rec.jn_action := 'I';

ELSIF UPDATING THEN

rec.jn_action := 'U';

END IF;

ELSIF DELETING THEN

rec.id := :OLD.id;

rec.name := :OLD.name;

rec.db_created_on := :OLD.db_created_on;

rec.db_created_by := :OLD.db_created_by;

rec.db_modified_on := :OLD.db_modified_on;

rec.db_modified_by := :OLD.db_modified_by;

rec.version_id := :OLD.version_id;

rec.jn_action := 'D';

END IF;

rec.jn_timestamp := SYSTIMESTAMP;

jnls(NVL(jnls.LAST,0) + 1) := rec;

IF jnls.COUNT >= FLUSH_THRESHOLD THEN

flush_array(arr => jnls);

END IF;

END AFTER EACH ROW;

AFTER STATEMENT IS

BEGIN

flush_array(arr => jnls);

END AFTER STATEMENT;

END emp2$trg;

Just to be clear: it’s not that it’s a compound trigger that impacts the performance; it’s the bulk insert. However, using the compound trigger made the bulk operation much simpler and neater to implement.

UPDATE 14/08/2014: I came across a bug in the trigger which caused it to not flush the array when doing a MERGE. I found I had to pass the array as a parameter internally.

Just a quick post to point out that the Alexandria PL/SQL Library has been updated to v1.7, including updates to the Amazon S3 package and a new package for generating iCalendar objects – more details on Morten’s blog.

Every mature language, platform or system has little quirks, eccentricities, and anachronisms that afficionados just accept as “that’s the way it is” and that look weird, or outlandishly strange to newbies and outsiders. The more mature, and more widely used is the product, the more resistance to change there will be – causing friction that helps to ensure these misfeatures survive.

Oracle, due to the priority placed on backwards compatibility, and its wide adoption, is not immune to this phenomenon. Unless a feature is actively causing things to break, as long as there are a significant number of sites using it, it’s not going to change. In some cases, the feature might be replaced and the original deprecated and eventually removed; but for core features such as SQL and PL/SQL syntax, especially the semantics of the basic data types, it is highly unlikely these will ever change.

So here I’d like to list what I believe are the things in Oracle that most frequently confuse people. These are not necessarily intrinsically complicated – just merely unintuitive, especially to a child of the 90’s or 00’s who was not around when these things were first implemented, when the idea of “best practice” had barely been invented; or to someone more experienced in other technologies like SQL Server or Java. These are things I see questions about over and over again – both online and in real life. Oh, and before I get flamed – another disclaimer: some of these are not unique to Oracle – some of them are more to do with the SQL standard; some of them are caused by a lack of understanding of the relational model of data.

Once you know them, they’re easy – you come to understand the reasons (often historical) behind them; eventually, the knowledge becomes so ingrained, it’s difficult to remember what it was like beforehand.

Top 10 Confusing Things in Oracle

-

-

-

-

-

-

-

-

-

-

Got something to add to the list? Drop me a note below.

More resources:

I recently saw this question on StackOverflow (“Is there any way to determine if a package has state in Oracle?”) which caught my attention.

I recently saw this question on StackOverflow (“Is there any way to determine if a package has state in Oracle?”) which caught my attention.

You’re probably already aware that when a package is recompiled, any sessions that were using that package won’t even notice the change; unless that package has “state” – i.e. if the package has one or more package-level variables or constants. The current value of these variables and constants is kept in the PGA for each session; but if you recompile the package (or modify something on which the package depends), Oracle cannot know for certain whether the new version of the package needs to reset the values of the variables or not, so it errs on the side of caution and discards them. The next time the session tries to access the package in any way, Oracle will raise ORA-04068, and reset the package state. After that, the session can try again and it will work fine.

Side Note: There are a number of approaches to solving the ORA-04068 problem, some of which are given as answers to this question here. Not all of them are appropriate for every situation. Another approach not mentioned there is to avoid or minimize it – move all the package variables to a separate package, which hopefully will be invalidated less often.

It’s quite straightforward to tell whether a given package has “state” and thus has the potential for causing ORA-04068: look for any variables or constants declared in the package specification or body. If you have a lot of packages, however, you might want to get a listing of all of them. To do this, you can use the new PL/Scope feature introduced in Oracle 11g.

select object_name AS package,

type,

name AS variable_name

from user_identifiers

where object_type IN ('PACKAGE','PACKAGE BODY')

and usage = 'DECLARATION'

and type in ('VARIABLE','CONSTANT')

and usage_context_id in (

select usage_id

from user_identifiers

where type = 'PACKAGE'

);

If you have compiled the packages in the schema with PL/Scope on (i.e. alter session set plscope_settings='IDENTIFIERS:ALL';), this query will list all the packages and the variables that mean they will potentially have state.

Before this question was raised, I hadn’t used PL/Scope for real; it was quite pleasing to see how easy it was to use to answer this particular question. This also illustrates a good reason why I like to hang out on Stackoverflow – it’s a great way to learn something new every day.

Recently I refactored some PL/SQL for sending emails – code that I wrote way back in 2004. The number of “WTF“‘s per minute has not been too high; however, I’ve cringed more times than I’d like…

Recently I refactored some PL/SQL for sending emails – code that I wrote way back in 2004. The number of “WTF“‘s per minute has not been too high; however, I’ve cringed more times than I’d like…

1. Overly-generic parameter types

When you send an email, it will have at least one recipient, and it may have many recipients. However, no email will have more than one sender. Yet, I wrote the package procedure like this:

TYPE address_type IS RECORD

(name VARCHAR2(100)

,email_address VARCHAR2(200)

);

TYPE address_list_type IS TABLE OF address_type

INDEX BY BINARY_INTEGER;

PROCEDURE send

(i_sender IN address_list_type

,i_recipients IN address_list_type

,i_subject IN VARCHAR2

,i_message IN VARCHAR2

);

Why I didn’t have i_sender be a simple address_type, I can’t remember. Internally, the procedure only looks at i_sender(1) – if a caller were to pass in a table of more than one sender, it raises an exception.

2. Functional programming to avoid local variables

Simple is best, and there’s nothing wrong with using local variables. I wish I’d realised these facts when I wrote functions like this:

FUNCTION address

(i_name IN VARCHAR2

,i_email_address IN VARCHAR2

) RETURN address_list_type;

FUNCTION address

(i_address IN address_list_type

,i_name IN VARCHAR2

,i_email_address IN VARCHAR2

) RETURN address_list_type;

All that so that callers can avoid *one local variable*:

EMAIL_PKG.send

(i_sender => EMAIL_PKG.address('joe','joe@company.com')

,i_recipients => EMAIL_PKG.address(

EMAIL_PKG.address(

'jill', 'jill@company.com')

,'bob', 'bob@company.com')

,i_subject => 'hello'

,i_message => 'world'

);

See what I did there with the recipients? Populating an array on the fly with just function calls. Smart eh? But rather useless, as it turns out; when we need to send multiple recipients, it’s usually populated within a loop of unknown sized, so this method doesn’t work anyway.

Go ahead – face your past and dig up some code you wrote 5 years ago or more. I think, if you don’t go “WTF!” every now and then, you probably haven’t learned anything or improved yourself in the intervening years. Just saying 🙂